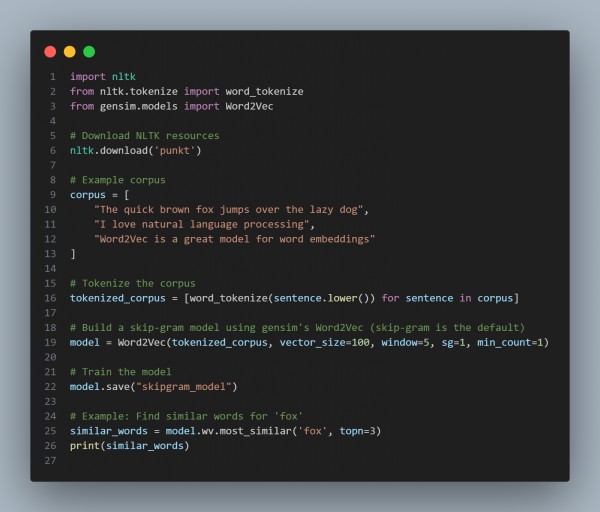

To build a skip-gram model pipeline with NLTK utilities, use NLTK for tokenization and preprocessing, then train gensim's Word2Vec model on the tokenized text (NLTK has no native skip-gram implementation). Here is the code you can refer to:

The code relies on the following key strategies:

- Tokenization: NLTK's word_tokenize splits each sentence into word tokens.

- Word2Vec: gensim's Word2Vec model is trained with sg=1, which selects the skip-gram architecture (the default, sg=0, is CBOW).

- Training: The model learns word embeddings by using each word to predict the words that appear within a fixed window around it.

- Similarity: Once trained, the model can be queried for the words most similar to a given word (e.g., "fox").
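To make the skip-gram training step concrete, here is a minimal pure-Python sketch of how (centre, context) training pairs are formed from one tokenized sentence. The function name `skipgram_pairs` is hypothetical and not part of NLTK or gensim. gensim's actual training differs in details (for example, it randomly shrinks the window for each word and uses negative sampling by default), so treat this only as an illustration of what "context" means for sg=1.

```python
from typing import List, Tuple

def skipgram_pairs(tokens: List[str], window: int = 2) -> List[Tuple[str, str]]:
    """Return (centre, context) pairs for every word within `window` positions."""
    pairs = []
    for i, centre in enumerate(tokens):
        # Look up to `window` words to each side, clipped at the sentence edges.
        start = max(0, i - window)
        end = min(len(tokens), i + window + 1)
        for j in range(start, end):
            if j != i:  # a word is not its own context
                pairs.append((centre, tokens[j]))
    return pairs

tokens = ["the", "quick", "brown", "fox", "jumps"]
pairs = skipgram_pairs(tokens, window=1)
print(pairs)
# [('the', 'quick'), ('quick', 'the'), ('quick', 'brown'), ('brown', 'quick'),
#  ('brown', 'fox'), ('fox', 'brown'), ('fox', 'jumps'), ('jumps', 'fox')]
```

The skip-gram model is trained so that each centre word's vector is good at predicting its context words; words that share many contexts (like "fox" and "dog" in similar sentences) therefore end up with similar vectors.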

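The code prints the words most similar to "fox", each with its cosine similarity score; on a corpus this small, the exact words and scores can vary from run to run.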

Hence, this pipeline preprocesses text, builds the skip-gram model using Word2Vec from gensim, and retrieves similar words.
