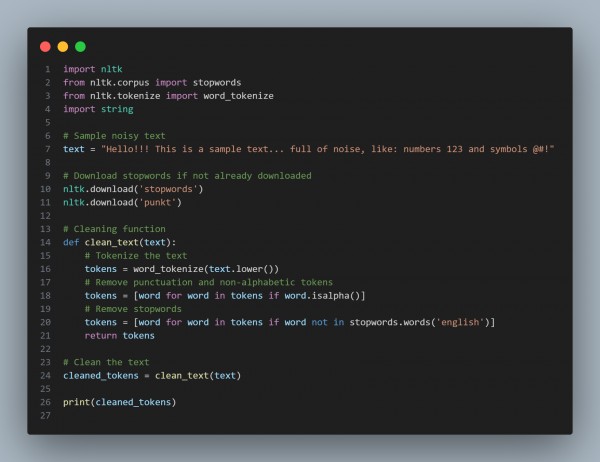

To clean noisy text data for training generative models with NLTK, tokenize the text, lowercase it, and remove stopwords, punctuation, and other non-alphabetic tokens. Here is a code reference you can use:

The code above uses the following:

- `word_tokenize`: Breaks text into tokens (words and punctuation).

- Lowercasing: Converts text to lowercase for uniformity, so that "The" and "the" are treated as the same token.

- Remove non-alphabetic tokens: Filters out numbers, punctuation, and symbols using `isalpha()`.

- Remove stopwords: Eliminates common words like "is", "and", "the" using the `stopwords.words()` list.

With these steps, you can clean noisy text data for training generative models using NLTK.
