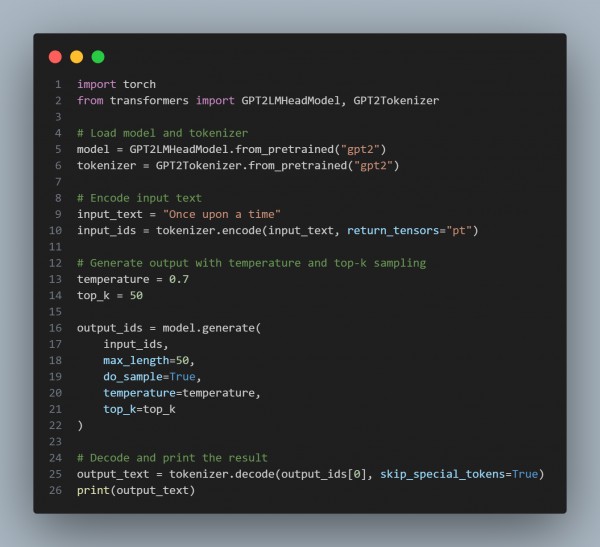

The best way to implement temperature and top-k sampling for controlled text generation in GPT-based models is to adjust the logits before sampling: divide the logits by the temperature, keep only the k largest values, then apply softmax and sample from the result.
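A minimal sketch of that logit adjustment, written in plain Python so it runs without any dependencies. The function name `sample_top_k`, its defaults, and the toy logits are assumptions made for illustration; in a real GPT-based model the logits would come from the model's forward pass for the last position, usually as a tensor rather than a list.

```python
import math
import random


def sample_top_k(logits, temperature=1.0, k=50, rng=random):
    """Sample one token id from raw logits using temperature and top-k filtering.

    Hypothetical helper for illustration; not taken from any library.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    k = max(1, min(k, len(logits)))  # clamp k to the vocabulary size

    # 1. Temperature: T < 1 sharpens the distribution, T > 1 flattens it.
    scaled = [x / temperature for x in logits]

    # 2. Top-k: keep only the ids of the k largest scaled logits.
    top_ids = sorted(range(len(scaled)), key=lambda i: scaled[i], reverse=True)[:k]

    # 3. Softmax weights over the survivors (subtract the max for stability).
    peak = max(scaled[i] for i in top_ids)
    weights = [math.exp(scaled[i] - peak) for i in top_ids]

    # 4. Draw one token id in proportion to those weights.
    return rng.choices(top_ids, weights=weights, k=1)[0]


# Toy logits over a 5-token vocabulary (made-up values).
logits = [2.0, 1.0, 0.5, -1.0, -3.0]
token_id = sample_top_k(logits, temperature=0.7, k=3)
```

With `k=3`, only token ids 0, 1, and 2 can ever be returned here, and setting `k=1` reduces the sketch to greedy decoding.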

The key parameters are temperature, which controls randomness (lower values make the output more deterministic, higher values make it more diverse), and top-k, which limits sampling to the k highest-probability tokens.

Hence, by adjusting the logits in this way, you can implement temperature and top-k sampling in GPT-based models for controlled generation.
