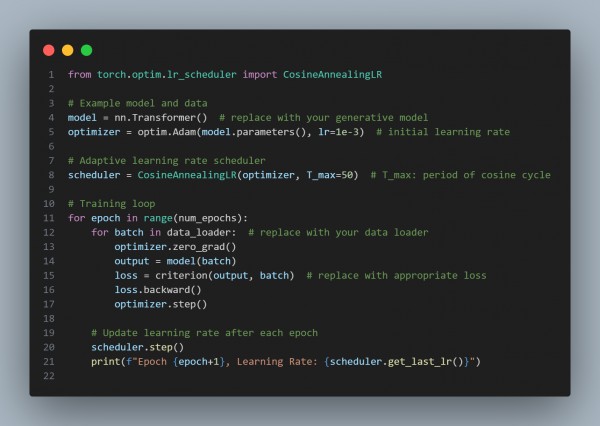

To implement an adaptive learning rate for large generative models, you can combine an adaptive optimizer with a learning rate scheduler.

The approach uses PyTorch to pair an adaptive optimizer such as Adam with a learning rate schedule:

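- Adam: adapts the step size for each parameter using running estimates of the first and second moments of its gradients.

- `CosineAnnealingLR`: decays the base learning rate along a cosine curve as training progresses, which helps avoid overshooting late in training.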
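The two components listed above can be sketched roughly as follows. This is a minimal illustration and not necessarily the exact code from the original answer: the tiny `nn.Sequential` model, the random batches, the reconstruction loss, and the values of `base_lr`, `min_lr`, and `total_steps` are all placeholder assumptions standing in for a real generative model and its training setup.

```python
import torch
from torch import nn
from torch.optim import Adam
from torch.optim.lr_scheduler import CosineAnnealingLR

torch.manual_seed(0)

# Hypothetical stand-in for a large generative model.
model = nn.Sequential(nn.Linear(16, 32), nn.ReLU(), nn.Linear(32, 16))

# Illustrative hyperparameters (assumptions, tune for your own model).
base_lr, min_lr, total_steps = 1e-3, 1e-5, 100

# Adam adapts the step size per parameter.
optimizer = Adam(model.parameters(), lr=base_lr)
# CosineAnnealingLR decays the base learning rate from base_lr to min_lr
# along a cosine curve over total_steps scheduler steps.
scheduler = CosineAnnealingLR(optimizer, T_max=total_steps, eta_min=min_lr)

lr_history = []
for step in range(total_steps):
    x = torch.randn(8, 16)                      # dummy batch
    loss = nn.functional.mse_loss(model(x), x)  # toy reconstruction loss

    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

    lr_history.append(scheduler.get_last_lr()[0])  # rate used for this step
    scheduler.step()                               # update the rate for the next step

final_lr = scheduler.get_last_lr()[0]
print(f"first lr: {lr_history[0]:.2e}, final lr: {final_lr:.2e}")
```

Note that `scheduler.step()` is called after `optimizer.step()`, which is the order PyTorch expects. Here the scheduler is stepped once per batch; stepping once per epoch is also common, in which case `T_max` should be the number of epochs.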

Combining an adaptive optimizer with a learning rate schedule in this way gives you an adaptive learning rate for large generative models.
