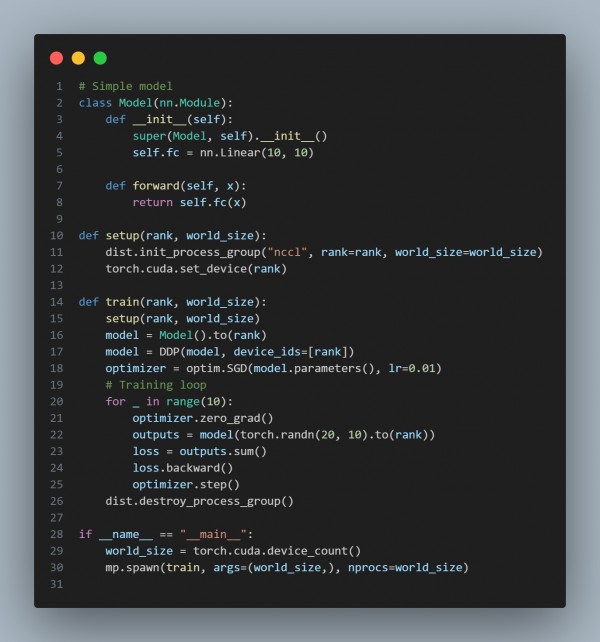

You can scale up the generative AI model across multiple GPUs or distributed environments by referring to the code snippet below:

In this code, model training is scaled across multiple GPUs using DistributedDataParallel (DDP) in PyTorch as follows:

Setup Function: Initializes a distributed process group and assigns each process to a GPU by its rank for parallel training.

Train Function:

- Calls the setup function to configure the process group and set the GPU device.

- Wraps the model with DDP, which synchronizes gradients across processes during backpropagation.

- Runs a training loop where each process computes gradients and updates the model in sync with others.

In the main block, we retrieve the number of available GPUs (world_size) and use torch.multiprocessing.spawn to launch one process per GPU, each of which executes the train function.

Related Post: model training across multiple GPUs
