In your docker run command, you can limit how much CPU a container uses, either as a number of cores' worth of CPU time or as a set of specific cores. This helps control resources, particularly on shared hosts or when running several containers at once.

Configure Docker for Specific Use of CPU Cores

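Using the --cpus Option: The --cpus flag caps the CPU time a container may use at the equivalent of the specified number of cores; fractional values such as 1.5 are allowed. It does not pin the container to particular cores. This is the simplest way to limit CPU usage.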

With --cpus=2, the container may use up to 2 CPU cores' worth of CPU time. This suits CPU-heavy workloads that must not monopolize the host system's CPU.
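A minimal sketch of the --cpus case, wrapped in a small shell function so the limit is a parameter. The function name run_capped and the nginx image in the usage note are placeholders chosen for illustration, not part of the original answer:

```shell
# Start a detached container limited to the CPU time of <cpus> cores.
# Usage: run_capped <cpus> <image> [command...]
# run_capped is a hypothetical helper name.
run_capped() {
  cpus=$1
  shift
  docker run -d --cpus="$cpus" "$@"
}
```

For instance, `run_capped 2 nginx` expands to `docker run -d --cpus=2 nginx`. Afterwards, `docker inspect --format '{{.HostConfig.NanoCpus}}' <container>` should report the limit in billionths of a CPU.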

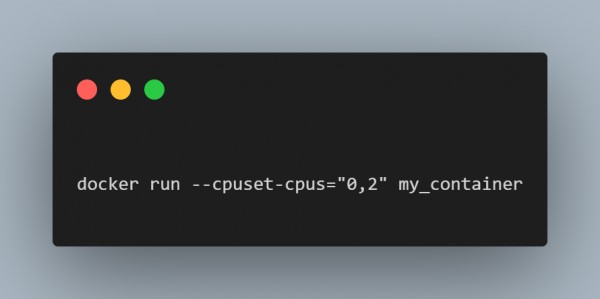

Using CPU Affinity with the --cpuset-cpus Flag: For finer-grained control, the --cpuset-cpus flag restricts a container to specific cores, given by core ID as a comma-separated list (0,2) or a range (0-3).

With --cpuset-cpus=0,2, the container can only run on cores 0 and 2. This is particularly useful when you want to reserve specific cores for specific tasks, such as high-priority workloads or dedicated compute tasks.
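The pinning described above could be sketched like this. As before, the helper name run_pinned and the nginx image are assumptions made for the example:

```shell
# Start a detached container pinned to the given host cores.
# <cores> is a comma-separated list ("0,2") or a range ("0-3").
# Usage: run_pinned <cores> <image> [command...]
# run_pinned is a hypothetical helper name.
run_pinned() {
  cores=$1
  shift
  docker run -d --cpuset-cpus="$cores" "$@"
}
```

Calling `run_pinned 0,2 nginx` runs `docker run -d --cpuset-cpus=0,2 nginx`. Core IDs are zero-based, so a four-core host exposes IDs 0 through 3.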

Using CPU Shares for Relative Prioritization: When you need relative CPU prioritization between containers, --cpu-shares assigns each container a relative weight (the default is 1024).

Containers with higher --cpu-shares values get a larger share of CPU cycles when they compete for CPU resources. The flag neither caps usage nor ties a container to specific cores; it only divides CPU time proportionally among containers on the same host when the CPU is contended.
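To make the weighting concrete, here is a rough sketch with two hypothetical helpers: run_weighted starts a container with a given weight, and share_percent estimates the percentage of CPU time one container would get if every container were fully busy on the same cores. That estimate is a simplified model (weight divided by the sum of all weights), not something Docker reports:

```shell
# Start a detached container with a relative CPU weight.
# Usage: run_weighted <shares> <image> [command...]
# run_weighted is a hypothetical helper name.
run_weighted() {
  shares=$1
  shift
  docker run -d --cpu-shares="$shares" "$@"
}

# Estimate the CPU percentage for one weight under full contention.
# Usage: share_percent <weight> <every weight, including this one...>
# Integer arithmetic, so the result is rounded down.
share_percent() {
  mine=$1
  shift
  total=0
  for w in "$@"; do
    total=$((total + w))
  done
  echo $((mine * 100 / total))
}
```

With one container at 1024 and another at 512, `share_percent 1024 1024 512` prints 66 and `share_percent 512 1024 512` prints 33, a 2:1 split. When the other container is idle, either one can still use the spare CPU.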

Considerations

Resource Planning: Before setting CPU limits, analyze your workload's demands; a limit that is too low throttles the workload and lowers performance.

Scaling in Orchestrated Environments: In Kubernetes, the equivalent settings go in the pod's resource specification, under resources.requests and resources.limits for each container. This preserves CPU isolation as workloads scale across a cluster.
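As an illustration of the Kubernetes side, the snippet below writes a minimal Pod manifest with CPU requests and limits. The file name, pod name, container name, image, and CPU values are all placeholders chosen for this sketch:

```shell
# Write a minimal Pod manifest with CPU requests and limits.
# All names and values here are illustrative placeholders.
cat > cpu-limited-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: cpu-limited
spec:
  containers:
    - name: app
      image: nginx
      resources:
        requests:
          cpu: "500m"   # half a core requested for scheduling
        limits:
          cpu: "2"      # at most two cores' worth of CPU time
EOF

# To create the pod on a cluster:
#   kubectl apply -f cpu-limited-pod.yaml
```

The limit plays a role similar to --cpus, while the request tells the scheduler how much CPU to set aside for the container.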

Combining these options lets you control CPU usage in Docker containers, allocate resources properly, and prevent any single container from consuming all of the host's CPU.
