Techniques and Code Snippets to Accelerate Generative Model Inference Time

Accelerating Inference Time

Model Quantization:

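- Reduce model size and speed up arithmetic by converting weights from float32 to int8. This cuts weight storage to roughly a quarter, usually at a small cost in accuracy.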
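
To make the float32-to-int8 conversion concrete, here is a minimal sketch of symmetric per-tensor quantization in plain Python. The function names `quantize_int8` and `dequantize_int8` and the sample weights are illustrative assumptions, not part of any library; in practice a framework's quantization tooling would handle this step.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: floats -> (int8-range ints, scale)."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Map the largest magnitude to 127; guard against an all-zero tensor.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize_int8(quantized, scale):
    """Recover approximate float values from the integers and the scale."""
    return [q * scale for q in quantized]


weights = [0.42, -1.27, 0.05, 0.9]  # illustrative weights (assumption)
q_weights, scale = quantize_int8(weights)
restored = dequantize_int8(q_weights, scale)
print(q_weights, scale)
```

Each restored value differs from the original by at most about half the scale, which is the accuracy trade-off quantization makes for the smaller size.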

Batch Processing:

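- Process multiple inputs in a single model call to use computational resources effectively. This raises throughput, although very large batches can increase the latency of each individual request.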
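
As a rough illustration of batching, the sketch below groups inputs into fixed-size chunks and calls the model once per chunk rather than once per input. `fake_model` is a hypothetical stand-in for a real model call that accepts a list of prompts, and the batch size of 4 is an arbitrary choice.

```python
def batched(items, batch_size):
    """Yield consecutive slices of at most batch_size items."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def run_in_batches(model_fn, inputs, batch_size):
    """Call model_fn once per batch and collect outputs in input order."""
    outputs = []
    for batch in batched(inputs, batch_size):
        outputs.extend(model_fn(batch))
    return outputs


batch_sizes_seen = []  # records how many inputs each model call received


def fake_model(batch):
    """Hypothetical stand-in for a model that processes a list of prompts."""
    batch_sizes_seen.append(len(batch))
    return [text.upper() for text in batch]


prompts = [f"prompt {i}" for i in range(10)]
results = run_in_batches(fake_model, prompts, batch_size=4)
print(batch_sizes_seen)
```

Ten prompts are served with three model calls instead of ten; the best batch size depends on the model and the available memory.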

Use Efficient Libraries:

- Leverage libraries like ONNX Runtime for optimized execution.
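
A minimal sketch of running an exported model with ONNX Runtime might look like the following. It assumes the `onnxruntime` package is installed, that a model has already been exported to a file such as `model.onnx` (a hypothetical path), and that the model takes a single input. The helper names `load_session` and `predict` are illustrative.

```python
def load_session(model_path):
    """Create an ONNX Runtime session once and reuse it for every request."""
    import onnxruntime as ort  # third-party package: pip install onnxruntime
    return ort.InferenceSession(model_path, providers=["CPUExecutionProvider"])


def predict(session, input_array):
    """Run one forward pass; input_array must match the model's shape and dtype."""
    input_name = session.get_inputs()[0].name  # assumes a single-input model
    return session.run(None, {input_name: input_array})


# Example usage (assumes "model.onnx" exists and `batch` is a NumPy array
# with the shape and dtype the model expects):
# session = load_session("model.onnx")
# outputs = predict(session, batch)
```

Creating the session is the expensive part, so it is kept separate from `predict` and done once at startup.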

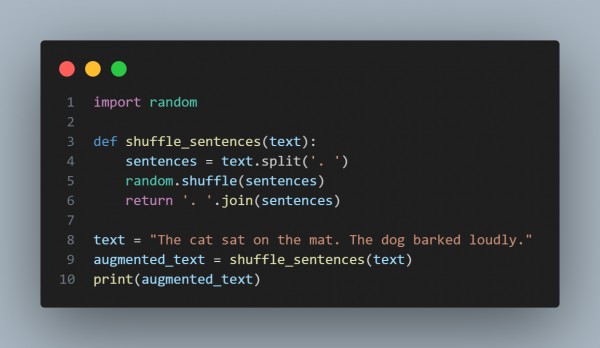

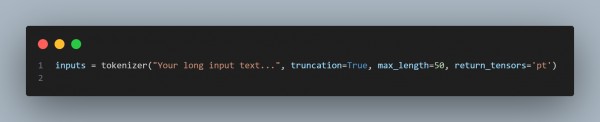

Reduce Input Size:

- Truncate inputs to a maximum length to reduce processing time, since inference cost grows with the number of input tokens.
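
Truncation can be sketched as below. The whitespace split stands in for a real tokenizer, and `truncate_tokens` with its `keep` parameter is a hypothetical helper; whether to keep the start or the end of the input depends on where the important context sits.

```python
def truncate_tokens(tokens, max_length, keep="head"):
    """Keep at most max_length tokens from the start ("head") or end ("tail")."""
    if max_length <= 0:
        return []
    if keep == "tail":
        return tokens[-max_length:]
    return tokens[:max_length]


text = "the quick brown fox jumps over the lazy dog"
tokens = text.split()  # whitespace split stands in for a real tokenizer
head = truncate_tokens(tokens, max_length=4)
tail = truncate_tokens(tokens, max_length=4, keep="tail")
print(head, tail)
```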

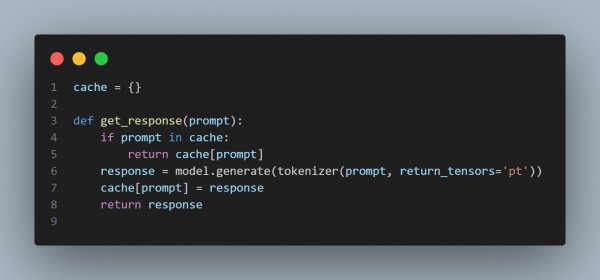

Caching Responses:

- Cache responses to frequent queries so that repeated requests skip inference entirely.
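
One simple way to cache responses is the standard library's `functools.lru_cache`, sketched below. `slow_generate` is a hypothetical stand-in for the expensive model call, and the cache size of 1024 is an arbitrary choice. This approach assumes that returning the same answer for an identical prompt is acceptable.

```python
from functools import lru_cache

model_calls = []  # records every prompt that actually reached the model


def slow_generate(prompt):
    """Hypothetical stand-in for an expensive model call."""
    model_calls.append(prompt)
    return f"response to: {prompt}"


@lru_cache(maxsize=1024)
def cached_generate(prompt):
    """Return a cached response when the exact same prompt was seen before."""
    return slow_generate(prompt)


first = cached_generate("What is quantization?")
second = cached_generate("What is quantization?")  # served from the cache
print(len(model_calls), cached_generate.cache_info())
```

Only exact string matches hit the cache, so normalizing prompts (for example, stripping whitespace) before the lookup can raise the hit rate.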
