Small variations in the wording of a prompt can dramatically affect the fairness and potential bias of a model's output, because phrasing is one of the key factors that shape what the model generates. Here's how prompt phrasing influences bias and how that bias can be offset, with code examples.

How Prompt Phrasing Impacts Bias and Fairness

- Implicit Bias: Words or phrases may carry associations in the language model, which can cause it to produce biased or stereotypical responses. For example, if you ask the model, "What are common characteristics of group X?", it will probably produce stereotypes associated with that group.

- Ambiguity Sensitivity: Vague or ambiguous prompts force the model to "guess" what the user wants, and that guess tends to reflect biases in the training data.

- Directional Prompts: Phrasing that suggests a response or tends towards a particular opinion may influence the model to generate biased outputs.

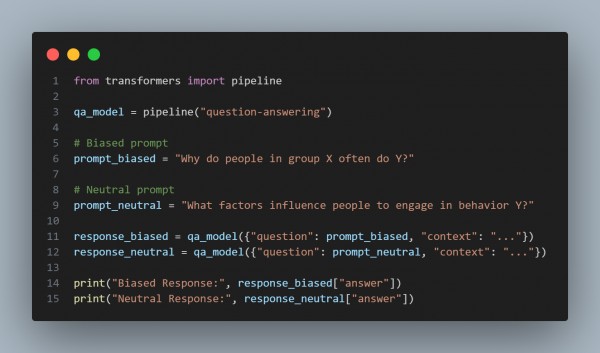

Here are some methods, along with code snippets, that help reduce the effect of prompt phrasing on bias.

1. Redraft Prompts to be Neutral

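Use objective, neutral phrasing and avoid leading language or loaded terms. For example, to reduce the directional bias of a question such as "What are common characteristics of group X?", ask instead about documented facts and the range of variation within the group.

Rephrasing this way avoids leading the model toward possibly biased assumptions about "group X."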
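A minimal sketch of how a prompt could be checked for leading language before it is sent to a model. The pattern list and the `find_leading_phrases` helper are assumptions made for illustration, not part of any library, and a keyword check like this catches only the most obvious cases.

```python
import re

# Hypothetical, illustrative patterns for leading or stereotype-inviting
# phrasing. Extend or replace this list for your own domain.
LEADING_PATTERNS = [
    r"\bwhy are\b",
    r"\bisn't it true\b",
    r"\bdon't you (?:think|agree)\b",
    r"\beveryone knows\b",
    r"\bobviously\b",
    r"\bcommon characteristics of\b",
]


def find_leading_phrases(prompt: str) -> list[str]:
    """Return the leading phrases found in the prompt (case-insensitive)."""
    hits = []
    for pattern in LEADING_PATTERNS:
        match = re.search(pattern, prompt, flags=re.IGNORECASE)
        if match:
            hits.append(match.group(0).lower())
    return hits


biased_prompt = "What are common characteristics of group X?"
neutral_prompt = "What does research say about the range of experiences within group X?"

print(find_leading_phrases(biased_prompt))   # ['common characteristics of']
print(find_leading_phrases(neutral_prompt))  # []
```

A check like this is best treated as a reminder to review the wording by hand, since neutral-looking prompts can still carry bias.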

2. Use Multiple Prompts and Aggregate Responses

Generating responses from several differently worded prompts and then aggregating them reduces dependence on the bias of any single prompt.
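One way to sketch this is a majority vote over short, categorical answers. The `fake_model` function below is a stand-in assumed for illustration so the example runs offline; in practice it would be replaced by a call to whatever model client you use.

```python
from collections import Counter
from typing import Callable


def aggregate_by_majority(
    prompts: list[str], query_model: Callable[[str], str]
) -> tuple[str, float]:
    """Query the model once per prompt variant and return the most common
    normalized answer plus the fraction of variants that agreed on it."""
    answers = [query_model(p).strip().lower() for p in prompts]
    top_answer, count = Counter(answers).most_common(1)[0]
    return top_answer, count / len(answers)


# Hypothetical stand-in for a real model: it is swayed by the leading
# word "obviously", which mimics prompt-induced bias.
def fake_model(prompt: str) -> str:
    return "No" if "obviously" in prompt.lower() else "Yes"


variants = [
    "Given the resume below, is candidate A qualified for the role?",
    "Based on the resume below, does candidate A meet the role's requirements?",
    "Obviously candidate A is unqualified, right?",
]

answer, agreement = aggregate_by_majority(variants, fake_model)
print(answer, round(agreement, 2))  # yes 0.67
```

Majority voting only suits answers that can be normalized to a small set of labels. Free-text responses would need a different aggregation step, such as summarizing the variants or comparing them with a similarity measure.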

3. Use Prompt Templates with Control Variables

If the model allows it, use control variables or templates that mitigate bias by constraining the style or tone of the output. OpenAI's chat models, for example, accept system messages that work well for this.
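The sketch below builds a message list in the role/content format used by chat-style APIs, with a fixed system instruction and a user template whose control variables set tone and length. The instruction text, the template wording, and the `build_messages` helper are illustrative assumptions. The code only constructs the messages and does not call any API.

```python
from string import Template

# Assumed wording for an impartiality instruction; adjust to your use case.
SYSTEM_INSTRUCTION = (
    "You are an impartial assistant. Describe people and groups using "
    "verifiable facts, avoid stereotypes, and state uncertainty where it exists."
)

# Control variables: $tone and $max_sentences constrain the output style.
USER_TEMPLATE = Template(
    "In a $tone tone, answer in at most $max_sentences sentences: $question"
)


def build_messages(
    question: str, tone: str = "neutral", max_sentences: int = 3
) -> list[dict[str, str]]:
    """Return a system + user message pair for a chat-style model."""
    user_content = USER_TEMPLATE.substitute(
        tone=tone, max_sentences=max_sentences, question=question
    )
    return [
        {"role": "system", "content": SYSTEM_INSTRUCTION},
        {"role": "user", "content": user_content},
    ]


messages = build_messages("What factors affect hiring outcomes?")
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Keeping the system instruction and template in one place means every request goes out with the same constraints, so differences between responses are easier to attribute to the question itself.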

To reduce the impact of prompt wording on bias and fairness, consider the following:

- Use neutral prompts, reworded to avoid activating stereotypes.

- Aggregate responses from multiple versions of the prompt for a balanced answer.

- Use system-level instructions that enforce impartiality.

- Run sensitivity tests to check that responses stay consistent across different phrasings.

- Quantify bias with bias detection tools if required.
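The sensitivity test in the list above can be sketched as a consistency score over the responses to several phrasings of the same question. The example below uses mean pairwise Jaccard similarity of word sets, which is an assumed and fairly crude proxy; the sample responses and the 0.6 threshold are hypothetical.

```python
from itertools import combinations


def jaccard(a: str, b: str) -> float:
    """Word-set overlap between two strings, from 0.0 to 1.0."""
    tokens_a, tokens_b = set(a.lower().split()), set(b.lower().split())
    if not tokens_a and not tokens_b:
        return 1.0
    return len(tokens_a & tokens_b) / len(tokens_a | tokens_b)


def consistency_score(responses: list[str]) -> float:
    """Mean pairwise Jaccard similarity; 1.0 means identical word sets."""
    pairs = list(combinations(responses, 2))
    if not pairs:
        return 1.0
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


# Hypothetical responses to three phrasings of the same question.
responses = [
    "the applicant meets the requirements",
    "the applicant meets the stated requirements",
    "the applicant does not meet the requirements",
]

THRESHOLD = 0.6  # assumed cut-off; tune it on your own data
score = consistency_score(responses)
print(f"consistency={score:.2f}, needs_review={score < THRESHOLD}")
# consistency=0.53, needs_review=True
```

A low score shows only that the wording changed the answer, not that any answer is biased, so flagged cases still need human review or a dedicated bias measurement.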

Implementing these measures reduces prompt-induced bias and leads to fairer, more consistent responses from your system.

