To mitigate hallucinations in AI-generated customer support content, use retrieval-augmented generation (RAG) to ground responses in factual data and enforce strict response validation.
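
One simple way to apply the response-validation step is to check, after generation, that each sentence of the model's answer is backed by the retrieved facts, and to fall back to a safe reply otherwise. The sketch below assumes a crude word-overlap heuristic. The function names `is_supported` and `validate_response`, the 0.5 overlap threshold and the fallback text are hypothetical, chosen for illustration. Stronger checks, such as a natural-language-inference model, may be preferable in practice.

```python
import re

# Hypothetical fallback message; adapt it to your support workflow.
FALLBACK = "I'm not sure about that. Let me connect you with a support agent."


def _tokens(text: str) -> set:
    """Lowercased alphanumeric words in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def is_supported(sentence: str, facts: list[str], min_overlap: float = 0.5) -> bool:
    """A sentence counts as supported if enough of its words appear in at least one fact."""
    words = _tokens(sentence)
    if not words:
        return True
    return any(len(words & _tokens(f)) / len(words) >= min_overlap for f in facts)


def validate_response(answer: str, facts: list[str]) -> str:
    """Return the answer only if every sentence is grounded in the facts; otherwise the fallback."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", answer.strip()) if s]
    if sentences and all(is_supported(s, facts) for s in sentences):
        return answer
    return FALLBACK
```

With the fact "Refunds are processed within 5-7 business days after approval." as context, an answer that restates the processing time passes unchanged. An answer that adds an unsupported promise, such as a voucher, is replaced by the fallback.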

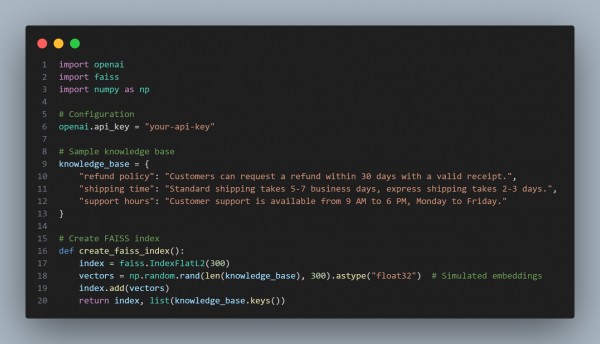

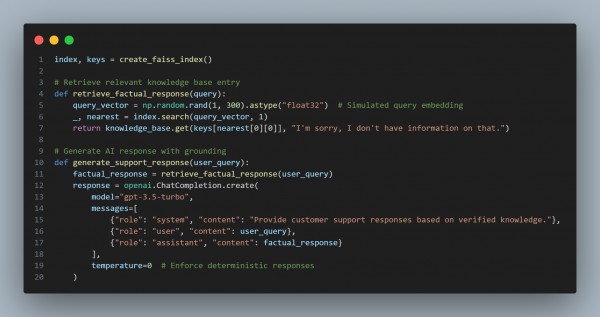

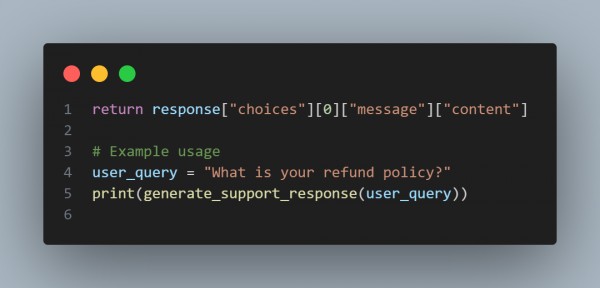

Here is the code snippet you can refer to:

In the above code we are using the following key points:

- Retrieval-Augmented Generation (RAG) – Retrieves relevant factual data before AI generation.

- Vector Search with FAISS – Matches user queries to the closest knowledge base entry.

- Grounded AI Responses – Incorporates retrieved facts into AI-generated responses.

- Low-Temperature Setting – Uses temperature=0 so the model favors its most likely tokens, reducing randomness and making outputs more consistent and less speculative.

- Preventing Misinformation – Reduces unsupported claims by having the AI answer from the retrieved factual sources rather than from its own assumptions.
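
The retrieval and grounding steps listed above can be sketched without external dependencies. This is a minimal illustration, not the code from the screenshots. The knowledge-base entries, the stopword list, the bag-of-words `embed` function (standing in for a real embedding model) and the 0.1 similarity threshold are all hypothetical. The exhaustive cosine search plays the role that FAISS plays in the original code. Rather than calling a specific LLM API, the function returns a request payload with `temperature=0`.

```python
import math
import re
from collections import Counter
from typing import Optional

# Hypothetical knowledge base; in practice these entries would be embedded and indexed (e.g. with FAISS).
KNOWLEDGE_BASE = [
    "Refunds are processed within 5-7 business days after approval.",
    "Premium support is available 24/7 via live chat.",
    "Passwords can be reset from the account settings page.",
]

# Small illustrative stopword list so words like "the" or "is" don't create false matches.
STOPWORDS = {"a", "an", "the", "is", "are", "be", "can", "do", "how", "what",
             "from", "to", "of", "via", "my", "i"}


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding' standing in for a real embedding model."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return Counter(w for w in words if w not in STOPWORDS)


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, k: int = 2) -> list[tuple[float, str]]:
    """Exhaustive nearest-neighbour search, the job a flat FAISS index does for dense vectors."""
    q = embed(query)
    scored = [(cosine(q, embed(doc)), doc) for doc in KNOWLEDGE_BASE]
    return sorted(scored, reverse=True)[:k]


def build_grounded_request(query: str, min_score: float = 0.1) -> Optional[dict]:
    """Build an LLM request grounded in retrieved facts, or None if nothing relevant was found."""
    hits = [doc for score, doc in retrieve(query) if score >= min_score]
    if not hits:
        return None  # No supporting fact: hand off to a human instead of letting the model guess.
    facts = "\n".join(f"- {doc}" for doc in hits)
    prompt = (
        "Answer the customer using ONLY the facts below. "
        "If the facts do not contain the answer, say you don't know.\n"
        f"Facts:\n{facts}\n\nQuestion: {query}"
    )
    # temperature=0 minimizes sampling randomness; send this payload with your LLM client of choice.
    return {"prompt": prompt, "temperature": 0}
```

For example, `build_grounded_request("How long do refunds take?")` returns a prompt that contains only the refund fact. An off-topic question such as `"What is the weather today?"` returns `None`, which a support bot could treat as a signal to hand off to a human agent.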

Hence, mitigating hallucinations in AI-generated customer support automation is best achieved through retrieval-augmented generation (RAG), grounding responses in a structured knowledge base, and enforcing deterministic output settings.
