To improve text generation quality when fine-tuning GPT-3 on a small dataset, combine data augmentation, prompt engineering, and few-shot learning to get the most out of limited data.
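Here is a rough illustration of few-shot prompting and prompt engineering. The sketch builds a prompt from three parts: an instruction, a few worked examples that all share one fixed layout, and the new input. The helper `build_few_shot_prompt`, the `Input:`/`Output:` labels, and the `\n\n###\n\n` separator are assumptions chosen for illustration. None of them come from the original snippet.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str,
    examples: List[Tuple[str, str]],
    query: str,
    separator: str = "\n\n###\n\n",  # assumed delimiter between blocks
) -> str:
    """Assemble an instruction, a few worked input/output pairs, and the new query (hypothetical helper)."""
    parts = [instruction.strip()]
    for source, target in examples:
        # Every demonstration uses the same layout so the model can copy the pattern.
        parts.append(f"Input: {source}\nOutput: {target}")
    # The last block leaves "Output:" open for the model to complete.
    parts.append(f"Input: {query}\nOutput:")
    return separator.join(parts)


if __name__ == "__main__":
    prompt = build_few_shot_prompt(
        "Rewrite each product note as a one-sentence customer-facing description.",
        [
            ("battery 10h, weight 1.2kg", "A lightweight 1.2 kg laptop with up to 10 hours of battery life."),
            ("waterproof, 50m", "A watch that stays waterproof down to 50 meters."),
        ],
        "noise cancelling, 30h playback",
    )
    print(prompt)
```

Keeping every demonstration in the same layout is the prompt-engineering part. The model sees a consistent pattern and only has to fill in the final `Output:`.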

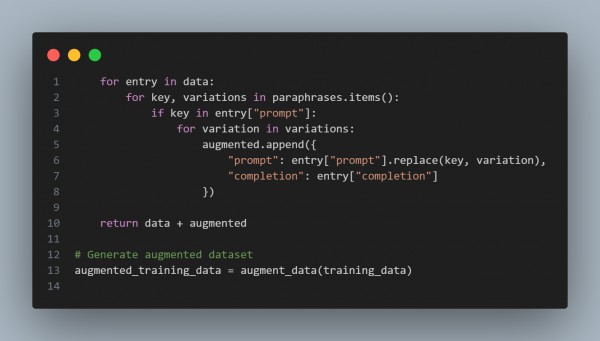

Here is the code snippet you can refer to:

The code above relies on the following key points:

- Data Augmentation – Expands a small dataset by paraphrasing prompts, giving the model more varied training examples.

- Few-Shot Learning – Uses a small set of consistently structured examples so GPT-3 can pick up the task pattern from limited data.

- Prompt Engineering – Varies the phrasing of prompts so the model generalizes better to wording it has not seen.

- Fine-Tuning API Integration – Automates dataset upload for GPT-3 fine-tuning via OpenAI's API.

- Scalability – Easily extendable by adding more augmentation techniques like synonym replacement or GPT-based rewording.
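The original snippet is only available as an image, so here is a hedged Python sketch of the same workflow. It does three things:

1. Uses simple template-based rewording as a stand-in for paraphrasing.
2. Writes prompt/completion pairs as JSONL.
3. Optionally starts a fine-tuning job with the OpenAI Python SDK (v1+).

The following are all assumptions: the templates, the separator, the ` END` stop marker, the helper names, and the default model `babbage-002`. Check OpenAI's fine-tuning documentation for the current data format and which models support fine-tuning.

```python
import json
from typing import Dict, List

# Hypothetical rewording templates; each one wraps the original prompt text.
TEMPLATES = [
    "Could you explain this? {q}",
    "Please answer the following question: {q}",
    "Question: {q}",
]
SEPARATOR = "\n\n###\n\n"  # assumed fixed marker appended to every prompt
STOP = " END"  # assumed marker appended to every completion


def augment(records: List[Dict[str, str]], variants: int = 2) -> List[Dict[str, str]]:
    """Return each original record plus up to `variants` reworded copies of its prompt."""
    augmented = []
    for rec in records:
        prompts = [rec["prompt"]] + [t.format(q=rec["prompt"]) for t in TEMPLATES[:variants]]
        # dict.fromkeys removes duplicate prompts while keeping their order.
        for prompt in dict.fromkeys(prompts):
            augmented.append({
                "prompt": prompt + SEPARATOR,
                # Leading space and stop marker follow the legacy prompt/completion convention.
                "completion": " " + rec["completion"].strip() + STOP,
            })
    return augmented


def write_jsonl(rows: List[Dict[str, str]], path: str) -> None:
    """Write one JSON object per line (JSONL), the file format used for fine-tuning data."""
    with open(path, "w", encoding="utf-8") as f:
        for row in rows:
            f.write(json.dumps(row, ensure_ascii=False) + "\n")


def start_fine_tune(path: str, model: str = "babbage-002") -> str:
    """Upload the JSONL file and create a fine-tuning job; returns the job ID.

    Needs `pip install openai` (v1+) and OPENAI_API_KEY. The import is deferred
    so the augmentation code above runs without the SDK installed.
    """
    from openai import OpenAI

    client = OpenAI()
    with open(path, "rb") as f:
        uploaded = client.files.create(file=f, purpose="fine-tune")
    job = client.fine_tuning.jobs.create(training_file=uploaded.id, model=model)
    return job.id


if __name__ == "__main__":
    seed_data = [
        {"prompt": "What is overfitting?",
         "completion": "A model memorizing its training data instead of learning general patterns."},
    ]
    for row in augment(seed_data):
        print(json.dumps(row))
```

To run the full pipeline, call `write_jsonl(augment(seed_data), "train.jsonl")` and then `start_fine_tune("train.jsonl")`. Swapping the templates for synonym replacement or model-generated paraphrases only changes `augment`.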

In short, data augmentation, prompt variation, and consistently structured fine-tuning data can help GPT-3 generalize better and produce more accurate text when only a small dataset is available.
