To deploy a Large Language Model (LLM) with Amazon SageMaker and LangChain, host the model behind a SageMaker inference endpoint and register that endpoint as an LLM in LangChain, so that prompts and chains call the hosted model like any other LLM.

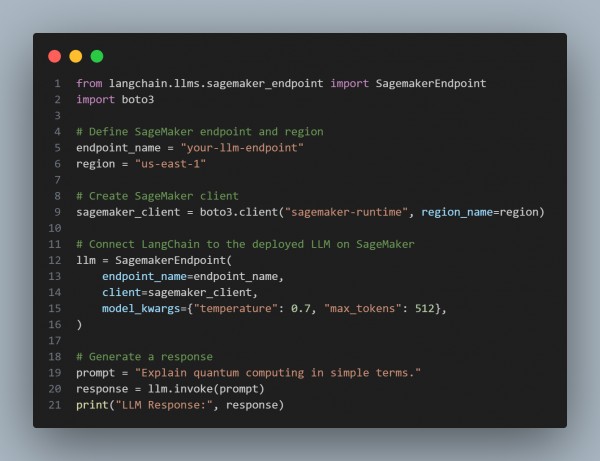

Here is the code snippet you can refer to:
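As a minimal sketch of the LangChain side, the code below wraps an already deployed endpoint with LangChain's `SagemakerEndpoint` class and a custom `LLMContentHandler`. Several things here are assumptions rather than part of the original answer: the request schema (`{"inputs": ..., "parameters": ...}`) and response schema (`[{"generated_text": ...}]`) follow a common Hugging Face style container and may differ for your model, the parameter name `max_new_tokens` is container-specific, and the import path is the `langchain_community` one (newer setups may use the `langchain_aws` package instead). The helper names `encode_request`, `decode_response`, and `build_llm` are hypothetical.

```python
import json


def encode_request(prompt: str, model_kwargs: dict) -> bytes:
    """Serialize a prompt and generation parameters into a JSON request body."""
    # Assumed request schema; adjust it to what your model container expects.
    return json.dumps({"inputs": prompt, "parameters": model_kwargs}).encode("utf-8")


def decode_response(raw: bytes) -> str:
    """Extract the generated text from the assumed response schema."""
    # Assumed response schema: [{"generated_text": "..."}]
    return json.loads(raw.decode("utf-8"))[0]["generated_text"]


def build_llm(endpoint_name: str, region_name: str):
    """Wrap an existing SageMaker endpoint as a LangChain LLM."""
    # Imported lazily so the two helpers above work without LangChain installed.
    from langchain_community.llms import SagemakerEndpoint
    from langchain_community.llms.sagemaker_endpoint import LLMContentHandler

    class ContentHandler(LLMContentHandler):
        content_type = "application/json"
        accepts = "application/json"

        def transform_input(self, prompt: str, model_kwargs: dict) -> bytes:
            return encode_request(prompt, model_kwargs)

        def transform_output(self, output) -> str:
            # LangChain hands over the response body stream from the endpoint.
            return decode_response(output.read())

    return SagemakerEndpoint(
        endpoint_name=endpoint_name,
        region_name=region_name,
        # Illustrative values; the parameter names depend on the container.
        model_kwargs={"temperature": 0.7, "max_new_tokens": 256},
        content_handler=ContentHandler(),
    )
```

With an endpoint already deployed and AWS credentials configured, `build_llm("my-llm-endpoint", "us-east-1").invoke("Explain Amazon SageMaker in one sentence.")` would return the generated text; the endpoint name and region are placeholders.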

The code above covers the following points:

- Deploys an LLM on Amazon SageMaker: Uses a pre-trained model or a custom fine-tuned model.

- Seamless Integration with LangChain: Connects SageMaker endpoints as an LLM source.

- AWS Boto3 for API Calls: Uses `boto3.client("sagemaker-runtime")` to interact with SageMaker.

- Supports Custom Model Parameters: Passes generation settings such as temperature and max_tokens to control the responses; the exact parameter names depend on the model container.

- Ensures Scalability: SageMaker endpoints can scale with request volume once an auto scaling policy is attached to the endpoint.
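The Boto3 and model-parameter points can be sketched as a direct call to the endpoint, without LangChain. This is a rough illustration under stated assumptions: the JSON request and response shapes and the `max_new_tokens` parameter name match a common Hugging Face style container and may not match yours, and the function names `invoke_llm` and `make_runtime_client` are hypothetical. The client is passed in as an argument so the function can be exercised with a stub.

```python
import json


def invoke_llm(client, endpoint_name, prompt, temperature=0.7, max_new_tokens=256):
    """Send one prompt to a SageMaker endpoint and return the generated text."""
    # Assumed request schema; adjust it to what your model container expects.
    payload = {
        "inputs": prompt,
        "parameters": {"temperature": temperature, "max_new_tokens": max_new_tokens},
    }
    response = client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Accept="application/json",
        Body=json.dumps(payload).encode("utf-8"),
    )
    # The response body is a stream; read it fully before parsing.
    result = json.loads(response["Body"].read())
    # Assumed response schema: [{"generated_text": "..."}]
    return result[0]["generated_text"]


def make_runtime_client(region_name):
    """Create the SageMaker runtime client (requires boto3 and AWS credentials)."""
    import boto3  # imported lazily so invoke_llm can be tested without boto3

    return boto3.client("sagemaker-runtime", region_name=region_name)
```

A call would look like `invoke_llm(make_runtime_client("us-east-1"), "my-llm-endpoint", "Hello")`, where the region and endpoint name are placeholders for your own deployment.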

Hence, deploying an LLM using SageMaker and LangChain provides a scalable, fully managed, and seamlessly integrated solution for real-world applications.
