Converting GPT-2 to TensorFlow Lite (TFLite) can fail because of unsupported operations, model size limitations, or other errors raised by the converter.

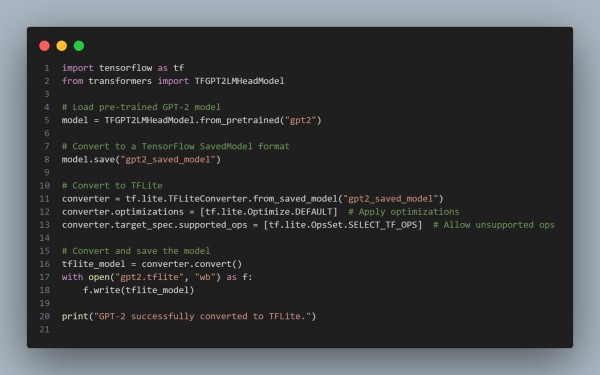

Here is the code snippet you can refer to:
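A minimal sketch of such a conversion is shown below. It assumes TensorFlow 2.x, a `transformers` release that still ships the TensorFlow model classes (`TFGPT2LMHeadModel`), and the public `gpt2` checkpoint. The function name `convert_gpt2_to_tflite`, the fixed sequence length of 64 and the output path `gpt2.tflite` are hypothetical, illustrative choices rather than part of any library API.

```python
# Context window of the public GPT-2 checkpoints (n_positions = 1024).
GPT2_MAX_POSITIONS = 1024


def convert_gpt2_to_tflite(model_name="gpt2", seq_len=64, output_path="gpt2.tflite"):
    """Hypothetical helper: convert a Hugging Face GPT-2 checkpoint to a .tflite file."""
    # Validate the input length before importing the heavy dependencies.
    if not 0 < seq_len <= GPT2_MAX_POSITIONS:
        raise ValueError(f"seq_len must be in 1..{GPT2_MAX_POSITIONS}, got {seq_len}")

    # Imported lazily so this module can be loaded without TensorFlow installed.
    import tensorflow as tf
    from transformers import TFGPT2LMHeadModel

    # 1. Load GPT-2 as a TensorFlow (Keras) model.
    model = TFGPT2LMHeadModel.from_pretrained(model_name)

    # 2. Trace a serving function with a fixed input shape (an assumption of
    #    this sketch); static shapes are easier for the converter to handle.
    @tf.function(input_signature=[tf.TensorSpec([1, seq_len], tf.int32, name="input_ids")])
    def serving(input_ids):
        return {"logits": model(input_ids).logits}

    converter = tf.lite.TFLiteConverter.from_concrete_functions(
        [serving.get_concrete_function()], model
    )

    # 3. Default optimizations: dynamic-range quantization of the weights.
    converter.optimizations = [tf.lite.Optimize.DEFAULT]

    # 4. Allow TensorFlow ops for anything without a built-in TFLite kernel.
    converter.target_spec.supported_ops = [
        tf.lite.OpsSet.TFLITE_BUILTINS,
        tf.lite.OpsSet.SELECT_TF_OPS,
    ]

    tflite_model = converter.convert()
    with open(output_path, "wb") as f:
        f.write(tflite_model)
    return output_path


if __name__ == "__main__":
    # Downloads the checkpoint and writes the converted model to disk.
    print("Wrote", convert_gpt2_to_tflite())
```

Fixing the input shape is a design choice made here to keep the converted graph static; a different `seq_len` (up to 1024) can be passed if longer prompts are needed.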

The code above does the following:

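- Loads GPT-2 with Hugging Face Transformers: obtains the model in TensorFlow format first, because the TFLite converter works on TensorFlow models (SavedModel, Keras model, or concrete functions).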

- Uses the TensorFlow Lite Converter: `tf.lite.TFLiteConverter` turns the TensorFlow model into a `.tflite` flatbuffer.

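- Handles Unsupported Operations: adds `tf.lite.OpsSet.SELECT_TF_OPS` to `converter.target_spec.supported_ops` (next to `tf.lite.OpsSet.TFLITE_BUILTINS`), so ops without a built-in TFLite kernel run as regular TensorFlow ops. The runtime on the device must then include the select TensorFlow ops (Flex delegate).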

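- Reduces Model Size: sets `converter.optimizations = [tf.lite.Optimize.DEFAULT]`, which, without a representative dataset, applies dynamic-range quantization and stores weights as 8-bit integers for mobile/embedded deployment.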
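To put the size constraint in numbers, the sketch below estimates weight storage for the GPT-2 checkpoints. The parameter counts are the commonly quoted, rounded figures, and the 1-byte-per-weight case assumes that quantization stores essentially all weights as int8. Real `.tflite` files will differ somewhat, since some tensors stay in float and the graph adds overhead. `GPT2_PARAMS` and `estimate_size_mb` are hypothetical names used only for illustration.

```python
# Approximate (rounded) parameter counts of the public GPT-2 checkpoints.
GPT2_PARAMS = {
    "gpt2": 124_000_000,
    "gpt2-medium": 355_000_000,
    "gpt2-large": 774_000_000,
    "gpt2-xl": 1_558_000_000,
}

# Storage cost per weight for a few common dtypes.
BYTES_PER_WEIGHT = {"float32": 4, "float16": 2, "int8": 1}


def estimate_size_mb(num_params: int, dtype: str) -> float:
    """Rough weight-only size in megabytes (10**6 bytes); ignores graph overhead."""
    return num_params * BYTES_PER_WEIGHT[dtype] / 1e6


if __name__ == "__main__":
    for name, n in GPT2_PARAMS.items():
        fp32 = estimate_size_mb(n, "float32")
        int8 = estimate_size_mb(n, "int8")
        print(f"{name:12s} float32 ~{fp32:,.0f} MB   int8 ~{int8:,.0f} MB")
```

For `gpt2` this gives roughly 496 MB at float32 versus about 124 MB with int8 weights, which is why quantization and choosing a smaller GPT-2 variant matter for mobile targets.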

In short, GPT-2 TFLite conversion fails mainly because of unsupported operations and size constraints. Enabling select TensorFlow ops, applying optimizations such as quantization, and reducing model complexity (for example, by using a smaller GPT-2 variant) can resolve these issues.
