Model hallucinations occur when a generative AI model outputs information that is inaccurate or fabricated but presented as fact. To mitigate them in domain-specific applications, you can combine retrieval-augmented generation (RAG), confidence scoring, and reinforcement learning from human feedback (RLHF).

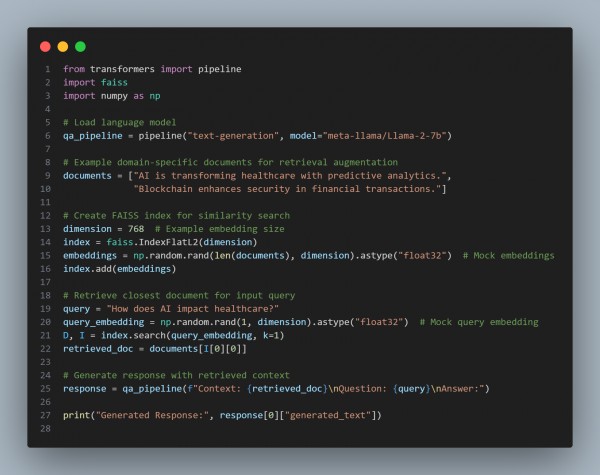

Here is the code snippet you can refer to:

The code above uses the following key techniques:

- Retrieval-Augmented Generation (RAG): Grounds responses in a trusted knowledge base to improve factual accuracy.

- Confidence Scoring: Applies probability thresholds to filter out uncertain outputs.

- Human-in-the-Loop (RLHF): Fine-tunes the model using feedback from domain experts to improve accuracy.

- Fact-Checking with External APIs: Cross-verifies generated text against trusted sources.

- Selective Response Mechanism: Declines to answer when confidence is low, reducing the risk of misinformation.
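As a rough illustration of how retrieval, confidence scoring, a grounding check, and selective response can fit together, here is a minimal, standard-library-only Python sketch. It is not the code from the snippet above, and every piece of it is a hypothetical assumption: the toy `KNOWLEDGE_BASE`, a Jaccard word-overlap `retrieve` standing in for embedding-based vector search, a word-overlap `grounding_score` standing in for an external fact-checking API, token log-probabilities assumed to be returned by the generator, and the 0.7 / 0.5 thresholds chosen arbitrarily. RLHF is a training-time technique and is not shown.

```python
import math
import re
from typing import List, Sequence, Set

# Hypothetical toy knowledge base; a real system would use a vetted domain corpus.
KNOWLEDGE_BASE = [
    "Aspirin is a nonsteroidal anti-inflammatory drug.",
    "Metformin is a first-line medication for type 2 diabetes.",
    "Insulin is a hormone produced by the pancreas.",
]

ABSTAIN_MESSAGE = "I'm not confident enough to answer that."


def tokenize(text: str) -> Set[str]:
    """Lowercased alphanumeric word set; a crude stand-in for a real tokenizer."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: Sequence[str], k: int = 1) -> List[str]:
    """RAG retrieval step: rank documents by Jaccard overlap with the query.

    A toy substitute for embedding-based vector search.
    """
    q = tokenize(query)

    def score(doc: str) -> float:
        d = tokenize(doc)
        union = q | d
        return len(q & d) / len(union) if union else 0.0

    return sorted(docs, key=score, reverse=True)[:k]


def sequence_confidence(token_logprobs: Sequence[float]) -> float:
    """Confidence score: geometric-mean token probability, exp(mean(log p))."""
    if not token_logprobs:
        return 0.0
    return math.exp(sum(token_logprobs) / len(token_logprobs))


def grounding_score(answer: str, context: Sequence[str]) -> float:
    """Crude fact check: share of answer tokens that appear in the retrieved context."""
    answer_tokens = tokenize(answer)
    context_tokens = set().union(*(tokenize(doc) for doc in context))
    if not answer_tokens:
        return 0.0
    return len(answer_tokens & context_tokens) / len(answer_tokens)


def answer_or_abstain(
    answer: str,
    token_logprobs: Sequence[float],
    context: Sequence[str],
    min_confidence: float = 0.7,  # arbitrary illustrative threshold
    min_grounding: float = 0.5,  # arbitrary illustrative threshold
) -> str:
    """Selective response: return the answer only if it is confident and grounded."""
    if sequence_confidence(token_logprobs) < min_confidence:
        return ABSTAIN_MESSAGE
    if grounding_score(answer, context) < min_grounding:
        return ABSTAIN_MESSAGE
    return answer


if __name__ == "__main__":
    question = "What is metformin used for?"
    context = retrieve(question, KNOWLEDGE_BASE, k=1)
    # In practice the answer and its token log-probabilities would come from the
    # generator prompted with `context`; these values are made up for the demo.
    draft = "Metformin is a first-line medication for type 2 diabetes."
    print(answer_or_abstain(draft, [-0.05, -0.1, -0.2], context))
    print(answer_or_abstain("Metformin was discovered on Mars.", [-0.05, -0.1], context))
```

Run as a script, the first call returns the grounded draft unchanged. The second call has high token confidence but is rejected because only one of its five words (`metformin`) appears in the retrieved context, so the caller gets `ABSTAIN_MESSAGE` instead. Checking grounding separately from confidence matters because a model can be fluent and confident while still fabricating facts; in a real deployment the thresholds would likely be tuned on held-out domain data rather than fixed by hand.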

By combining these techniques, you can identify and mitigate model hallucinations in domain-specific generative applications.
