To reduce hallucinations in generative AI systems, you can follow these steps:

- Use Ground-Truth Data: Ensure the model is trained on high-quality, accurate, and well-labeled data.

- Use Regularization: Apply techniques such as a semantic loss term, alongside attention mechanisms, to encourage the model to focus on relevant features and facts.

- Incorporate External Knowledge: Include domain-specific knowledge (e.g., databases, ontologies) to anchor the model's outputs in reality.

- Temperature Control: Use a lower sampling temperature to reduce randomness, which makes the model less likely to generate irrelevant or fabricated content.

- Post-Processing Checks: Implement fact-checking mechanisms or post-processing rules to validate generated content.
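The temperature step in the list above can be illustrated with a minimal sketch in plain Python. It is not tied to any particular model or library: the function name `softmax_with_temperature` and the example logits are assumptions made for illustration. It shows how dividing the logits by a temperature below 1 concentrates probability on the highest-scoring token, which is why lower temperatures give less random output.

```python
import math

def softmax_with_temperature(logits, temperature=1.0):
    """Convert raw logits to probabilities, scaled by a sampling temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical logits for three candidate next tokens (illustrative values only)
logits = [2.0, 1.0, 0.1]

probs_default = softmax_with_temperature(logits, temperature=1.0)
probs_low = softmax_with_temperature(logits, temperature=0.3)

print("T=1.0:", [round(p, 3) for p in probs_default])
print("T=0.3:", [round(p, 3) for p in probs_low])
```

At the lower temperature the top token takes a larger share of the probability mass, so sampling picks unlikely continuations less often. This reduces randomness but does not by itself guarantee factual output.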

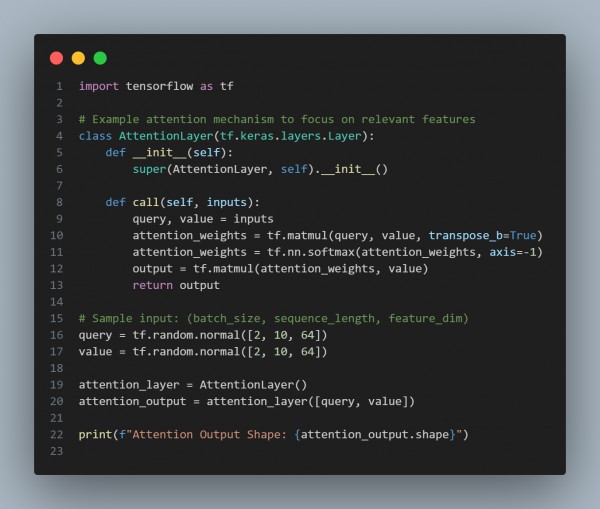

Here is the code snippet you can refer to:

The code above relies on the following key points:

- Ground-Truth Data: Training on accurate, well-labeled data reduces the risk of hallucinations.

- Regularization: Attention mechanisms help the model focus on relevant information.

- Fact-Checking: Post-processing checks can catch inaccuracies in generated responses before they reach the user.
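As a rough illustration of the fact-checking point, here is a minimal sketch of a post-processing check against a trusted knowledge source. Everything in it is an assumption for illustration: the `KNOWLEDGE_BASE` dictionary, the `check_claims` function, and the idea that claims have already been extracted from the generated text as `(subject, value)` pairs by some upstream step. A real system would likely query a database or retrieval service instead.

```python
# Hypothetical knowledge base mapping a subject to its trusted value.
KNOWLEDGE_BASE = {
    "capital of France": "Paris",
    "chemical symbol for gold": "Au",
}

def check_claims(claims, knowledge_base):
    """Classify each (subject, value) claim as supported, contradicted, or unverified."""
    report = {"supported": [], "contradicted": [], "unverified": []}
    for subject, value in claims:
        trusted = knowledge_base.get(subject)
        if trusted is None:
            # No trusted entry exists, so the claim cannot be confirmed or refuted
            report["unverified"].append((subject, value))
        elif trusted.strip().lower() == value.strip().lower():
            report["supported"].append((subject, value))
        else:
            report["contradicted"].append((subject, value))
    return report

# Claims assumed to have been extracted from a generated answer (illustrative)
claims = [
    ("capital of France", "Paris"),
    ("capital of France", "Lyon"),
    ("capital of Atlantis", "Poseidonis"),
]

report = check_claims(claims, KNOWLEDGE_BASE)
for status, items in report.items():
    print(status, items)
```

Contradicted claims can be blocked or corrected, while unverified ones can be flagged for review rather than silently passed through.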

Together, these steps make the outputs more likely to be factually accurate and reduce the likelihood of hallucinations.
