You can address the issue of the generator not learning during GAN training by following the approaches given below:

- Improve Loss Functions: Use a stable loss like Wasserstein Loss.

- Balance Training: Ensure the discriminator doesn't overpower the generator.

- Learning Rate Adjustments: Use separate learning rates for the generator and discriminator.

- Gradient Clipping: Cap the gradient norm during backpropagation to prevent exploding gradients.

- Label Smoothing: Soften the discriminator's target labels (for example, 0.9 instead of 1.0 for real samples) so it does not become overconfident.
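As a rough illustration of the last two points, here is a minimal PyTorch sketch of one discriminator update that uses one-sided label smoothing and gradient-norm clipping. The toy network `D`, the helper `discriminator_step`, the random stand-in data, and the values `smooth=0.9` and `max_norm=1.0` are all assumptions made for this example, not settings from the original answer.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical toy discriminator; layer sizes are placeholders.
D = nn.Sequential(nn.Linear(2, 16), nn.LeakyReLU(0.2), nn.Linear(16, 1))
opt_d = torch.optim.Adam(D.parameters(), lr=2e-4, betas=(0.5, 0.999))
bce = nn.BCEWithLogitsLoss()

def discriminator_step(real, fake, smooth=0.9, max_norm=1.0):
    """One discriminator update with label smoothing and gradient clipping."""
    # One-sided label smoothing: real targets are 0.9 instead of 1.0.
    real_targets = torch.full((real.size(0), 1), smooth)
    fake_targets = torch.zeros(fake.size(0), 1)

    loss = bce(D(real), real_targets) + bce(D(fake.detach()), fake_targets)

    opt_d.zero_grad()
    loss.backward()
    # Rescale gradients so their total norm is at most max_norm.
    # The return value is the total norm measured before clipping.
    grad_norm = torch.nn.utils.clip_grad_norm_(D.parameters(), max_norm)
    opt_d.step()
    return loss.item(), float(grad_norm)

# Stand-in batches: "real" points shifted away from the "fake" ones.
real = torch.randn(8, 2) + 3.0
fake = torch.randn(8, 2)
loss, grad_norm = discriminator_step(real, fake)
print(f"loss={loss:.4f}, grad norm before clipping={grad_norm:.4f}")
```

Only the real labels are smoothed here; the fake labels stay at 0, which is the commonly used one-sided variant.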

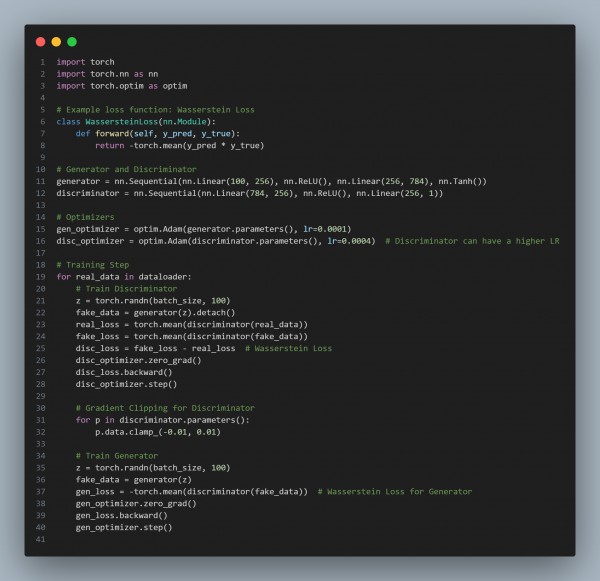

The code shown above uses the following strategies:

- Wasserstein Loss: Stabilizes training by avoiding vanishing gradients.

- Separate Learning Rates: The discriminator is given a higher learning rate than the generator, so it learns faster.

- Gradient Clipping: Clamps the discriminator's weights to a fixed range (weight clipping, in WGAN terms), which keeps them bounded and stabilizes training.
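For readers who want something they can run as text, the three strategies listed above can be sketched in PyTorch roughly as follows. This is a minimal sketch and not the original code: the toy networks `G` and `C`, the synthetic `sample_real` data, and the values for the learning rates, `clip_value`, and `n_critic` are all assumptions chosen for illustration.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

latent_dim, data_dim = 8, 2  # placeholder sizes

# Hypothetical toy generator and critic (the WGAN name for the discriminator).
G = nn.Sequential(nn.Linear(latent_dim, 32), nn.ReLU(), nn.Linear(32, data_dim))
C = nn.Sequential(nn.Linear(data_dim, 32), nn.LeakyReLU(0.2), nn.Linear(32, 1))

# Separate learning rates: the critic learns faster (values are assumptions).
opt_g = torch.optim.RMSprop(G.parameters(), lr=5e-5)
opt_c = torch.optim.RMSprop(C.parameters(), lr=2e-4)

clip_value = 0.01  # bound on each critic weight
n_critic = 5       # critic updates per generator update

def sample_real(n):
    # Stand-in dataset: a 2-D Gaussian centred at (2, 2).
    return torch.randn(n, data_dim) * 0.5 + 2.0

def train_step(batch_size=64):
    # Critic updates: minimise C(fake) - C(real).
    for _ in range(n_critic):
        real = sample_real(batch_size)
        fake = G(torch.randn(batch_size, latent_dim)).detach()
        loss_c = C(fake).mean() - C(real).mean()
        opt_c.zero_grad()
        loss_c.backward()
        opt_c.step()
        # Weight clipping keeps every critic parameter in [-clip_value, clip_value].
        with torch.no_grad():
            for p in C.parameters():
                p.clamp_(-clip_value, clip_value)

    # Generator update: maximise C(G(z)), i.e. minimise -C(G(z)).
    fake = G(torch.randn(batch_size, latent_dim))
    loss_g = -C(fake).mean()
    opt_g.zero_grad()
    loss_g.backward()
    opt_g.step()
    return loss_c.item(), loss_g.item()

for step in range(20):
    loss_c, loss_g = train_step()
print(f"critic loss={loss_c:.4f}, generator loss={loss_g:.4f}")
```

Note that the critic ends in a plain linear layer with no sigmoid, since the Wasserstein loss works on unbounded scores rather than probabilities.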

Hence, by referring to the above, you can address the issue of the generator not learning during GAN training.
