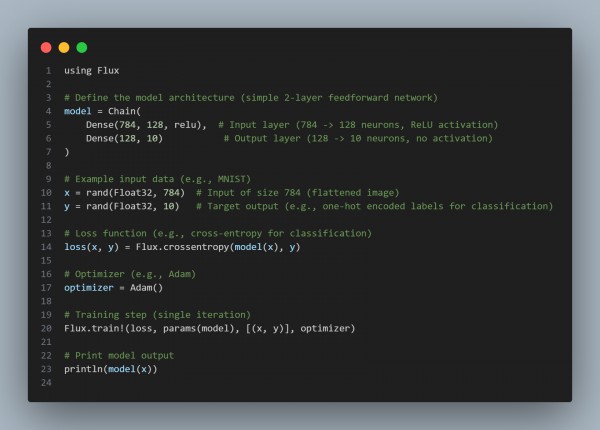

To implement a basic feedforward neural network in Julia using Flux.jl, Here is the code snippet you can refer to:

The code above covers the following steps:

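- Define the model: Use Chain to stack layers in sequence. Dense is a fully connected layer defined by its input size, output size, and activation function.

- Define the loss: Use a loss function such as Flux.crossentropy for classification tasks. It compares the model's predicted class probabilities with one-hot encoded target labels.

- Choose an optimizer: Use an optimizer such as Adam, or Descent for plain stochastic gradient descent, to update the parameters during training.

- Train: Use Flux.train!() to loop over the input data and target labels, compute the loss, and update the model's parameters.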
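Since the original snippet is only available as an image, here is a minimal sketch of the four steps, assuming a recent Flux.jl release (0.14 or later) that supports the `in => out` Dense syntax and the explicit `Flux.setup` / `Flux.train!(loss, model, data, opt_state)` API. The random data, the hidden-layer size of 128, the learning rate, and the number of epochs are illustrative assumptions, not values from the original answer.

```julia
using Flux
using Random

Random.seed!(42)

# Hypothetical data: 256 flattened 28×28 "images" (784 features each) with
# random labels 0–9. Replace with a real dataset such as MNIST.
x_train = rand(Float32, 784, 256)
labels = rand(0:9, 256)
y_train = Flux.onehotbatch(labels, 0:9)   # 10×256 one-hot matrix

# Define the model: two fully connected layers followed by softmax.
model = Chain(
    Dense(784 => 128, relu),
    Dense(128 => 10),
    softmax,
)

# Loss: cross-entropy between predicted probabilities and one-hot targets.
loss(m, x, y) = Flux.crossentropy(m(x), y)

# Optimizer: Adam with learning rate 1e-3, set up as explicit optimizer state.
opt_state = Flux.setup(Adam(1e-3), model)

# Mini-batches of (x, y) pairs, reshuffled every epoch.
loader = Flux.DataLoader((x_train, y_train), batchsize = 32, shuffle = true)

loss_before = loss(model, x_train, y_train)

# Training: for each batch, train! computes the gradient of loss(model, x, y)
# and updates the model's parameters in place.
for epoch in 1:10
    Flux.train!(loss, model, loader, opt_state)
end

loss_after = loss(model, x_train, y_train)
println("loss before: ", loss_before, ", after: ", loss_after)
```

Keeping softmax as the last layer matches the use of Flux.crossentropy. A common alternative is to drop softmax from the Chain and use Flux.logitcrossentropy, which is more numerically stable.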

This is a simple setup for a feedforward neural network for tasks such as image classification. You can extend it by adding more layers, dropout, and so on.
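As a sketch of such an extension, the model below adds a second hidden layer and Dropout between the hidden layers. The layer sizes and the dropout rate of 0.5 are illustrative assumptions. In Flux, Dropout is active by default only inside gradient computations (for example during Flux.train!), and Flux.testmode! switches it off explicitly for evaluation.

```julia
using Flux

# A deeper variant of the classifier with Dropout between hidden layers.
# Sizes and dropout rates are illustrative, not taken from the original answer.
model = Chain(
    Dense(784 => 256, relu),
    Dropout(0.5),
    Dense(256 => 128, relu),
    Dropout(0.5),
    Dense(128 => 10),
    softmax,
)

x = rand(Float32, 784, 8)   # hypothetical batch of 8 flattened inputs

# Disable dropout explicitly so evaluation is deterministic.
Flux.testmode!(model)
probs = model(x)            # 10×8 matrix of class probabilities
```

This model plugs into the same loss, optimizer, and Flux.train! loop as before. If you called Flux.testmode! on it, call Flux.trainmode!(model) before resuming training so dropout is applied again.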
