Java is a mainstay of enterprise software development, and Scala is one of the most widely used languages in big-data development. Combining the two can yield a powerful result. In this Spark Java tutorial, we shall work with Spark programs in a Java environment.

In simple terms, Spark-Java is a combined programming approach to big-data problems. Spark itself is written mostly in Scala, and Scala compiles to bytecode that runs on the Java Virtual Machine (JVM), so Spark applications run wherever Java runs. Spark provides APIs for several programming languages, including Scala, Java, Python and R. Scala remains one of the most prominent languages for writing Spark applications.

Most enterprise software developers are comfortable working with Java and seldom choose Scala or similar languages. Spark-Java is an approach that lets those developers run Scala-based Spark programs and applications in a familiar Java environment with ease.

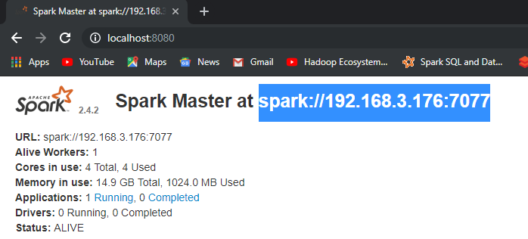

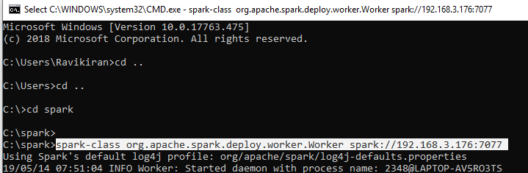

Now that we have a brief understanding of Spark Java, let us move on to the next stage: setting up the environment for Spark Java. I have lined up the procedure as a series of steps.

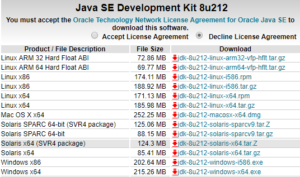

Step 1:

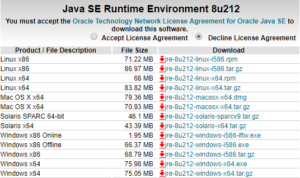

Step 2:

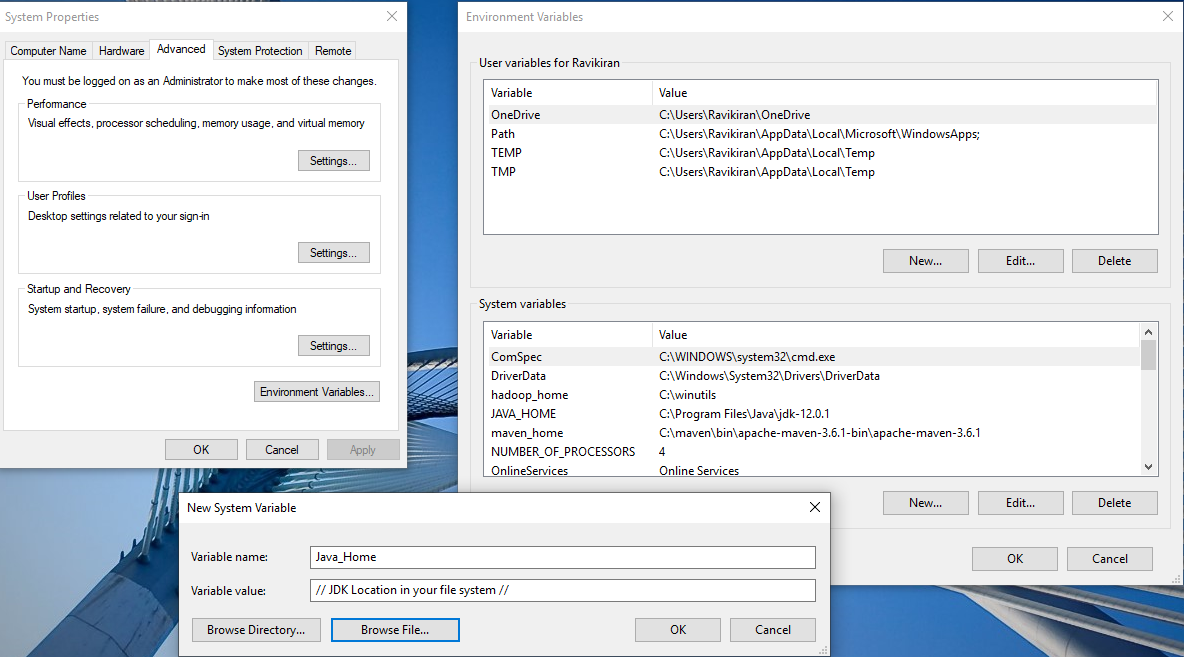

Step 3:

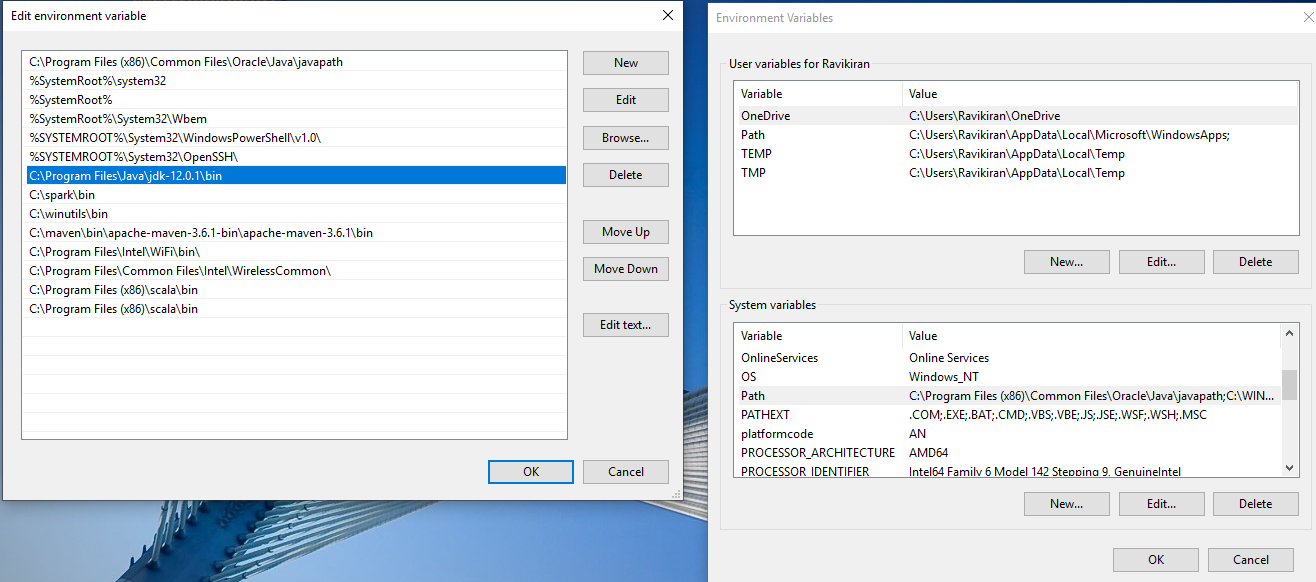

Step 4:

Step 5:

Step 6:

Step 7:

Now you are set with all the requirements to run Apache Spark on Java. Let us try an example of a Spark program in Java.

Examples in Spark-Java

Before we actually execute a Spark example program in a Java environment, we need to meet a few prerequisites, which I'll list below as steps for a better understanding of the procedure.

Step 1:

Step 2:

Step 3:

Step 4:

Step 5:

    <project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
      <modelVersion>4.0.0</modelVersion>
      <groupId>Edureka</groupId>
      <artifactId>ScalaExample</artifactId>
      <version>0.0.1-SNAPSHOT</version>
      <dependencies>
        <!-- https://mvnrepository.com/artifact/org.apache.spark/spark-core -->
        <dependency>
          <groupId>org.apache.spark</groupId>
          <artifactId>spark-core_2.12</artifactId>
          <version>2.4.2</version>
        </dependency>
        <!-- spark-sql provides SparkSession, which the example program imports -->
        <dependency>
          <groupId>org.apache.spark</groupId>
          <artifactId>spark-sql_2.12</artifactId>
          <version>2.4.2</version>
        </dependency>
      </dependencies>
    </project>

Step 6:

    package ScalaExample

    import org.apache.spark.sql.SparkSession

    object EdurekaApp {
      def main(args: Array[String]): Unit = {
        val logFile = "C:/spark/README.md" // Should be some file on your system

        // A single SparkSession is enough; it creates the underlying SparkContext itself.
        val spark = SparkSession.builder
          .appName("EdurekaApp")
          .master("local[*]") // run locally, using all available cores
          .getOrCreate()

        // Read the file as a Dataset[String], one element per line, and cache it
        // because it is scanned twice below.
        val logData = spark.read.textFile(logFile).cache()

        val numAs = logData.filter(line => line.contains("a")).count()
        val numBs = logData.filter(line => line.contains("b")).count()

        println(s"Lines with a: $numAs, Lines with b: $numBs")

        spark.stop()
      }
    }

Output:

Lines with a: 62, Lines with b: 31
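For readers who think in Java first, the filter-and-count logic of the program above can be mirrored with plain Java streams. This is only a sketch of what the job computes, not Spark code: it runs on an in-memory list inside a single JVM, and the class name `LineCountSketch`, the method `countContaining` and the sample lines are all made up for illustration.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical helper, not part of Spark: mirrors logData.filter(...).count()
// on a local list instead of a distributed Dataset.
class LineCountSketch {

    // Counts the lines that contain the given substring.
    static long countContaining(List<String> lines, String needle) {
        return lines.stream()
                .filter(line -> line.contains(needle))
                .count();
    }

    public static void main(String[] args) {
        // Made-up sample input standing in for the README file.
        List<String> lines = Arrays.asList(
                "Apache Spark",
                "is a unified engine",
                "for big data");

        long numAs = countContaining(lines, "a");
        long numBs = countContaining(lines, "b");

        System.out.println("Lines with a: " + numAs + ", Lines with b: " + numBs);
    }
}
```

The shape is the same as in the Spark program: a predicate selects lines and a count reduces them. What Spark adds is that the same two steps are split across partitions and executors rather than run in one loop.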

Now that we have a brief understanding of Spark Java, let us move on to our use case on students' academic performance to learn Spark Java in more depth.

As in the previous example, let us set up the prerequisites first and then begin with the use case. The use case is about students' performance in examinations conducted on a few important subjects.

This is what our pom.xml and code look like. We shall then go through the operations on our use case one by one.

    <project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
      <modelVersion>4.0.0</modelVersion>
      <groupId>ScalaExample3</groupId>
      <artifactId>Edureka3</artifactId>
      <version>0.0.1-SNAPSHOT</version>
      <dependencies>
        <!-- https://mvnrepository.com/artifact/org.apache.spark/spark-core -->
        <dependency>
          <groupId>org.apache.spark</groupId>
          <artifactId>spark-core_2.12</artifactId>
          <version>2.4.3</version>
        </dependency>
        <!-- https://mvnrepository.com/artifact/org.apache.spark/spark-sql -->
        <dependency>
          <groupId>org.apache.spark</groupId>
          <artifactId>spark-sql_2.12</artifactId>
          <version>2.4.3</version>
        </dependency>
        <!-- The com.databricks spark-csv_2.11 dependency is left out: it is built for
             Scala 2.11, while the Spark artifacts above are built for Scala 2.12, and
             Spark 2.x already reads CSV files through spark-sql. -->
      </dependencies>
    </project>

The application code:

    package ScalaExample

    import org.apache.spark.sql.SparkSession
    import org.apache.spark.sql.types.{IntegerType, StringType, StructField, StructType}

    object EdurekaApp {
      def main(args: Array[String]): Unit = {
        // A single SparkSession replaces the separate SparkConf, SparkContext and SQLContext.
        val spark = SparkSession.builder
          .appName("EdurekaApp3")
          .master("local[*]")
          .getOrCreate()
        val sqlContext = spark.sqlContext

        // Explicit schema: five string columns followed by three integer scores.
        val customizedSchema = StructType(Array(
          StructField("gender", StringType, true),
          StructField("race", StringType, true),
          StructField("parentalLevelOfEducation", StringType, true),
          StructField("lunch", StringType, true),
          StructField("testPreparationCourse", StringType, true),
          StructField("mathScore", IntegerType, true),
          StructField("readingScore", IntegerType, true),
          StructField("writingScore", IntegerType, true)))

        val pathToFile = "C:/Users/Ravikiran/Downloads/students-performance-in-exams/StudentsPerformance.csv"

        // Spark 2.x has a built-in CSV reader, so no external package is needed.
        val DF = sqlContext.read.format("csv").option("header", "true").schema(customizedSchema).load(pathToFile)

        println("We are starting from here...!")

        // Cache the DataFrame because every query below scans it again.
        DF.cache()
        DF.rdd.foreach(println)
        DF.printSchema()

        // Register the DataFrame as a view so that it can be queried with SQL.
        DF.createOrReplaceTempView("Student")

        sqlContext.sql("SELECT * FROM Student").show()
        sqlContext.sql("SELECT gender, race, parentalLevelOfEducation, mathScore FROM Student WHERE mathScore > 75").show()
        sqlContext.sql("SELECT race, count(race) FROM Student GROUP BY race").show()
        sqlContext.sql("SELECT gender, race, parentalLevelOfEducation, mathScore, readingScore FROM Student").filter("readingScore>90").show()
        sqlContext.sql("SELECT race, parentalLevelOfEducation FROM Student").distinct.show()
        sqlContext.sql("SELECT gender, race, parentalLevelOfEducation, mathScore, readingScore FROM Student WHERE mathScore> 75 and readingScore>90").show()
        sqlContext.sql("SELECT gender, race, parentalLevelOfEducation, mathScore, readingScore FROM Student").dropDuplicates().show()

        println("We have finished here...!")

        spark.stop()
      }
    }

The output of the Spark SQL statements executed above is as follows:

DF.rdd.foreach(println)

DF.printSchema()

sqlContext.sql("SELECT * FROM Student").show()

sqlContext.sql("SELECT gender, race, parentalLevelOfEducation, mathScore FROM Student WHERE mathScore > 75").show()

sqlContext.sql("SELECT race, count(race) FROM Student GROUP BY race").show()

sqlContext.sql("SELECT gender, race, parentalLevelOfEducation, mathScore, readingScore FROM Student").filter("readingScore>90").show()

sqlContext.sql("SELECT race, parentalLevelOfEducation FROM Student").distinct.show()

sqlContext.sql("SELECT gender, race, parentalLevelOfEducation, mathScore, readingScore FROM Student WHERE mathScore> 75 and readingScore>90").show()

sqlContext.sql("SELECT gender, race, parentalLevelOfEducation, mathScore, readingScore FROM Student").dropDuplicates().show()
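To make the intent of these queries concrete for Java developers, here is a minimal plain-Java sketch of three of them (the `WHERE mathScore > 75` filter, the combined math and reading filter, and the `GROUP BY race` count). It is not Spark code and does not read the CSV file: the class `StudentQuerySketch`, its `Student` type, its method names and the five sample rows are all hypothetical and exist only for illustration.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

// Hypothetical in-memory mirror of a few of the Spark SQL queries above.
class StudentQuerySketch {

    // Minimal stand-in for one row; only the columns used below.
    static final class Student {
        final String gender;
        final String race;
        final int mathScore;
        final int readingScore;

        Student(String gender, String race, int mathScore, int readingScore) {
            this.gender = gender;
            this.race = race;
            this.mathScore = mathScore;
            this.readingScore = readingScore;
        }
    }

    // Mirrors: SELECT ... FROM Student WHERE mathScore > <threshold>
    static List<Student> mathAbove(List<Student> students, int threshold) {
        return students.stream()
                .filter(s -> s.mathScore > threshold)
                .collect(Collectors.toList());
    }

    // Mirrors: SELECT ... FROM Student WHERE mathScore > 75 and readingScore > 90
    static List<Student> highScorers(List<Student> students) {
        return students.stream()
                .filter(s -> s.mathScore > 75 && s.readingScore > 90)
                .collect(Collectors.toList());
    }

    // Mirrors: SELECT race, count(race) FROM Student GROUP BY race
    // A TreeMap keeps the groups sorted by name for stable printing.
    static Map<String, Long> countByRace(List<Student> students) {
        return students.stream()
                .collect(Collectors.groupingBy(
                        (Student s) -> s.race, TreeMap::new, Collectors.counting()));
    }

    // Made-up rows; they are not taken from StudentsPerformance.csv.
    static List<Student> sampleRows() {
        return Arrays.asList(
                new Student("female", "group B", 72, 72),
                new Student("female", "group C", 90, 95),
                new Student("male", "group A", 47, 57),
                new Student("male", "group C", 76, 78),
                new Student("female", "group B", 88, 95));
    }

    public static void main(String[] args) {
        List<Student> students = sampleRows();
        System.out.println("mathScore > 75: " + mathAbove(students, 75).size());
        System.out.println("mathScore > 75 and readingScore > 90: " + highScorers(students).size());
        System.out.println("count by race: " + countByRace(students));
    }
}
```

Each method corresponds to one SQL clause: `filter` plays the role of `WHERE`, and `groupingBy` with `counting` plays the role of `GROUP BY` with `count`. Spark SQL expresses the same operations declaratively and plans them across the cluster.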

So, with this, we come to the end of this Spark Java tutorial. I hope it has shed some light on Spark, Java and Eclipse, their features, and the various types of operations that can be performed using them.
