
Compared to large language models (LLMs), which demand more memory, run more slowly, and are harder to customize, small language models (SLMs) can be a more economical, efficient, and compact AI option for users with limited resources.

With fewer parameters (usually fewer than 10 billion), SLMs generally have lower computational and energy costs. Because they are trained on smaller, more focused datasets, they can strike a better balance between task-specific performance and resource efficiency.
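To put rough numbers on that difference, the sketch below estimates how much memory a model’s weights alone occupy at common numeric precisions. It assumes memory scales as parameter count × bytes per parameter and ignores activations, the KV cache, and runtime overhead, so real requirements are higher; the model sizes in the loop are illustrative.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate memory in GB (1 GB = 1e9 bytes) for the weights alone.

    Ignores activations, the KV cache, and framework overhead.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for name, params in [("3.8B model", 3.8e9), ("7B model", 7e9), ("70B model", 70e9)]:
        sizes = ", ".join(f"{p}: {weight_memory_gb(params, p):.1f} GB" for p in BYTES_PER_PARAM)
        print(f"{name} -> {sizes}")
```

Under these assumptions, a 3.8-billion-parameter model needs about 7.6 GB for its weights at 16-bit precision, while a 70-billion-parameter model needs about 140 GB, which is one reason smaller models are practical on consumer hardware.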

The number of parameters in large language models (LLMs) has grown dramatically in recent years, from hundreds of millions to, reportedly, over a trillion in successors such as GPT-4. This growth raises the question of whether larger is always better for enterprise applications.

The answer increasingly points to the accuracy and effectiveness of small language models (SLMs). SLMs, which are customized for particular business domains such as IT or customer support, provide focused, actionable insights and are a more sensible choice for businesses that prioritize real-world value over computational power.

Within the broader field of artificial intelligence, small language models (SLMs) are a specialized subset designed for natural language processing (NLP). They are distinguished by their compact size and modest compute requirements. Unlike their large language model (LLM) counterparts, SLMs are designed to perform particular language tasks efficiently and with a high degree of specificity.

Additionally, the emphasis on data security in how SLMs are built and deployed makes them especially attractive to businesses that care about model evaluation outcomes and accuracy as well as safeguarding private data and protecting sensitive information.

Now that we have covered what small language models are, let’s examine how they work.

Many SLMs are built on top of LLMs. Like large language models, small language models use a neural network-based architecture called the transformer. In natural language processing (NLP), transformers have become essential building blocks and serve as the foundation for models such as the generative pre-trained transformer (GPT).

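At a high level, a transformer converts input tokens into numerical vectors called embeddings, uses a self-attention mechanism to weigh how relevant each token is to every other token, and passes the result through stacked feed-forward layers. Encoders build representations of the input, while decoders generate output one token at a time; decoder-only models such as GPT condition each new token on the tokens that came before it.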
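Self-attention is the operation at the heart of each transformer layer. The sketch below implements scaled dot-product attention with a causal mask, the variant used in decoder-only models such as GPT, in plain Python so every step is visible. It assumes the query, key, and value matrices are already given; real models compute them with learned projections and add multiple heads, residual connections, and feed-forward layers, all of which are omitted here.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m: Matrix) -> Matrix:
    return [list(row) for row in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Scaled dot-product attention in which token i may attend only to tokens 0..i."""
    d_k = len(q[0])
    scores = matmul(q, transpose(k))
    weights = []
    for i, row in enumerate(scores):
        # Scale by sqrt(d_k) and mask future positions with -inf before the softmax.
        masked = [s / math.sqrt(d_k) if j <= i else -math.inf for j, s in enumerate(row)]
        weights.append(softmax(masked))
    # Each output row is a weighted average of the value vectors.
    return matmul(weights, v)


if __name__ == "__main__":
    x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy 2-d token vectors
    for row in causal_self_attention(x, x, x):
        print([round(val, 3) for val in row])
```

Because of the mask, the first token can only attend to itself, so its output equals its own value vector; later tokens blend information from everything before them.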

Model compression

A leaner model is constructed from a larger one using model compression techniques. Reducing a model’s size without sacrificing much of its accuracy is known as compression. Here are a few popular methods for model compression:

Pruning

Pruning removes less important, redundant, or superfluous parameters from a neural network. Parameters that are typically pruned include the numerical weights on the connections between neurons (which are set to 0), whole neurons, or entire layers.

To compensate for any accuracy loss, pruned models frequently require fine-tuning.
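A common and simple form of weight pruning is magnitude pruning: the weights with the smallest absolute values are assumed to matter least and are set to 0. The sketch below applies it to a flat list of weights; the weight values and sparsity level are arbitrary, and real toolkits usually prune layer by layer and then fine-tune, as described above.

```python
from typing import List


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the smallest-magnitude fraction `sparsity` of weights (unstructured pruning)."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    # Indices sorted from smallest to largest absolute weight.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_prune = set(order[:n_prune])
    return [0.0 if i in to_prune else w for i, w in enumerate(weights)]


if __name__ == "__main__":
    w = [0.8, -0.05, 0.3, -0.6, 0.01, 0.2]
    print(magnitude_prune(w, 0.5))  # → [0.8, 0.0, 0.3, -0.6, 0.0, 0.0]
```

The pruned list has the same shape as the original, so the savings only materialize when the runtime stores or multiplies sparse weights efficiently.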

Quantization

Quantization converts high-precision data into lower-precision data. For example, model weights and activation values (the outputs that neurons produce) can be represented as 8-bit integers rather than 32-bit floating-point numbers. Quantization can speed up inference and reduce the computational load.

Quantization can be done after training (post-training quantization, or PTQ) or during model training (quantization-aware training, or QAT). Although QAT can generate a more accurate model, PTQ doesn’t need as much processing power or training data.
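Post-training quantization in its simplest form looks like the sketch below: symmetric, per-tensor int8 quantization of already-trained weights, where a single scale maps the largest magnitude to 127. Production toolkits typically add per-channel scales, calibration data for activations, and zero points, so treat this as an illustration of the idea rather than a drop-in implementation.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor post-training quantization to int8 codes in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    # One scale for the whole tensor: the largest magnitude maps to 127.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes: List[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate float values."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.0, 1.0]
    codes, scale = quantize_int8(weights)
    restored = dequantize(codes, scale)
    print(codes)  # integers that fit in 1 byte each instead of 4
    print(max(abs(w - r) for w, r in zip(weights, restored)))  # rounding error, at most scale / 2
```

The trade-off is visible directly: each weight now needs a quarter of the storage, at the cost of a rounding error bounded by half the scale.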

Low-rank factorization

Low-rank factorization approximates a large weight matrix as the product of two smaller, lower-rank matrices. This more compact approximation can simplify complex matrix operations, reduce the number of computations, and produce fewer parameters.

Low-rank factorization, however, can be more challenging to apply and computationally demanding. Similar to pruning, any accuracy loss in the factorized network will need to be recovered through fine-tuning.
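The arithmetic behind the savings can be sketched directly. Assuming a weight matrix W (m × n) is replaced by factors A (m × r) and B (r × n), the layer stores r(m + n) numbers instead of mn and computes y = A(Bx) instead of y = Wx. In the toy example below, the matrix is exactly rank 1 by construction, so both paths agree; for a trained layer, the factors would come from an approximation such as a truncated singular value decomposition, and the output would only be approximately equal, which is why fine-tuning is usually needed afterward.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def matvec(m: Matrix, x: List[float]) -> List[float]:
    return [sum(w * v for w, v in zip(row, x)) for row in m]


def param_counts(m: int, n: int, r: int) -> Tuple[int, int]:
    """Parameters in a full m x n matrix vs. rank-r factors A (m x r) and B (r x n)."""
    return m * n, r * (m + n)


if __name__ == "__main__":
    # Hypothetical factors; W = A @ B is exactly rank 1 by construction.
    A = [[1.0], [2.0], [0.5], [-1.0]]  # 4 x 1
    B = [[3.0, 0.0, 1.0]]              # 1 x 3
    W = matmul(A, B)                   # 4 x 3
    x = [1.0, 2.0, 3.0]
    print(matvec(W, x))                # full layer: y = W x
    print(matvec(A, matvec(B, x)))     # factorized layer: y = A (B x), same result here
    print(param_counts(4096, 4096, 64))  # (16777216, 524288): 32x fewer parameters
```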

Knowledge distillation

Transferring the lessons learned from a trained “teacher model” to a “student model” is known as knowledge distillation. In addition to matching the teacher model’s predictions, the student model is trained to imitate the reasoning process that underlies it. Thus, the knowledge of a larger model is effectively “distilled” into a smaller one.
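One widely used way to implement this, following the soft-target formulation of Hinton et al. (2015), is to train the student on a blend of two losses: the KL divergence between the teacher’s and student’s temperature-softened output distributions, and ordinary cross-entropy on the true labels. The sketch below computes that loss for a single example in plain Python; the temperature and weighting values are arbitrary defaults rather than recommendations, and a real training loop would backpropagate this loss through the student.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Softmax with temperature; higher temperatures give softer distributions."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p: List[float], q: List[float]) -> float:
    """KL(p || q) for discrete distributions; terms with p_i = 0 contribute nothing."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(student_logits: List[float], teacher_logits: List[float],
                      true_label: int, temperature: float = 2.0, alpha: float = 0.5) -> float:
    """Blend of a soft-target KL term and cross-entropy on the true label."""
    soft_teacher = softmax(teacher_logits, temperature)
    soft_student = softmax(student_logits, temperature)
    # Multiplying by T^2 keeps the soft-target term on a comparable scale as T changes.
    soft_loss = kl_divergence(soft_teacher, soft_student) * temperature ** 2
    hard_loss = -math.log(softmax(student_logits)[true_label])
    return alpha * soft_loss + (1 - alpha) * hard_loss


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]  # hypothetical teacher logits for 3 classes
    print(distillation_loss([3.5, 1.2, 0.4], teacher, true_label=0))  # student close to teacher
    print(distillation_loss([0.5, 3.0, 1.0], teacher, true_label=0))  # student far off: larger loss
```

The soft targets carry more information than a single correct label, because they show how the teacher ranks the wrong answers as well.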

Next, we’ll look at some examples of small language models.

Microsoft, Mistral AI, and Meta are among the best-known companies building small language models. With its Phi-3 models, Microsoft set the standard and demonstrated that good results can be achieved even with limited resources.

Let’s now examine a few of the most well-known small language models.

Mistral: Customized efficiency

Mistral AI’s models include Mistral Small, Mistral 7B, and Mixtral 8x7B. Mixtral 8x7B uses a “mixture of experts” approach: for each token, it activates only a subset of its parameters, which keeps inference efficient. Because of this, these models can handle complicated tasks effectively even on modest hardware.
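The routing idea can be sketched in a few lines. In a sparse mixture-of-experts layer, a small router scores every expert for each token, but only the top-k experts actually run, and their outputs are combined using gate weights renormalized over the chosen experts; Mixtral 8x7B, for instance, routes each token to 2 of its 8 experts. In this toy version, the experts are plain Python functions and the router is a single linear scoring step, both hypothetical stand-ins for the feed-forward networks and learned gate of a real model.

```python
import math
from typing import Callable, List


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_layer(token: List[float], gate_weights: List[List[float]],
              experts: List[Callable[[List[float]], List[float]]], top_k: int = 2) -> List[float]:
    """Sparse mixture of experts: score every expert, but run only the top_k best-scoring ones."""
    # Router: one linear score per expert.
    scores = [sum(w * x for w, x in zip(row, token)) for row in gate_weights]
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Renormalize the gate over the chosen experts only.
    gates = softmax([scores[i] for i in chosen])
    output = [0.0] * len(token)
    for g, i in zip(gates, chosen):
        expert_out = experts[i](token)  # only the chosen experts do any work
        output = [o + g * e for o, e in zip(output, expert_out)]
    return output


if __name__ == "__main__":
    # Four toy experts that simply scale the input by 1, 2, 3, or 4.
    experts = [lambda x, s=s: [s * v for v in x] for s in (1.0, 2.0, 3.0, 4.0)]
    gate = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0], [-1.0, -1.0]]
    print(moe_layer([1.0, 0.5], gate, experts, top_k=2))  # only experts 0 and 1 run
```

The total parameter count includes every expert, but the compute per token depends only on top_k, which is what makes the approach efficient.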

Llama 3: Enhanced text comprehension

Deeper interactions are made possible by Meta’s Llama 3, which can comprehend twice as much text as its predecessor. It is seamlessly integrated across Meta’s platforms, increasing user access to AI insights, and leverages a larger dataset to enhance its capacity to handle complex tasks.

Phi-3: Small but mighty

Microsoft’s Phi-3-mini operates with just 3.8 billion parameters. It excels at creating marketing content, running chatbots for customer service, and summarizing intricate documents. It also satisfies Microsoft’s exacting requirements for inclusivity and privacy.

DeepSeek-Coder-V2: Coding companion

DeepSeek-Coder-V2, which has strong math and coding abilities, is like having a second developer on your device. It’s ideal for programmers who need a dependable assistant that can work independently.

MiniCPM-Llama3-V 2.5: Versatile and multilingual

MiniCPM-Llama3-V 2.5 performs exceptionally well in optical character recognition and is capable of handling multiple languages. It provides quick, effective service and protects your privacy, making it ideal for mobile devices.

OpenELM: Private and powerful

With 270 million to 3 billion parameters, Apple’s OpenELM models are designed to run directly on your device. Because they operate locally without relying on the cloud, they help keep your data private and your workflows efficient.

Gemma 2: Conversation expert

By balancing strong performance with a small size, Gemma 2 outperforms its predecessor. It excels at conversational AI, making it well suited to applications that need to process language quickly and accurately.

Advances in AI have also made it possible to combine the strengths of LLMs and SLMs:

Hybrid AI pattern: A hybrid AI model can run smaller models on-premises and call LLMs in the public cloud when responding to a prompt requires a larger corpus of data.

Intelligent routing: Intelligent routing distributes AI workloads more effectively. A routing module can be designed to receive queries, analyze them, and send each one to the most suitable model: small language models handle simpler requests, while large language models handle more complex ones.
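A routing module can start as a few simple rules. The sketch below is a hypothetical keyword-and-length heuristic: the model names "small-model" and "large-model", the hint phrases, and the 30-word cutoff are all placeholders rather than any product’s API. In practice, the router might itself be a small classifier or an SLM that scores query difficulty.

```python
from dataclasses import dataclass

# Hypothetical phrases suggesting that a request needs broader reasoning.
COMPLEX_HINTS = ("explain why", "compare", "analyze", "step by step", "write a report")


@dataclass
class Route:
    model: str   # placeholder model name
    reason: str  # why this route was chosen, useful for logging


def route_query(query: str, max_simple_words: int = 30) -> Route:
    """Send short, routine queries to the SLM and long or open-ended ones to the LLM."""
    text = query.lower()
    if len(text.split()) > max_simple_words:
        return Route("large-model", "query is long")
    if any(hint in text for hint in COMPLEX_HINTS):
        return Route("large-model", "query looks open-ended")
    return Route("small-model", "routine request")


if __name__ == "__main__":
    for q in ["What are your opening hours?",
              "Compare our Q3 churn with Q2 and explain why it changed"]:
        print(q, "->", route_query(q))
```

Defaulting to the small model keeps most traffic cheap, and recording the reason for each routing decision makes the rules easy to audit and tune later.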

Let’s examine the advantages and drawbacks of small language models.

1. Customized Accuracy and Efficiency

In contrast to their larger counterparts, SLMs are intended to fulfill more specialized, often niche, functions within an organization. This specificity enables a degree of accuracy and effectiveness that general-purpose LLMs find difficult to achieve. A domain-specific model designed for the legal sector, for example, is far better equipped to handle complex legal concepts and jargon than a generic LLM, producing outputs that are more accurate and relevant for legal professionals.

2. Cost-Effectiveness

The smaller size of an SLM translates directly into lower financial and computational costs. Because it requires significantly fewer resources to train, deploy, and maintain, an SLM is a feasible choice for smaller businesses or for particular divisions within larger organizations. This cost-effectiveness does not have to come at the expense of performance: within their domains, SLMs can match or even outperform larger models.

3. Improved Privacy and Security

One of the main benefits of small language models is the potential for improved security and privacy. Because they are smaller and more manageable, they can be deployed on-premises or in private cloud environments. This lowers the risk of data leaks and helps ensure that private information stays under the organization’s control, which makes small models especially attractive to sectors such as finance and healthcare that handle highly sensitive data.

4. Flexibility and Reduced Latency

For real-time applications, small language models provide an essential level of responsiveness and flexibility. Their smaller size reduces processing latency, making them well suited to AI customer support, real-time data analysis, and other applications where speed is crucial. Their flexibility also makes it simpler and faster to retrain and update them, which helps keep an SLM effective over time.

SLMs, like LLMs, still have to deal with the dangers of AI. Businesses wishing to incorporate small language models into their internal processes or use them commercially for particular applications should take this into account.

1. Bias: Smaller models may inherit bias from their larger counterparts, which can then surface in their outputs.

2. Reduced performance on complex tasks: Because SLMs are usually fine-tuned for specific tasks, they may perform less well on complex tasks that call for knowledge across a wide range of topics. The Phi-3 models, for instance, “perform less well on factual knowledge benchmarks because the smaller model size results in less capacity to retain facts,” according to Microsoft [9].

3. Limited generalization: Because they lack the extensive knowledge base of their larger counterparts, small language models may be better suited to specific language tasks.

4. Hallucinations: Like LLMs, SLMs can produce plausible-sounding but incorrect output, so validating their results is essential to ensure factual accuracy.

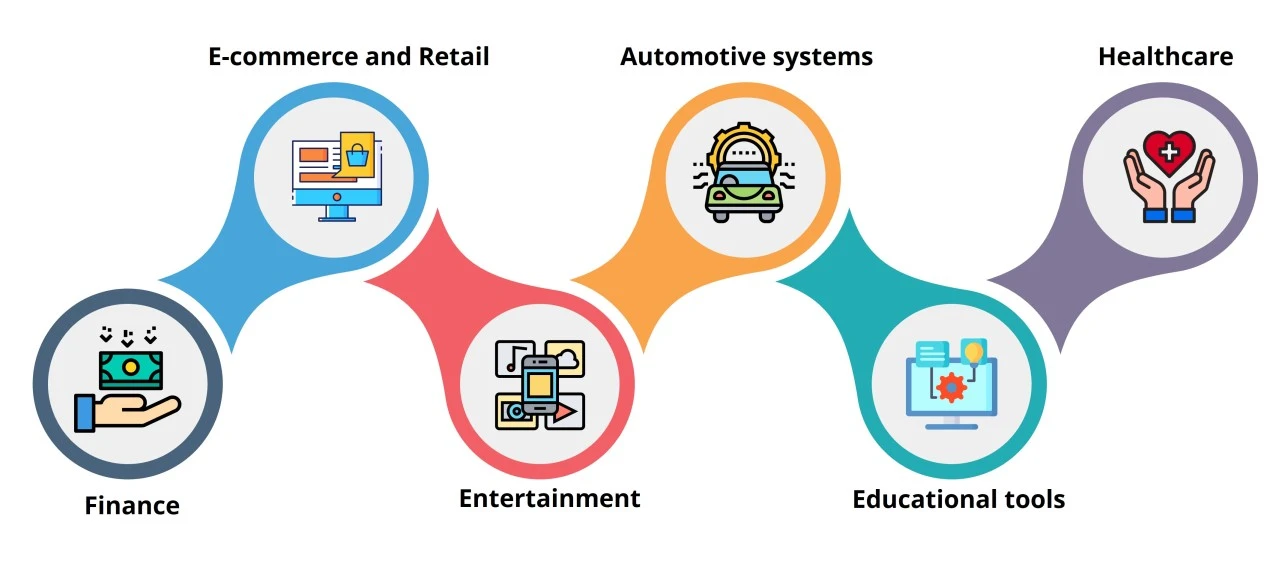

Now that you are aware of the advantages and disadvantages of small language models, we will discuss small language model use cases.

Businesses can modify SLMs to suit their unique requirements by fine-tuning them on domain-specific datasets. Because of their versatility, small language models can be used in a wide range of practical applications.

Chatbots: SLMs can power customer service chatbots that respond to inquiries quickly and in real time, thanks to their low latency and conversational AI capabilities. They can also serve as the foundation for agentic AI chatbots, which go beyond answering queries to carry out tasks on users’ behalf.

Summarizing content: Llama 3.2 1B and 3B models, for instance, can summarize conversations and generate action items such as calendar events on a smartphone [6]. Gemini Nano can also summarize conversation transcripts and audio recordings [11].

Generative AI: Text and software code can be completed and generated using compact models. For example, code can be generated, explained, and translated from a natural language prompt using the granite-3b-code-instruct and granite-8b-code-instruct models.

Language translation: Many small language models are multilingual and have been trained on languages other than English, so they can translate between languages quickly. Because they can understand context, they can produce near-exact translations that preserve the nuance and meaning of the source text.

Predictive maintenance: Lean models are small enough to be deployed directly on local edge devices such as sensors or Internet of Things (IoT) devices. Manufacturers can use SLMs to collect data from sensors mounted on machinery and equipment and analyze it in real time to anticipate maintenance needs.

Next, we’ll examine the main differences between SLMs and LLMs.

SLMs and LLMs follow the same principles of probabilistic machine learning in their architectural design, training, data generation, and model evaluation. The differences lie in the following areas.

Model complexity and size

The model size is the most obvious distinction between the SLM and LLM.

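LLMs such as GPT-4, which powers ChatGPT, reportedly have around 1.76 trillion parameters, although OpenAI has not officially disclosed the figure.

Open-source SLMs such as Mistral 7B have around seven billion parameters.

The models also differ in architectural detail. Mistral 7B is a decoder-only model that uses sliding window attention, in which each token attends only to a fixed number of preceding tokens, to process long sequences efficiently. Earlier GPT models are documented as decoder-only transformers using standard self-attention, while OpenAI has not published the architectural details of GPT-4.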
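Sliding window attention is easiest to see as a mask. In the sketch below, position i may attend only to itself and the previous window - 1 positions, instead of to every earlier position as in full causal attention, so the number of attended pairs grows with sequence length × window rather than with the square of the sequence length. The window of 3 is purely for display; production models use far larger windows.

```python
from typing import List


def sliding_window_mask(seq_len: int, window: int) -> List[List[bool]]:
    """mask[i][j] is True when token i may attend to token j.

    Causal (j <= i) and limited to the `window` most recent positions, including i itself.
    """
    return [[i - window < j <= i for j in range(seq_len)] for i in range(seq_len)]


def full_causal_mask(seq_len: int) -> List[List[bool]]:
    """Standard causal mask: every token sees all earlier tokens."""
    return [[j <= i for j in range(seq_len)] for i in range(seq_len)]


if __name__ == "__main__":
    for row in sliding_window_mask(6, 3):
        print("".join("x" if allowed else "." for allowed in row))
    # x.....
    # xx....
    # xxx...
    # .xxx..
    # ..xxx.
    # ...xxx
    print(sum(map(sum, sliding_window_mask(6, 3))), "vs", sum(map(sum, full_causal_mask(6))), "pairs")
```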

Knowledge of context and domain specificity

SLMs are typically trained on data from particular domains. They may lack broad contextual knowledge across many different fields, but they are likely to excel in their chosen domain.

An LLM, on the other hand, aims to emulate human intelligence more broadly. Because it is trained on much larger data sources, it is expected to perform better across domains than a domain-specific SLM.

As a result, LLMs are also more versatile and can be adapted, fine-tuned, and extended for downstream tasks such as programming.

Consumption of resources

Training an LLM is resource-intensive and requires large-scale cloud GPU computing resources. While Mistral 7B can run on a local computer with a good GPU, training a model like the one behind ChatGPT from scratch requires several thousand GPUs. Even so, training a 7B-parameter model still takes many GPU-hours across multiple GPUs.
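A common back-of-the-envelope rule from the scaling-law literature puts training compute at roughly 6 FLOPs per parameter per training token. The sketch below applies it; the one-trillion-token dataset and the 100 TFLOP/s of sustained throughput per GPU are illustrative assumptions, not figures for Mistral 7B or any other specific model.

```python
def training_flops(num_params: float, num_tokens: float) -> float:
    """Rule of thumb: about 6 FLOPs per parameter per training token (forward plus backward)."""
    return 6 * num_params * num_tokens


def gpu_hours(total_flops: float, flops_per_gpu_per_s: float) -> float:
    """Convert total FLOPs into GPU-hours at a given sustained throughput."""
    return total_flops / flops_per_gpu_per_s / 3600


if __name__ == "__main__":
    params = 7e9        # a 7B-parameter model
    tokens = 1e12       # assumed training set size (illustrative)
    sustained = 1e14    # assumed 100 TFLOP/s actually achieved per GPU
    flops = training_flops(params, tokens)
    print(f"{flops:.2e} FLOPs ~ {gpu_hours(flops, sustained):,.0f} GPU-hours")
```

Under these assumptions, the estimate comes to roughly 117,000 GPU-hours, so even 1,000 GPUs would need about five days, before accounting for restarts and inefficiencies.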

Bias

LLMs are frequently biased. This is partly because they are not always sufficiently refined and are trained on raw, publicly available data. Given where that training data comes from, it may misrepresent or underrepresent particular groups or concepts, or label them incorrectly.

Further complexity arises because language itself carries bias tied to variables such as dialect, location, and grammar rules. Another frequent problem is that the model architecture itself may unintentionally reinforce a bias.

Because an SLM trains on comparatively smaller, domain-specific datasets, it can carry a lower risk of bias than an LLM, although it may still inherit bias from larger models.

Speed of inference

Thanks to their smaller size, SLMs can run on users’ local computers and still generate output in a reasonable amount of time.

An LLM needs multiple parallel processing units to generate output, and inference tends to slow down as the number of concurrent users grows.

Sentiment analysis: In addition to processing and understanding language, SLMs are adept at sifting through and categorizing large volumes of text. As a result, they can analyze text to determine its sentiment, which helps businesses understand customer feedback.

Let’s examine how small language models are used in various industries.

1. Finance

Small language models (SLMs) are rapidly becoming essential tools in the financial industry for managing risk efficiently and streamlining operations:

Transaction classification: By automating the classification of invoice line items, SLMs speed up accurate data entry into bookkeeping systems.

Sentiment analysis: SLMs can identify subtle changes in management tone by closely examining earnings call transcripts, which offer important information for strategic decision-making.

Custom entity extraction: SLMs organize and transform unstructured bank statements into standardized data. This facilitates a more efficient financial reporting process and speeds up lending risk analysis.

2. E-commerce and retail

SLMs are changing the face of customer service in retail and e-commerce by offering quick and effective solutions:

Chatbot services: SLM-powered chatbots are quickly taking the lead in customer service due to their ability to provide prompt and precise responses, improve user interactions, and raise customer satisfaction levels.

Demand forecasting: SLMs generate accurate demand forecasts by examining past sales data, industry trends, and external variables. Making sure the right products are available at the right time allows retailers to maximize sales, minimize stockouts, and optimize inventory management.

3. Entertainment

The entertainment sector is experiencing radical change, and SLMs are essential to improving user engagement and reshaping creative processes.

Script generation: By producing preliminary drafts of animation scripts, SLMs support the creative process and boost productivity for content producers.

Dynamic dialogue: SLMs create dynamic conversation trees in open-world games based on player context, giving players a rich and engaging experience.

Content enrichment: SLMs use sophisticated language analysis to find hidden themes in movie subtitles, which enhances recommendation engines and links viewers to content that suits their interests.

Content curation: Media companies can use these SLMs to learn about audience preferences and improve their content strategies, from sentiment analysis to personalized content recommendations.

4. Automotive systems

SLMs are significantly advancing the automotive sector by enhancing user interactions and enabling intelligent navigation systems:

Navigation support: To increase overall travel efficiency, SLMs offer improved navigation support by incorporating real-time traffic updates and recommending the best routes for drivers.

Voice commands: SLM-powered in-car voice command systems allow drivers to send messages, make calls, and control music without taking their eyes off the road, making driving safer and more convenient.

5. Educational tools

SLMs are revolutionizing education by offering individualized and engaging learning opportunities.

Personalized learning: SLM-powered educational apps adapt to each student’s learning preferences, providing individualized guidance and support at their own pace.

Language learning: SLMs excel in language learning apps, offering users interactive conversational practice to improve language acquisition.

6. Healthcare

SLMs are helping to improve patient care and expedite administrative duties in the healthcare industry:

Patient support: By helping with appointment scheduling, providing basic health advice, and managing administrative duties, SLMs free medical professionals to focus on more important facets of patient care.

High-quality training data matters especially for SLMs:

Efficiency over Scale: SLMs depend on small datasets, so high-quality data from otherwise scarce training resources is essential to getting the most out of them. Low-quality data can limit performance or cause task-specific errors.

Task-Specific Training: SLMs are frequently created for particular uses. Clear, relevant, and carefully selected data helps produce accurate, trustworthy results that are better tailored to the specific task.

Preventing Overfitting: With smaller datasets and fewer parameters, SLMs are more prone to overfitting. High-quality, diverse data helps prevent this and improves generalization within the target domain.

Let’s examine the prospects and difficulties facing small language models in the future.

Small language models (SLMs), which provide specialized, effective, and accessible solutions tailored to particular needs, are changing the way industries approach artificial intelligence. As they develop further, SLMs present a range of exciting opportunities as well as important challenges that will shape their future impact.

Opportunities

Customization for specialized needs

SLMs are particularly good at covering specialized topics that general AI models might miss. By concentrating on particular sectors or applications, they offer targeted language support that improves results. This customization lets businesses implement AI solutions precisely tailored to their needs, increasing productivity and effectiveness.

Training and architectural advancements

Ongoing advances in multitask model architectures and training methods should expand SLMs’ capabilities, making them more efficient and versatile so that they can take on more complex tasks and a wider range of functions.

Adoption of tailored AI

Adoption of tailored AI is expected to accelerate because SLMs deliver specific, measurable benefits across a range of industries. As companies see the financial benefits of specialized AI solutions, SLM adoption may outpace that of more general models, leading to widespread industry-specific AI integration.

Challenges

Concerns about over-reliance and ethics

Over-reliance on AI for delicate applications risks undervaluing the vital role that human judgment and supervision play. To prevent decisions that lack social or ethical considerations, it is crucial to ensure that SLMs are used responsibly and under proper human supervision.

Concept drift and data quality

SLMs rely heavily on the caliber of their training data. If the models encounter situations outside of their training scope, problems like concept drift and poor data quality can rapidly impair performance. Continuous observation and adjustment are necessary to preserve the relevance and accuracy of SLMs.
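One lightweight way to do that observation is to compare the distribution of a model’s outputs over time. The sketch below computes the population stability index (PSI) between a reference window and a recent window of labels produced by an SLM classifier. The ticket labels are hypothetical, and the 0.2 alert threshold is an assumed value that teams typically tune to their own data. A high PSI does not prove the model is wrong, only that its inputs or behavior have shifted enough to warrant review.

```python
import math
from collections import Counter
from typing import Iterable

DRIFT_THRESHOLD = 0.2  # assumed alert level; tune for your own data


def population_stability_index(reference: Iterable[str], current: Iterable[str],
                               eps: float = 1e-6) -> float:
    """PSI between two categorical distributions, e.g. labels an SLM assigned last month vs. this week."""
    ref_counts, cur_counts = Counter(reference), Counter(current)
    ref_total, cur_total = sum(ref_counts.values()), sum(cur_counts.values())
    psi = 0.0
    for category in set(ref_counts) | set(cur_counts):
        # eps keeps the log finite when a category is missing from one window.
        p = max(ref_counts[category] / ref_total, eps)
        q = max(cur_counts[category] / cur_total, eps)
        psi += (q - p) * math.log(q / p)
    return psi


if __name__ == "__main__":
    last_month = ["billing"] * 90 + ["outage"] * 10  # hypothetical ticket labels
    this_week = ["billing"] * 50 + ["outage"] * 50
    score = population_stability_index(last_month, this_week)
    print(f"PSI = {score:.3f}", "-> review model" if score > DRIFT_THRESHOLD else "-> OK")
```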

Transparency and explainability: As the number of specialized SLMs rises, it becomes harder to comprehend how they produce their outputs. Maintaining trust and accountability in AI systems requires transparency and explainability, particularly when working with individualized or sector-specific data.

The danger of malicious use: As SLM technology spreads, concerns grow about the possibility of malicious exploitation. To protect against these risks, strong security protocols and ethical standards must be put in place to prevent SLMs from being misused.

Limited generalization and niche focus: SLMs’ capacity to generalize is constrained by their domain-specific design. To address different areas of need, organizations might need to implement multiple SLMs, which could make managing and implementing AI more difficult.

In conclusion, small language models have significantly influenced AI. They are practical, reasonably priced, and suitable for a wide range of business requirements without requiring supercomputers. These models work well for a variety of tasks, such as customer service, math, and educational applications, while consuming far fewer resources than larger models typically do.

Small language models will only grow in significance as technology develops. They make it easier for companies of all sizes to take advantage of AI’s advantages, opening the door for more intelligent and effective solutions in various sectors.

Next, we’ll look at some frequently asked questions about small language models.

GPT-2, DistilBERT, and ALBERT are some well-known examples of small language models (SLMs).

RAG (retrieval-augmented generation) combines retrieval mechanisms with model-generated responses, whereas an SLM is a standalone model that is smaller than other models and optimized for a specific task.

SLMs aim to provide efficient, task-specific AI solutions with lower computational requirements and easy customization.

Two common examples of Large models are BERT and RoBERTa.

Examples of Learning Management Systems (LMS) include Moodle and Blackboard.

Our blog on Small Language Models (SLMs) wraps up here. We explored their efficiency, low-cost AI solutions, and role in revolutionizing applications like chatbots and text analysis, making AI more accessible and task-specific.

Are you keen to improve your skills and advance your career in the fast-growing field of generative artificial intelligence? If so, consider signing up for Edureka’s Generative AI Course: Masters Program. This Generative AI course covers Python, Data Science, AI, NLP, Prompt Engineering, ChatGPT, and more, with a curriculum designed by experts based on 5000+ global job trends. This extensive program is intended to provide you with up-to-date knowledge and practical experience.

Do you have any questions or need further information? Feel free to leave a comment below, and we’ll respond as soon as possible!
