This post explains the advantages of Hadoop 2.0 and continues our previous blog post announcing the stable release of Hadoop 2.0 for production deployments.

Since then, Apache has shipped two more releases of Hadoop 2. The most recent, Release 2.4.0, adds automatic failover of the YARN ResourceManager. Enterprise-ready features like this are a large part of why Hadoop keeps making news and drawing positive predictions.

This post explains the new features in detail and clarifies many common doubts about Hadoop 2.0. If you are new to Hadoop, review our previous blog posts on HDFS and MapReduce and HDFS Architecture.

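Following are the four main improvements in Hadoop 2.0 over Hadoop 1.x, each covered in its own section below:

- HDFS Federation: horizontal scalability of the NameNode
- NameNode High Availability: the NameNode is no longer a single point of failure
- YARN: the ability to run non-MapReduce applications on data stored in HDFS
- Resource Manager: the JobTracker's resource management and job scheduling/monitoring functions split into separate components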

Additional features such as the Capacity Scheduler (which enables multi-tenancy support in Hadoop), data snapshots, Windows support, and NFS access are increasing Hadoop adoption in the industry for solving Big Data problems.

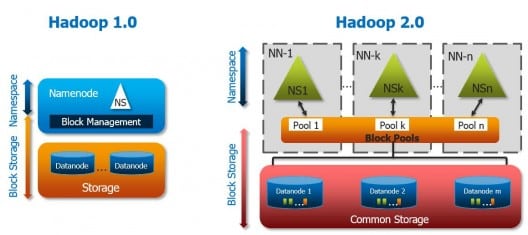

HDFS Federation

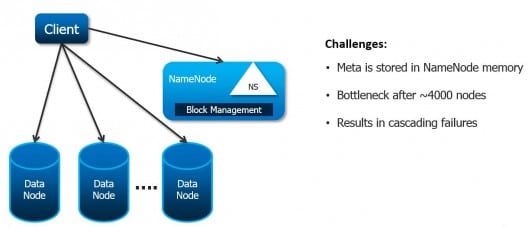

Even though a Hadoop cluster can scale up to hundreds of DataNodes, the NameNode keeps all of its metadata in memory (RAM). This limits the maximum number of files a Hadoop cluster can store (typically 50-100M files). As your data and cluster grow, NameNode memory becomes the bottleneck that caps the size of the cluster.
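To see why NameNode memory caps the file count, here is a rough back-of-the-envelope sketch. The figure of about 150 bytes of heap per namespace object (file or block) is a commonly quoted rule of thumb rather than a number from this post, and the one-block-per-file ratio is an assumption chosen for illustration.

```python
# Rough estimate of the NameNode heap needed for a given number of files.
# ASSUMPTIONS (not from the post): ~150 bytes of heap per namespace object
# and an average of 1 block per file; real usage varies by version and workload.
BYTES_PER_OBJECT = 150


def namenode_heap_gb(num_files: int, blocks_per_file: float = 1.0) -> float:
    """Return an approximate heap size in GB for file and block objects."""
    objects = num_files * (1 + blocks_per_file)  # one file entry plus its blocks
    return objects * BYTES_PER_OBJECT / 1e9


if __name__ == "__main__":
    for files in (50_000_000, 100_000_000):
        print(f"{files:,} files -> ~{namenode_heap_gb(files):.0f} GB of heap")
```

Under these assumptions the estimate grows linearly with the file count, which is why a single NameNode eventually runs out of headroom regardless of how many DataNodes are added.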

The Hadoop 2.0 feature HDFS Federation allows horizontal scaling of the Hadoop Distributed File System (HDFS). It is one of the features most sought after by enterprise-class Hadoop users such as Amazon and eBay. HDFS Federation supports multiple NameNodes and namespaces.

To scale the name service horizontally, federation uses multiple independent NameNodes and namespaces. The NameNodes are federated: they are independent and do not require coordination with each other. All the NameNodes use the DataNodes as common storage for blocks. Each DataNode registers with every NameNode in the cluster, sends periodic heartbeats and block reports, and handles commands from the NameNodes.
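The relationship described above can be sketched as a toy model. This is not Hadoop code: the class names, method names, and namespace paths are hypothetical and only illustrate that NameNodes never talk to each other while every DataNode reports to all of them.

```python
# Toy model of HDFS Federation: independent NameNodes share the same DataNodes.
# Class and method names are hypothetical, not Hadoop APIs.
from dataclasses import dataclass, field


@dataclass
class NameNode:
    namespace: str  # e.g. "/user" or "/logs" (made-up examples)
    live_datanodes: set = field(default_factory=set)
    block_reports: dict = field(default_factory=dict)

    def register(self, datanode_id: str) -> None:
        self.live_datanodes.add(datanode_id)

    def receive_block_report(self, datanode_id: str, blocks: list) -> None:
        # Each NameNode only learns about blocks that belong to its namespace.
        self.block_reports[datanode_id] = list(blocks)


@dataclass
class DataNode:
    node_id: str
    blocks: dict = field(default_factory=dict)  # namespace -> block ids

    def register_with_all(self, namenodes: list) -> None:
        for nn in namenodes:
            nn.register(self.node_id)

    def send_block_reports(self, namenodes: list) -> None:
        for nn in namenodes:
            nn.receive_block_report(self.node_id, self.blocks.get(nn.namespace, []))


namenodes = [NameNode("/user"), NameNode("/logs")]
datanodes = [
    DataNode("dn1", {"/user": ["blk_1"], "/logs": ["blk_7"]}),
    DataNode("dn2", {"/user": ["blk_2"]}),
]
for dn in datanodes:
    dn.register_with_all(namenodes)
    dn.send_block_reports(namenodes)

for nn in namenodes:
    print(nn.namespace, sorted(nn.live_datanodes), nn.block_reports)
```

Note that the two `NameNode` objects hold no reference to each other, which mirrors the point that federated NameNodes need no coordination.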

NameNode High Availability

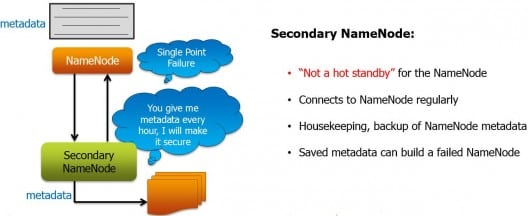

In Hadoop 1.x, the NameNode was a single point of failure: a NameNode failure makes the Hadoop cluster inaccessible. This is usually a rare occurrence, because NameNode servers run on business-critical hardware with RAS (reliability, availability, serviceability) features.

When the NameNode does fail, Hadoop administrators need to recover it manually using the Secondary NameNode.

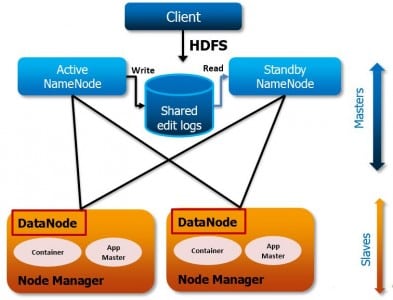

The Hadoop 2.0 architecture supports multiple NameNodes to remove this single point of failure. The Hadoop 2.0 NameNode High Availability feature adds support for a passive standby NameNode, and the active-passive pair is configured for automatic failover.

All namespace edits are logged to shared NFS storage, and there is only a single writer (enforced by the fencing configuration) to this shared storage at any point in time. The passive NameNode reads from this storage and keeps up-to-date metadata for the cluster. If the active NameNode fails, the passive NameNode becomes the active NameNode and starts writing to the shared storage. The fencing mechanism ensures that there is only one writer to the shared storage at any point in time.
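The single-writer rule and the standby catching up can be sketched as follows. This is a simplified model, not Hadoop's implementation: every class name is hypothetical, and fencing is modelled with an increasing epoch number, which is an assumption made for illustration.

```python
# Toy model of active/standby NameNode failover with a single-writer edit log.
# All names are hypothetical; epoch-number fencing is an assumed mechanism.


class FencedError(Exception):
    """Raised when a stale writer tries to append to the shared edit log."""


class SharedEditLog:
    def __init__(self) -> None:
        self.edits = []
        self.writer_epoch = 0  # only the holder of the latest epoch may write

    def claim(self) -> int:
        # Claiming the log fences off every earlier writer.
        self.writer_epoch += 1
        return self.writer_epoch

    def append(self, epoch: int, edit: str) -> None:
        if epoch != self.writer_epoch:
            raise FencedError(f"epoch {epoch} is fenced")
        self.edits.append(edit)


class NameNode:
    def __init__(self, name: str, log: SharedEditLog) -> None:
        self.name, self.log = name, log
        self.epoch = None    # None means passive (standby)
        self.applied = 0     # number of log edits replayed into memory
        self.namespace = []

    def tail_edits(self) -> None:
        # The standby keeps its metadata current by replaying new edits.
        new = self.log.edits[self.applied:]
        self.namespace.extend(new)
        self.applied += len(new)

    def become_active(self) -> None:
        self.tail_edits()    # catch up before taking over
        self.epoch = self.log.claim()

    def write(self, edit: str) -> None:
        self.log.append(self.epoch, edit)  # raises if this node was fenced
        self.namespace.append(edit)
        self.applied += 1


log = SharedEditLog()
nn1, nn2 = NameNode("nn1", log), NameNode("nn2", log)
nn1.become_active()
nn1.write("mkdir /data")
nn2.become_active()              # failover: nn1 is now fenced
nn2.write("create /data/a")
try:
    nn1.write("delete /data")    # stale writer is rejected
except FencedError as err:
    print("rejected:", err)
print(log.edits)
```

The old active node's write fails instead of corrupting the log, which is the property the fencing mechanism exists to guarantee.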

With Hadoop Release 2.4.0, High Availability support for the ResourceManager is also available.

YARN – Yet Another Resource Negotiator

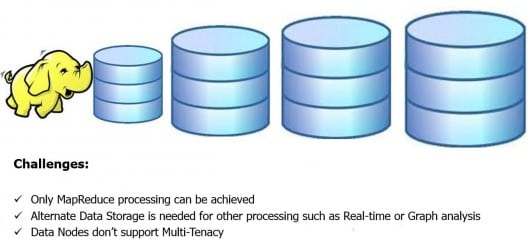

A large amount of data from multiple stores is kept in HDFS, but in Hadoop 1.x you can only run MapReduce framework jobs (including those generated by Pig and Hive) to process and analyse it. To process the data with other kinds of applications, such as graph or streaming frameworks, you need to move it out of HDFS, for example into Cassandra or HBase.

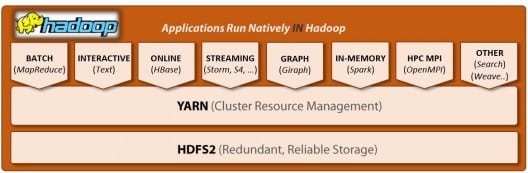

Hadoop 2.0 provides YARN APIs for writing other frameworks that run on top of HDFS. This makes it possible to run non-MapReduce Big Data applications on Hadoop. Spark, MPI, Giraph, and HAMA are a few of the applications written or ported to run within YARN.

Image Credit: http://hortonworks.com/hadoop/yarn/

YARN provides the daemons and APIs necessary to develop generic distributed applications of any kind, handles and schedules resource requests (such as memory and CPU) from such applications, and supervises their execution.

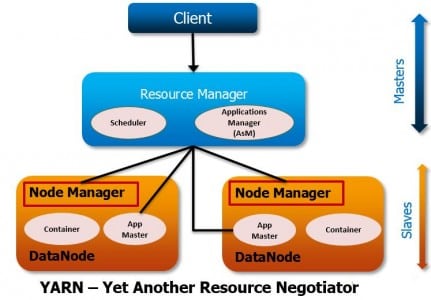

YARN – Resource Manager

In Hadoop 1.x, the JobTracker is the master daemon for both job resource management and job scheduling/monitoring. In a large Hadoop cluster with thousands of Map and Reduce tasks running under TaskTrackers on the DataNodes, this results in CPU and network bottlenecks.

The JobTracker takes care of the entire life cycle of a job, from scheduling to successful completion (scheduling and monitoring). It also has to maintain resource information for each node, such as the number of map and reduce slots available on the DataNodes (resource management).

The Next Generation MapReduce framework (MRv2) is an application framework that runs within YARN. The new MRv2 framework divides the two major functions of the JobTracker, resource management and job scheduling/monitoring, into separate components.

The new ResourceManager manages the global assignment of compute resources to applications and the per-application ApplicationMaster manages the application’s scheduling and coordination.
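The division of labour between the two components can be sketched with a small model. The class names mirror the daemons, but the methods, the memory-only resource model, and the task names are illustrative assumptions rather than the YARN API.

```python
# Toy model of the ResourceManager / ApplicationMaster split.
# Method names and the memory-only resource model are illustrative assumptions.


class ResourceManager:
    """Global view: hands out containers, knows nothing about task logic."""

    def __init__(self, total_memory_mb: int) -> None:
        self.free_mb = total_memory_mb

    def allocate(self, memory_mb: int) -> bool:
        if memory_mb > self.free_mb:
            return False
        self.free_mb -= memory_mb
        return True

    def release(self, memory_mb: int) -> None:
        self.free_mb += memory_mb


class ApplicationMaster:
    """Per-application: decides which tasks to run and tracks their progress."""

    def __init__(self, app_id: str, tasks: list, task_memory_mb: int) -> None:
        self.app_id = app_id
        self.pending = list(tasks)
        self.running = []
        self.done = []
        self.task_memory_mb = task_memory_mb

    def request_containers(self, rm: ResourceManager) -> None:
        # Start as many pending tasks as the ResourceManager will grant.
        while self.pending and rm.allocate(self.task_memory_mb):
            self.running.append(self.pending.pop(0))

    def finish_running(self, rm: ResourceManager) -> None:
        # Pretend every running task completes, then return its container.
        for task in self.running:
            rm.release(self.task_memory_mb)
            self.done.append(task)
        self.running = []


rm = ResourceManager(total_memory_mb=4096)
am = ApplicationMaster("app_1", ["map0", "map1", "map2", "reduce0"], 1536)
while am.pending or am.running:
    am.request_containers(rm)
    print("running:", am.running, "free MB:", rm.free_mb)
    am.finish_running(rm)
```

The `ResourceManager` here never inspects task names or progress; all job-specific bookkeeping lives in the `ApplicationMaster`, which is the separation the paragraph above describes.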

YARN provides better resource management in Hadoop, resulting in improved cluster efficiency and application performance. This feature not only improves MapReduce data processing but also opens Hadoop to other data processing applications.

YARN’s execution model is more generic than the earlier MapReduce implementation in Hadoop 1.0. YARN can run applications that do not follow the MapReduce model, unlike the original Apache Hadoop MapReduce (also called MRv1).

It is important to understand that YARN and MRv2 are two different concepts and should not be used interchangeably. YARN is the resource management framework that provides infrastructure and APIs to facilitate the request for, allocation of, and scheduling of cluster resources. As explained earlier, MRv2 is an application framework that runs within YARN.

Capacity Scheduler – Multi-tenancy Support

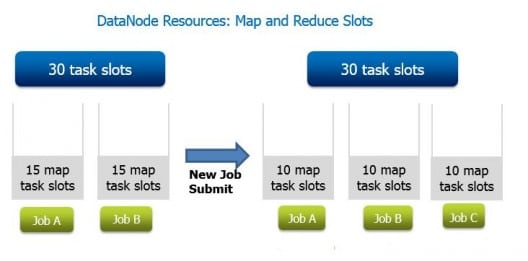

In Hadoop 1.0, all DataNodes are dedicated to Map and Reduce tasks and cannot be used for other processing, and the cluster's capacity is measured in MapReduce slots. Each node in the cluster has a pre-defined set of slots, and the scheduler ensures that a percentage of those slots is available to a set of users and groups. So if you are not running MapReduce jobs, you are wasting DataNode resources.

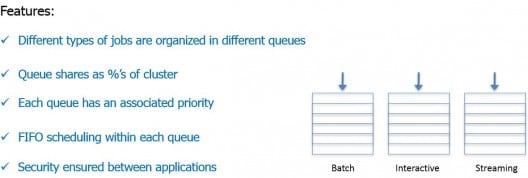

With Capacity Scheduler support in Hadoop 2.0, DataNode resources can be used for other applications too. The Capacity Scheduler (CS) ensures that groups of users and applications get a guaranteed share of the cluster while maximizing overall cluster utilization. Through elastic resource allocation, if the cluster has spare resources, users and applications can take up more of the cluster than their guaranteed minimum share.
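The idea of a guaranteed share plus elastic borrowing can be sketched in a few lines. The queue names, percentages, and the simple hand-out rule below are illustrative assumptions, not the Capacity Scheduler's actual algorithm.

```python
# Toy model of guaranteed shares with elastic borrowing between queues.
# Queue names, percentages, and the allocation rule are illustrative
# assumptions, not the Capacity Scheduler's actual algorithm.


def allocate(total: int, guaranteed_pct: dict, demand: dict) -> dict:
    """Give each queue min(demand, guarantee), then lend out what is left."""
    grant = {
        q: min(demand[q], total * pct // 100) for q, pct in guaranteed_pct.items()
    }
    spare = total - sum(grant.values())
    # Hand spare capacity to queues that still want more, in name order.
    for q in sorted(grant):
        extra = min(spare, demand[q] - grant[q])
        grant[q] += extra
        spare -= extra
    return grant


shares = {"analytics": 50, "etl": 30, "adhoc": 20}

# Light load elsewhere: "analytics" borrows beyond its 50% guarantee.
light = allocate(100, shares, {"analytics": 80, "etl": 10, "adhoc": 5})
# Every queue is busy: each one is held to its guaranteed share.
busy = allocate(100, shares, {"analytics": 80, "etl": 40, "adhoc": 30})
print(light)
print(busy)
```

When other queues are idle, a busy queue can exceed its guarantee; when everyone is busy, each queue falls back to its guaranteed share. A real scheduler would also need a way to reclaim borrowed capacity, which this sketch leaves out.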

In Hadoop 2.0 with YARN and MapReduce v2, the cluster capacity is measured as the physical resource (RAM now, and CPU as well in the future) that is available across the entire cluster.

The ResourceManager supports hierarchical application queues and those queues can be guaranteed a percentage of the cluster resources. It performs no monitoring or tracking of status for the application and works as a pure scheduler.

The ResourceManager performs its scheduling function based on the resource requirements of the applications. Each application makes resource requests of several types, such as memory, CPU, disk, and network. This is a significant change from the fixed-type slot model of Hadoop 1.x MapReduce, which has a significant negative impact on cluster utilization.
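A small comparison shows why fixed-type slots hurt utilization. The node size, slot counts, and task sizes below are made-up numbers for illustration, and memory is the only resource modelled.

```python
# Compare fixed map/reduce slots with resource-based packing on one node.
# Node size, slot counts, and task sizes are illustrative assumptions.

NODE_MEMORY_MB = 8192
SLOT_MB = 1024
MAP_SLOTS, REDUCE_SLOTS = 6, 2  # Hadoop 1.x style: slot types are fixed


def slot_utilization(map_tasks: int, reduce_tasks: int) -> float:
    """Fraction of node memory in use when tasks must fit typed slots."""
    used = min(map_tasks, MAP_SLOTS) + min(reduce_tasks, REDUCE_SLOTS)
    return used * SLOT_MB / NODE_MEMORY_MB


def resource_utilization(requests_mb: list) -> float:
    """Fraction of node memory in use when containers are sized per request."""
    used = 0
    for req in requests_mb:
        if used + req <= NODE_MEMORY_MB:
            used += req
    return used / NODE_MEMORY_MB


# A reduce-heavy phase: no map tasks left, eight reducers waiting.
print(slot_utilization(map_tasks=0, reduce_tasks=8))
print(resource_utilization([1024] * 8))
```

With typed slots, the six idle map slots cannot be given to waiting reducers, so most of the node sits unused; with per-request containers the same reducers can fill the node.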

You can also check out our posts on HDFS and MapReduce, HDFS Architecture, 5 Reasons to Learn Hadoop, and How Essential is Hadoop Training.

Do we have a Secondary NameNode for each NameNode in the cluster? Where does the passive node fit into HDFS Federation?

Hey Gopi, thanks for checking out the blog. To answer your question, the concept of the Secondary NameNode comes from Hadoop 1, which had only a single NameNode and a single Secondary NameNode corresponding to it. Hadoop 2 allows two NameNodes in the same cluster: one active (NameNode) and one passive (Standby). If the active NameNode fails, the Standby node takes over and becomes active.

About where the passive node fits into HDFS Federation:

In federation, each NameNode has its own passive node. For example, a federated cluster with 3 NameNodes will have 3 NameNodes, 3 passive nodes, and multiple DataNodes, and together they make up a complete cluster. Hope this helps.

"It is important to understand that YARN and MRv2 are two different concepts and should be used interchangeably"?

YARN and MRv2 are two different features of Hadoop 2.0 and cannot be used interchangeably.

MRv2 is the newer MapReduce, written with YARN in mind, and has been available since Apache Hadoop 0.23, the release line that led to Hadoop 2.0.

YARN is the new layer in Hadoop 2.0 that manages resources and schedules jobs. It is a software framework that can run not only MRv2 but other applications too. MRv2 is an application framework written using the YARN API, and it runs within YARN.

Hope this information answers your question. Do comment for any clarifications. Thanks!

For more information you can refer to this link : https://www.edureka.co/blog/hadoop-faq/