On May 13, 2024, OpenAI announced its latest AI model, GPT-4o, also known as GPT-4 omni. The announcement sent shockwaves through the tech world and captured the imagination of AI enthusiasts everywhere. Unlike OpenAI's earlier models such as GPT-3.5 and GPT-4, GPT-4o is a single model that can natively understand and generate content across different modalities, including text, audio, and visuals.

Hello everyone, and welcome to this blog on “GPT 4o Tutorial”.

GPT-4 omni accepts user input as any combination of text, audio, and images, and it can generate any combination of text, audio, and images as output. What makes it special is its speed. GPT-4o can respond to audio input in as little as 232 milliseconds, with an average of 320 milliseconds, which is similar to human response time in a conversation. This makes GPT-4o a jaw-dropping innovation in the field of Human-Computer Interaction: talking to it can feel like speaking with a smart, intelligent person who is almost your artificial best friend.
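
As a minimal sketch of what a mixed text-and-image input can look like in practice, the Python below builds a request body in the shape of OpenAI's Chat Completions API, where one user message carries several “content parts”. The field names follow the publicly documented format at the time of writing and should be treated as an assumption to verify against the current API reference. No request is sent, the image URL is a placeholder, and audio input is not shown.

```python
import json


def build_multimodal_request(question: str, image_url: str, model: str = "gpt-4o") -> dict:
    """Build a Chat Completions-style request body that mixes text and an image.

    Field names ("messages", "content", "image_url") are assumed from OpenAI's
    public docs at the time of writing; check the current reference before use.
    """
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                # A single user message can carry several content parts.
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder image URL, for illustration only.
    body = build_multimodal_request(
        "What is shown in this picture?",
        "https://example.com/photo.jpg",
    )
    # Show the JSON that would be POSTed; this script makes no network call.
    print(json.dumps(body, indent=2))
```

Keeping the payload as a plain dictionary makes it easy to inspect before handing it to an HTTP client or an official SDK.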

Now, let’s check more of GPT-4o’s capabilities with respect to human-computer interaction.

GPT-4o matches GPT-4 Turbo’s performance on English text and coding, but it clearly outshines GPT-4 Turbo on text in languages other than English.

GPT-4o's model evaluations show how it improves on GPT-4 Turbo in multilingual, audio, and vision capabilities.

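In text evaluation, GPT-4o scores 88.7% on 0-shot CoT MMLU. MMLU is a benchmark of general-knowledge multiple-choice questions, and “0-shot CoT” means the model is given no worked examples and is prompted to reason step by step (chain of thought) before answering.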
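
To make “0-shot CoT” more concrete, here is a hypothetical sketch of how such an evaluation could be set up: the prompt contains no solved examples (zero-shot), it asks the model to reason before answering (chain of thought), and the score is the percentage of questions answered correctly. The prompt template, the “Answer: <letter>” convention, and the helper names are illustrative assumptions, not OpenAI's actual evaluation harness.

```python
import re
from typing import List, Optional


def zero_shot_cot_prompt(question: str, choices: List[str]) -> str:
    """Format a four-option multiple-choice question as a zero-shot CoT prompt.

    Hypothetical template: no solved examples are included, and the model is
    asked to think step by step before giving a final letter.
    """
    letters = "ABCD"  # MMLU questions have four options
    lines = [question]
    lines += [f"{letters[i]}. {choice}" for i, choice in enumerate(choices)]
    lines.append("Think step by step, then finish with 'Answer: <letter>'.")
    return "\n".join(lines)


def extract_answer(model_output: str) -> Optional[str]:
    """Return the last 'Answer: X' letter in a reply, or None if there is none."""
    matches = re.findall(r"Answer:\s*([ABCD])", model_output)
    return matches[-1] if matches else None


def accuracy(predictions: List[Optional[str]], gold: List[str]) -> float:
    """Percentage of questions where the extracted letter matches the key."""
    correct = sum(p == g for p, g in zip(predictions, gold))
    return 100.0 * correct / len(gold)


if __name__ == "__main__":
    prompt = zero_shot_cot_prompt("What is 2 + 2?", ["3", "4", "5", "6"])
    print(prompt)
    # A made-up model reply, standing in for a real API response.
    replies = ["2 + 2 equals 4, which is option B. Answer: B"]
    preds = [extract_answer(r) for r in replies]
    print(accuracy(preds, ["B"]))  # prints 100.0
```

Taking the last “Answer:” match is a deliberate choice: a model that reasons aloud may mention several options before committing to one.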

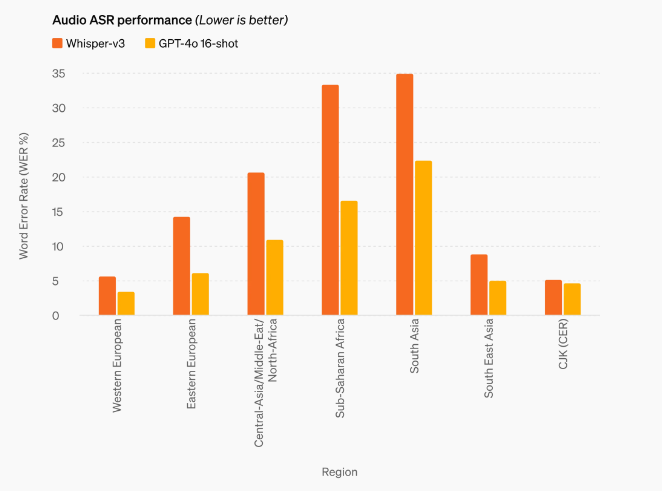

In audio ASR (automatic speech recognition), GPT-4o significantly improves accuracy over Whisper-v3, performing especially well on lower-resourced languages.
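
ASR quality is commonly reported as word error rate (WER), where lower is better. Assuming the comparison above uses WER, as ASR benchmarks typically do, the sketch below shows how the metric is computed: count the minimum number of word substitutions, deletions, and insertions needed to turn the reference transcript into the model's transcript, then divide by the number of reference words. Real evaluations usually normalize case and punctuation first, which this minimal version skips.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words.

    Uses a word-level Levenshtein (edit-distance) table. 0.0 is a perfect match.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # dist[i][j] = minimum edits to turn ref[:i] into hyp[:j]
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words

    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution (or match if cost is 0)
            )
    return dist[len(ref)][len(hyp)] / len(ref)


if __name__ == "__main__":
    # One substitution (sat -> sit) and one deletion (the) over 6 reference words.
    print(round(word_error_rate("the cat sat on the mat", "the cat sit on mat"), 3))  # prints 0.333
```

Because insertions are counted too, WER can exceed 1.0 when a transcript contains many extra words.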

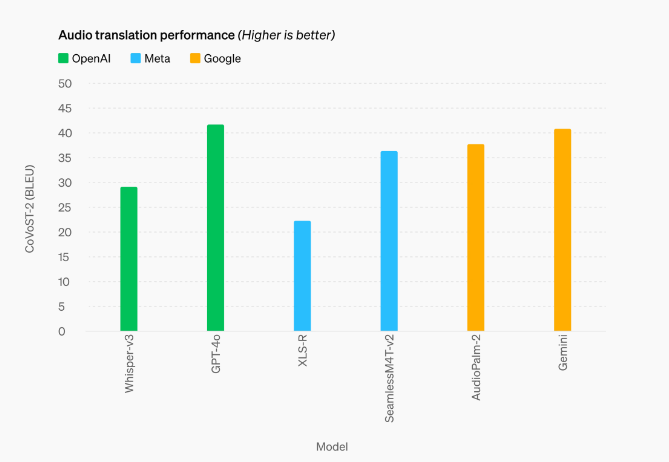

For speech translation, GPT-4o sets new benchmarks, outperforming Whisper-v3 as well as models from Google and Meta, especially on the MLS benchmark.
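
Speech translation quality is often scored with BLEU, which measures how many n-grams (runs of 1 to 4 words) in a system's translation also appear in a reference translation. This post does not state the exact metric behind the chart, so treat BLEU here as an assumption. The sketch is a simplified, unsmoothed, single-reference version; published results are normally computed at corpus level with a standard tool such as sacreBLEU.

```python
import math
from collections import Counter
from typing import List


def ngrams(tokens: List[str], n: int) -> Counter:
    """Count every contiguous n-gram in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(reference: str, candidate: str, max_n: int = 4) -> float:
    """Unsmoothed single-reference BLEU on a 0-100 scale.

    BLEU = BP * exp(mean of log p_n), where p_n is the clipped n-gram precision
    and BP is a brevity penalty for candidates shorter than the reference.
    """
    ref, cand = reference.split(), candidate.split()
    if not cand:
        return 0.0

    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts = ngrams(cand, n)
        ref_counts = ngrams(ref, n)
        total = sum(cand_counts.values())
        # Clip each candidate n-gram count by its count in the reference.
        overlap = sum(min(count, ref_counts[gram]) for gram, count in cand_counts.items())
        if total == 0 or overlap == 0:
            return 0.0  # no smoothing: any zero precision makes BLEU zero
        log_precisions.append(math.log(overlap / total))

    # Brevity penalty: 1 if the candidate is longer than the reference.
    bp = 1.0 if len(cand) > len(ref) else math.exp(1 - len(ref) / len(cand))
    return 100.0 * bp * math.exp(sum(log_precisions) / max_n)


if __name__ == "__main__":
    print(sentence_bleu("the cat is on the mat", "the cat is on the mat"))  # prints 100.0
    print(sentence_bleu("the cat is on the mat", "a cat sits on the mat"))
```

Without smoothing, a short candidate that shares no 4-gram with the reference scores 0.0, which is one reason BLEU is usually reported over an entire test set rather than per sentence.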

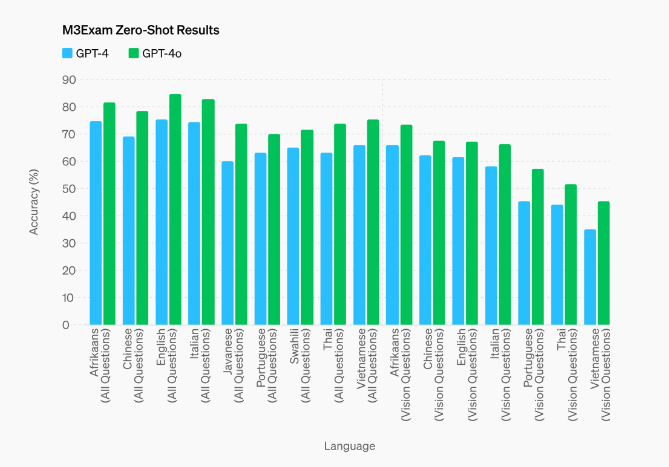

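The M3Exam benchmark is both a multilingual and a vision evaluation, built from multiple-choice questions taken from standardized tests in different countries, some of which include figures and diagrams. On M3Exam, GPT-4o outperforms GPT-4 across all languages.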

It also outperforms other AI models, including GPT-4 Turbo, Gemini, and Claude Opus, in vision understanding.

Taken together, these results show that GPT-4o is especially strong at vision and audio understanding compared with existing models.

Now, let’s check what exactly makes it better compared to other AI models.

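Previously, when you used Voice Mode to talk to ChatGPT, there was a noticeable delay of around 2.8 seconds with GPT-3.5 and 5.4 seconds with GPT-4. This was because Voice Mode was a pipeline of three separate models:

- a simple model that transcribed your audio into text,
- GPT-3.5 or GPT-4, which took in that text and produced a text reply,
- a third simple model that converted the reply back into audio.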

This process meant that GPT-4, the main intelligent model, lost a lot of information. It couldn’t directly perceive tone, multiple speakers, or background noise, and it couldn’t output laughter, singing, or emotional expression, because it only ever saw plain text.

With GPT-4o, OpenAI has trained a single new model end-to-end across text, vision, and audio, so the same model handles all of these inputs and outputs. This means your spoken words go straight into GPT-4o, and its responses can come out as audio that carries all those nuances.
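
To make the difference concrete, here is a toy Python sketch contrasting the old three-stage Voice Mode (speech-to-text, then a text-only model, then text-to-speech) with a single end-to-end model. Everything here is hypothetical: `SpokenInput` and all function names are invented for illustration, no real model is called, and the strings only mark which information each stage can see.

```python
from dataclasses import dataclass


@dataclass
class SpokenInput:
    """Toy stand-in for an audio clip: the words plus cues that are not words."""
    words: str
    tone: str        # e.g. "excited" or "sarcastic"
    background: str  # e.g. "dog barking"


# --- Old Voice Mode: three separate models chained together ---

def speech_to_text(clip: SpokenInput) -> str:
    # Stage 1: transcription keeps only the words; tone and background are dropped.
    return clip.words


def text_only_model(prompt: str) -> str:
    # Stage 2: the text model (GPT-3.5 or GPT-4 in the old pipeline) sees plain text only.
    return f"Reply to: {prompt!r}"


def text_to_speech(text: str) -> dict:
    # Stage 3: a separate voice model reads the text aloud in one fixed style.
    return {"spoken_text": text, "style": "neutral"}


def cascaded_voice_mode(clip: SpokenInput) -> dict:
    return text_to_speech(text_only_model(speech_to_text(clip)))


# --- GPT-4o-style: one model sees the whole input ---

def end_to_end_model(clip: SpokenInput) -> dict:
    # Every cue in the input is available when the reply is produced.
    reply = f"Reply to: {clip.words!r} (heard a {clip.tone} tone and {clip.background})"
    return {"spoken_text": reply, "style": f"adapted to a {clip.tone} tone"}


if __name__ == "__main__":
    clip = SpokenInput(words="How are you?", tone="excited", background="dog barking")
    print(cascaded_voice_mode(clip))
    print(end_to_end_model(clip))
```

In the cascaded version, nothing after `speech_to_text` can recover the tone or background, no matter how capable the middle model is; in the end-to-end version that information is never thrown away.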

Once it becomes available to the general public, GPT-4 omni could bring a new revolution to the world of AI.

GPT-4o in ChatGPT will come with several features:

- Vision: upload images and chat about them.
- Browsing: get real-time, up-to-date responses that draw on the web.
- Memory: the model remembers facts about you and uses them in future chats.
- Advanced data analysis: analyze data and create charts.

All of these features will be available to you in a couple of weeks. For now, you can visit OpenAI's website and watch their videos to explore GPT-4o's features. Also, don’t forget to check out our YouTube video “GPT 4o Tutorial”.

To explore and learn more about ChatGPT, you can enroll in our ChatGPT Complete Course: Beginners to Advanced.
