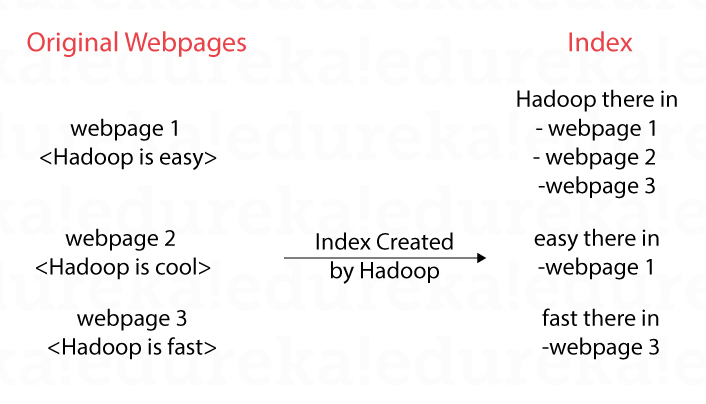

When we search for something in Google or Yahoo, we do get the response in a fraction of second. How is it possible that Google, Yahoo and other search engines return the results so fast from the ever growing web? The search engines crawl through the internet, download the webpages and create an index as shown below. For any query from us, they use the index to figure out what are all the web pages containing the text we were searching for. By looking at the below index on the right side, we can clearly know that Hadoop is there is web page 1, 2 and 3.

Then, the PageRanking algorithm is used which is based on how the pages are connected to figure out which page to show at the top and which at the bottom. In the below scenario W1 is the “most popular” because everyone is linking to it and W4 is the “least popular” as no one is linking to it. So, W1 is shown at the top and W4 at the bottom in the search results.

Then, the PageRanking algorithm is used which is based on how the pages are connected to figure out which page to show at the top and which at the bottom. In the below scenario W1 is the “most popular” because everyone is linking to it and W4 is the “least popular” as no one is linking to it. So, W1 is shown at the top and W4 at the bottom in the search results.

With the explosion of the web pages these search engines were finding challenges to create index and do the PageRanking calculations. This is where the birth of Hadoop took place in Yahoo and later became FOSS (Free and Open Source Software) under the ASF (Apache Software Foundation). Once under the ASF a lot of companies started taking interest in Hadoop and started contributing to improve it. Hadoop was the one to start the Big Data revolution, but a lot of other softwares like Spark, Hive, Pig, Sqoop, Zookeeper, HBase, Cassandra, Flume started evolving to address the limitations and gaps in Hadoop.

With the explosion of the web pages these search engines were finding challenges to create index and do the PageRanking calculations. This is where the birth of Hadoop took place in Yahoo and later became FOSS (Free and Open Source Software) under the ASF (Apache Software Foundation). Once under the ASF a lot of companies started taking interest in Hadoop and started contributing to improve it. Hadoop was the one to start the Big Data revolution, but a lot of other softwares like Spark, Hive, Pig, Sqoop, Zookeeper, HBase, Cassandra, Flume started evolving to address the limitations and gaps in Hadoop.

Web search engines were the first ones to use Hadoop, but later a lot of use-cases started to evolve as more and more data was generated. Let’s take the example of an eCommerce application used for recommending books to user. As per the below diagram, user1 bought book1, book2 and book3, user2 bought some books and so on. Looking closely, we can observe that user1 and user2 have similar taste as they have bought book1 and book2. So, book3 can be recommended to user2 and book4 can be recommended to user1. This is called Collaborative Filtering, a type of Machine Learning algorithm. We can flip the below diagram and get similar books.

In the above case we have created index, PageRanked and recommended to the user, the size of the data was small and so we were able to visualize the data and infer some results out of it. As the size of data gets bigger day-by-day and out of control, this is where Big Data tools like Hadoop come into picture.

In the above case we have created index, PageRanked and recommended to the user, the size of the data was small and so we were able to visualize the data and infer some results out of it. As the size of data gets bigger day-by-day and out of control, this is where Big Data tools like Hadoop come into picture.

Hadoop solves a lot of problems, but installing Hadoop and other Big Data software had never been an easy task. There are a lot of configuration parameters to tweak, like integration, installation and configuration issues to work with. This is where companies like Cloudera, MapR and Databricks help. They make the installing Big Data software easier and do provide commercial support, for example let’s say something happens in the production. Amazon EMR (Elastic MapReduce) takes the ease of using Hadoop etc much easier. The name Elastic MapReduce is a bit of misnomer as AWS EMR also supports other distributed computing models like Resilient Distributed Datasets and not just MapReduce.

Have a look at this article to learn how to create Hadoop cluster with ECR.

REGISTER FOR FREE WEBINAR

X

REGISTER FOR FREE WEBINAR

X

Thank you for registering

Join Edureka Meetup community for 100+ Free Webinars each month

JOIN MEETUP GROUP

Thank you for registering

Join Edureka Meetup community for 100+ Free Webinars each month

JOIN MEETUP GROUP