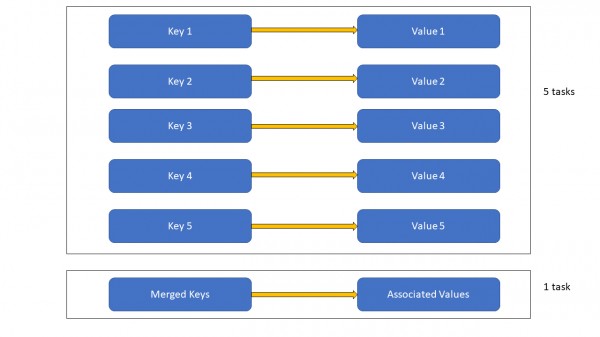

The shuffle operates on key-value pairs. Merging keys therefore reduces the number of pairs that have to be shuffled, which lowers the cost. With only a few keys the difference may not be noticeable, but when you merge a large number of keys the cost drops drastically.

Here, you can see how the number of tasks is reduced when the keys are merged. Hadoop only knows how to handle individual key-value pairs during the shuffle, so on its own it cannot transfer all the keys destined for the same reducer at once.
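To make the effect concrete, here is a minimal sketch in plain Python rather than Hadoop code. It assumes that "merging" means grouping all values that share a key into a single pair before the shuffle. The function names and the sample data are hypothetical and chosen only for illustration; the sketch just counts how many pairs would be shuffled in each case.

```python
from collections import defaultdict


def shuffle_pairs_unmerged(map_output):
    """Every (key, value) record is shuffled as its own pair."""
    return list(map_output)


def shuffle_pairs_merged(map_output):
    """Group values under each key so only one pair per distinct key is shuffled."""
    grouped = defaultdict(list)
    for key, value in map_output:
        grouped[key].append(value)
    return [(key, values) for key, values in grouped.items()]


# Hypothetical mapper output: six records spread over three distinct keys.
map_output = [("a", 1), ("b", 1), ("a", 1), ("c", 1), ("a", 1), ("b", 1)]

unmerged = shuffle_pairs_unmerged(map_output)
merged = shuffle_pairs_merged(map_output)

print("pairs shuffled without merging:", len(unmerged))
print("pairs shuffled with merging:", len(merged))
```

With six records over three keys the saving is small, which matches the point above: the gap between the two counts only becomes significant as the number of records per key grows.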
