Making Generative AI interpretable for compliance applications means combining explainable AI (XAI) techniques, rule-based constraints, and transparency mechanisms so that each decision can be justified.

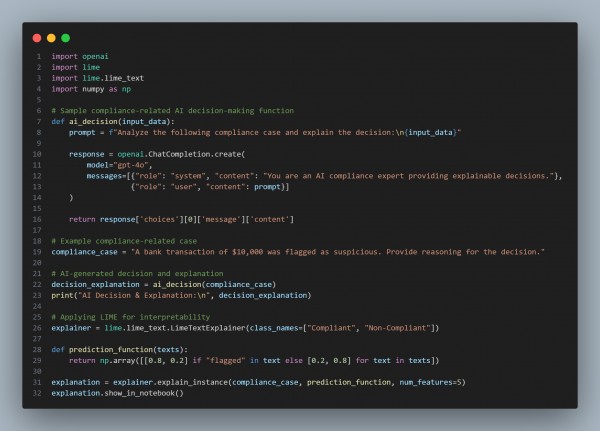

Here is the code snippet you can refer to:

The code above relies on the following key points:

- Explainable AI (XAI) Integration – Uses methods like LIME, SHAP, and rule-based constraints to justify decisions.

- Transparency in Decision-Making – The system documents and explains why each compliance flag is raised.

- Auditable AI Outputs – Ensures regulatory compliance by maintaining logs of AI-driven decisions.

- Bias Detection & Mitigation – Identifies potential biases in AI-generated risk assessments.

- Human-AI Collaboration – Allows manual overrides and expert validation for high-stakes decisions.
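As a rough illustration of the points above, here is a minimal Python sketch that uses only the standard library. It is not the snippet from the image. All names (`Rule`, `assess`, `override`, `flag_rate_by_group`, `AUDIT_LOG`), the rules, and the thresholds are hypothetical, and plain rules stand in for LIME or SHAP so the example stays self-contained.

```python
from dataclasses import dataclass, asdict
from datetime import datetime, timezone
from typing import Callable


@dataclass
class Rule:
    name: str
    reason: str                        # human-readable justification
    predicate: Callable[[dict], bool]  # returns True when the rule fires


@dataclass
class Decision:
    transaction_id: str
    flagged: bool
    reasons: list
    needs_human_review: bool


# Hypothetical rules and thresholds, for illustration only.
RULES = [
    Rule("large_amount", "Amount exceeds 10,000",
         lambda t: t["amount"] > 10_000),
    Rule("high_risk_country", "Counterparty is in a high-risk jurisdiction",
         lambda t: t["country"] in {"XX", "YY"}),
]

AUDIT_LOG = []  # in-memory stand-in for a persistent audit store


def _log(event: str, payload: dict) -> None:
    """Append a timestamped entry so every decision can be audited later."""
    stamp = datetime.now(timezone.utc).isoformat()
    AUDIT_LOG.append({"event": event, "at": stamp, **payload})


def assess(txn: dict) -> Decision:
    """Flag a transaction and record which rules justified the flag."""
    fired = [r for r in RULES if r.predicate(txn)]
    decision = Decision(
        transaction_id=txn["id"],
        flagged=bool(fired),
        reasons=[f"{r.name}: {r.reason}" for r in fired],
        needs_human_review=len(fired) >= 2,  # escalate multi-rule hits
    )
    _log("assessment", asdict(decision))
    return decision


def override(decision: Decision, reviewer: str, flagged: bool, note: str) -> Decision:
    """Let a human expert overrule the automated result, with a logged reason."""
    _log("override", {"transaction_id": decision.transaction_id,
                      "reviewer": reviewer, "previous": decision.flagged,
                      "flagged": flagged, "note": note})
    decision.flagged = flagged
    decision.needs_human_review = False
    return decision


def flag_rate_by_group(txns: list, decisions: list, group_key: str) -> dict:
    """Compare flag rates across groups as a simple bias signal."""
    totals, flagged = {}, {}
    for txn, dec in zip(txns, decisions):
        group = txn[group_key]
        totals[group] = totals.get(group, 0) + 1
        flagged[group] = flagged.get(group, 0) + int(dec.flagged)
    return {g: flagged[g] / totals[g] for g in totals}


txns = [
    {"id": "T1", "amount": 25_000, "country": "XX", "segment": "retail"},
    {"id": "T2", "amount": 500, "country": "DE", "segment": "retail"},
    {"id": "T3", "amount": 12_000, "country": "DE", "segment": "corporate"},
]
decisions = [assess(t) for t in txns]
rates = flag_rate_by_group(txns, decisions, "segment")
```

Each `Decision` carries the reasons that triggered it, every assessment and override lands in `AUDIT_LOG`, and a large gap between the values in `rates` would be a prompt to investigate possible bias rather than proof of it.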

Hence, integrating explainable AI techniques with Generative AI ensures transparent, auditable, and justifiable decision-making for compliance applications, enhancing trust, accountability, and regulatory alignment.
