To run large deep learning models like GPT-3 on limited hardware, reduce their memory footprint and improve efficiency with model quantization, mixed precision (FP16) inference, and, when training or fine-tuning, gradient checkpointing.
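
To make the savings from lower precision concrete, here is a minimal pure-Python sketch. It is an illustration only, not how PyTorch or any quantization library implements it. It packs a few example weights as FP32, FP16 and INT8 with the standard `struct` module, applies a simple symmetric per-tensor INT8 scheme, and estimates weight memory for GPT-3's published size of 175 billion parameters. The helper names (`weight_memory_gb`, `quantize_int8`, `dequantize_int8`) and the example weights are hypothetical, and the estimate covers weights only, not activations or the attention cache.

```python
import struct

# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params, dtype):
    """Memory for the weights alone, in decimal GB (ignores activations and KV cache)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def quantize_int8(values):
    """Symmetric per-tensor INT8 quantization: q = round(x / scale), scale = max|x| / 127."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Clamp to [-127, 127] so the integer range stays symmetric around zero.
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize_int8(q, scale):
    """Map INT8 codes back to approximate floating-point values."""
    return [x * scale for x in q]


weights = [0.12, -0.5, 0.031, 0.9, -0.77, 0.0004]  # hypothetical example weights
n = len(weights)

# Pack the same weights at three precisions and compare their sizes in bytes.
fp32_bytes = len(struct.pack(f"<{n}f", *weights))
fp16_packed = struct.pack(f"<{n}e", *weights)  # 'e' = IEEE 754 half precision
fp16_values = list(struct.unpack(f"<{n}e", fp16_packed))
q, scale = quantize_int8(weights)
int8_bytes = len(struct.pack(f"<{n}b", *q))  # 'b' = signed 8-bit integer
int8_values = dequantize_int8(q, scale)

# Largest rounding error introduced by each lower-precision format.
fp16_err = max(abs(a - b) for a, b in zip(weights, fp16_values))
int8_err = max(abs(a - b) for a, b in zip(weights, int8_values))

print(fp32_bytes, len(fp16_packed), int8_bytes)  # 24 12 6
for dtype in BYTES_PER_PARAM:
    # fp32 700.0 GB, fp16 350.0 GB, int8 175.0 GB
    print(dtype, weight_memory_gb(175_000_000_000, dtype), "GB")
```

Each halving of the bytes per parameter halves weight memory, at the cost of a small, bounded rounding error. Real quantization toolkits typically use finer-grained scales (for example per channel) and calibration data, which this sketch omits.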

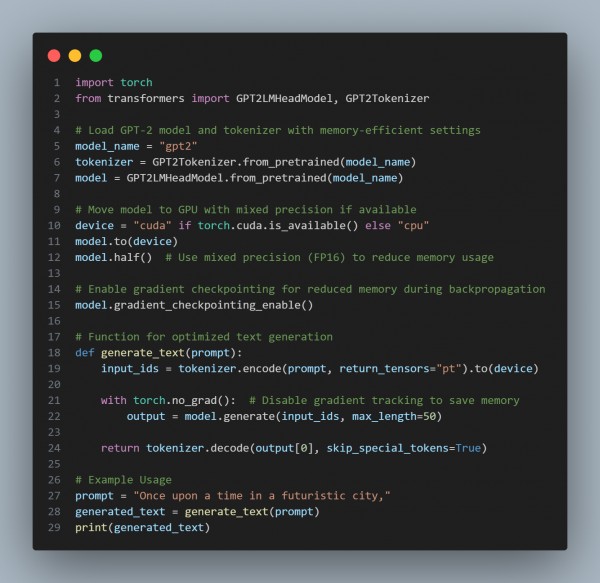

Here is the code snippet you can refer to:

The code above relies on the following key techniques:

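- Mixed Precision (FP16) – Uses model.half() to convert the weights to 16-bit floating point, roughly halving their memory compared with FP32, usually with little loss in output quality. (Strictly speaking, model.half() casts the whole model to FP16; true mixed precision keeps some operations in FP32, for example via torch.autocast.)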

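- Gradient Checkpointing – Calls gradient_checkpointing_enable() so that intermediate activations are recomputed during backpropagation instead of being stored, trading extra compute for lower memory. This only helps when training or fine-tuning; it has no effect on pure inference.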

- No-Gradient Mode – Wraps inference in torch.no_grad() so PyTorch does not build a computation graph or keep activations for a backward pass, avoiding unnecessary memory allocation.

- Efficient GPU Utilization – Moves the model and its inputs to a CUDA GPU when one is available, which makes processing considerably faster than on the CPU.

- Optimized Tokenization – Feeds the model tokenized input tensors directly, avoiding unnecessary computation.
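
The gradient checkpointing trade-off can be shown on a toy model. The sketch below is pure Python and assumes a hypothetical chain of scalar layers `tanh(w * x)` rather than a real network; it is not PyTorch's implementation (in PyTorch this is handled by `torch.utils.checkpoint`, and for Hugging Face models by `gradient_checkpointing_enable()`). It compares ordinary backpropagation, which keeps every activation, with a checkpointed version that keeps only every `segment`-th layer input and recomputes the rest during the backward pass. All function names and weights are illustrative.

```python
import math


def layer(w, x):
    """One toy layer: y = tanh(w * x)."""
    return math.tanh(w * x)


def layer_backward(w, x, y, grad_out):
    """Given input x, output y and dL/dy, return (dL/dx, dL/dw)."""
    local = grad_out * (1.0 - y * y)  # d tanh(z)/dz = 1 - tanh(z)^2, with z = w * x
    return local * w, local * x


def backward_full(weights, x0):
    """Standard backprop: store every activation during the forward pass."""
    acts = [x0]
    for w in weights:
        acts.append(layer(w, acts[-1]))
    # Loss L is the final output, so dL/dx_N = 1.
    grad, w_grads = 1.0, [0.0] * len(weights)
    for i in reversed(range(len(weights))):
        grad, w_grads[i] = layer_backward(weights[i], acts[i], acts[i + 1], grad)
    return grad, w_grads, len(acts)  # len(acts) = activations held at once


def backward_checkpointed(weights, x0, segment):
    """Store only every `segment`-th layer input; recompute the rest in backward."""
    checkpoints = {}
    x = x0
    for i, w in enumerate(weights):
        if i % segment == 0:
            checkpoints[i] = x
        x = layer(w, x)
    grad, w_grads = 1.0, [0.0] * len(weights)
    peak = len(checkpoints)
    for start in sorted(checkpoints, reverse=True):
        end = min(start + segment, len(weights))
        # Extra compute: rerun the forward pass for this segment only.
        acts = [checkpoints[start]]
        for i in range(start, end):
            acts.append(layer(weights[i], acts[-1]))
        # acts[0] is the checkpoint itself, so only len(acts) - 1 values are new.
        peak = max(peak, len(checkpoints) + len(acts) - 1)
        for i in reversed(range(start, end)):
            grad, w_grads[i] = layer_backward(
                weights[i], acts[i - start], acts[i - start + 1], grad
            )
    return grad, w_grads, peak


weights = [0.5 + 0.05 * i for i in range(16)]
g_full, wg_full, mem_full = backward_full(weights, 0.3)
g_ckpt, wg_ckpt, mem_ckpt = backward_checkpointed(weights, 0.3, segment=4)
print(mem_full, mem_ckpt)  # 17 8
```

Both versions produce the same gradients; the checkpointed one holds fewer activations at once, at the cost of a second forward pass over each segment. Because all of the savings come from the backward pass, checkpointing does nothing for inference under `torch.no_grad()`.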

Hence, on limited hardware, mixed precision and disabling gradients during inference keep the memory needed for text generation low, while gradient checkpointing reduces memory when the model is being trained or fine-tuned. Together they let large models run without excessive resource consumption.
