Inverse attention masking can help a generative AI model resolve ambiguity by down-weighting irrelevant or misleading context and emphasizing the tokens that disambiguate the input.

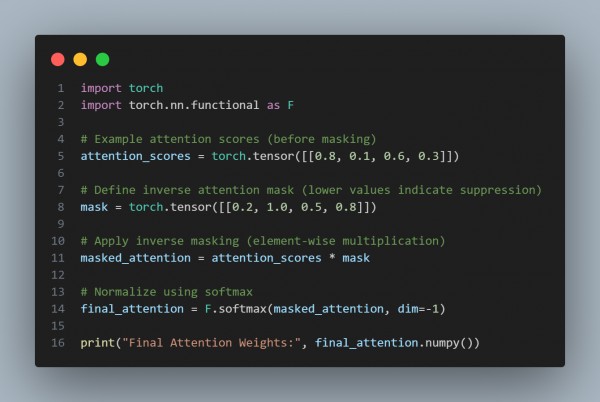

Here is the code snippet you can refer to:

The above code relies on the following key points:

- Ambiguity Suppression: Reduces the influence of misleading tokens.

- Context Refinement: Focuses on disambiguating elements in a sequence.

- Dynamic Adaptation: Adjusts attention weights based on learned patterns.

- Improved Precision: Enhances decision-making in generative models.
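The key points above can be illustrated with a minimal sketch. It assumes that "inverse attention masking" means subtracting a penalty from the attention logits in proportion to how irrelevant each token is (the inverse of a relevance score). The function name `inverse_masked_attention`, the `penalty` parameter, and the hand-set `relevance` values are hypothetical and chosen only for illustration; in a real model the relevance scores would be learned rather than written by hand.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def inverse_masked_attention(query, keys, relevance, penalty=4.0):
    """Return attention weights for one query over a list of key vectors.

    relevance: per-token score in [0, 1] (assumed given here; 1 = helps
    disambiguate, 0 = misleading). The inverse mask (1 - relevance) is
    scaled by `penalty` and subtracted from the scaled dot-product logits,
    so low-relevance tokens receive less attention after the softmax.
    """
    dim = len(query)
    logits = [
        sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
        for key in keys
    ]
    masked = [s - penalty * (1.0 - r) for s, r in zip(logits, relevance)]
    return softmax(masked)


# Toy example: resolving "bank" in a sentence about a river.
tokens = ["river", "money", "the", "bank"]
keys = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [0.7, 0.7]]
query = [1.0, 1.0]
relevance = [1.0, 0.0, 0.2, 1.0]  # hypothetical scores; "money" is misleading

plain = inverse_masked_attention(query, keys, relevance, penalty=0.0)
masked = inverse_masked_attention(query, keys, relevance)

for token, before, after in zip(tokens, plain, masked):
    print(f"{token:>6}: plain={before:.3f} masked={after:.3f}")
```

With `penalty=0.0` the function reduces to ordinary scaled dot-product attention, which gives a baseline for comparison. Using a soft additive penalty instead of a hard `-inf` mask keeps every token reachable, so the model can still recover if a relevance score is wrong.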

Hence, by applying the points above, you can optimize a generative AI model for ambiguity resolution.
