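FP16 (half precision) speeds up training and reduces memory usage, but its narrow range and limited precision make it prone to numerical instability (overflow and underflow) and rounding error. Mixed precision training, which keeps precision-sensitive values such as master weights and the loss in FP32, helps mitigate these issues.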
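A quick NumPy check makes these failure modes concrete. This is a minimal sketch using only NumPy's `float16` and `float32` scalar types; the specific values (70000, 1e-8, 2048, and an increment of 0.01) are illustrative choices, not taken from the original code.

```python
import numpy as np

# Suppress the RuntimeWarnings NumPy may emit for overflowing casts.
with np.errstate(over="ignore", under="ignore"):
    # Overflow: the largest finite float16 value is 65504, so 70000 becomes inf.
    overflow = np.float16(70000.0)

    # Underflow: the smallest positive float16 (subnormal) is 2**-24 (about 6e-8),
    # so a tiny gradient-like value such as 1e-8 rounds to zero.
    underflow = np.float16(1e-8)

# Precision loss: float16 has an 11-bit significand, so between 2048 and 4096
# representable values are 2 apart and adding 1 rounds back to 2048.
stalled = np.float16(2048.0) + np.float16(1.0)

# Accumulating many small updates: the float16 running sum stops growing once
# the spacing between float16 values is too coarse for a 0.01 step (at 32.0),
# while a float32 accumulator keeps growing to roughly 100.
acc16 = np.float16(0.0)
acc32 = np.float32(0.0)
for _ in range(10_000):
    acc16 = acc16 + np.float16(0.01)
    acc32 = acc32 + np.float32(0.01)

print(overflow, underflow, stalled, acc16, acc32)
```

The last comparison is the core argument for mixed precision: keeping accumulations and weight updates in FP32 avoids the stalled-sum problem while the bulk of the arithmetic can still run in FP16.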

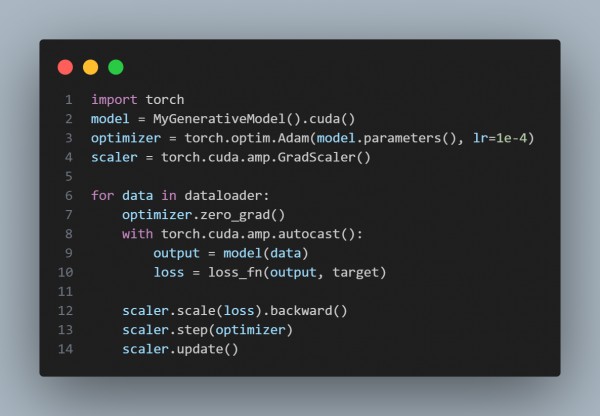

Here is the code snippet you can refer to:

The code above reflects the following benefits, risks, and techniques:

- Faster Training: On GPUs with Tensor Cores (NVIDIA Volta and later), FP16 matrix operations run substantially faster than their FP32 equivalents.

- Lower Memory Usage: FP16 values take 2 bytes instead of 4, freeing memory for larger models and batch sizes.

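- Numerical Instability: FP16's limited range (largest finite value 65,504) can cause overflow, while very small values such as gradients can underflow to zero.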

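- Mixed Precision Training: Runs most operations in FP16 while keeping FP32 master weights and precision-sensitive operations (such as the loss) in FP32 for stability.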

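- Gradient Scaling: Multiplies the loss by a scale factor before backpropagation so small gradients do not underflow in FP16, then divides the gradients by the same factor before the weight update.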
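The last two points can be sketched from scratch in NumPy to show the mechanics. This is a minimal sketch, not the code from the image: the toy linear-regression problem, learning rate, initial scale of 2^16, and growth interval of 200 steps are assumptions chosen for illustration. The forward and backward passes use FP16 data and weights, the loss and weight update use FP32, and a dynamic loss scale is halved whenever the FP16 gradients overflow and doubled after a run of clean steps.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy problem: recover w_true from y = X @ w_true.
X = rng.standard_normal((256, 8)).astype(np.float32)
w_true = rng.standard_normal(8).astype(np.float32)
y = X @ w_true
X16, y16 = X.astype(np.float16), y.astype(np.float16)  # FP16 copies of the data

w_master = np.zeros(8, dtype=np.float32)  # FP32 master weights
lr = 0.1                 # assumed learning rate
loss_scale = 2.0 ** 16   # assumed initial scale, halved on overflow
growth_interval = 200    # assumed: double the scale after this many clean steps
clean_steps = 0
skipped_steps = 0

# inf/nan in FP16 matmuls is expected when the scale is too large; we detect it below.
with np.errstate(over="ignore", invalid="ignore"):
    for step in range(500):
        # Forward pass with an FP16 copy of the master weights.
        w16 = w_master.astype(np.float16)
        err16 = X16 @ w16 - y16

        # Precision-sensitive reduction (the loss) is computed in FP32.
        loss = float(np.mean(err16.astype(np.float32) ** 2))

        # Backward pass of the *scaled* loss in FP16:
        # d(loss_scale * mean(err**2)) / dw = loss_scale * (2 / N) * X^T err
        upstream16 = np.float16(loss_scale * 2.0 / len(y16)) * err16
        grad16 = X16.T @ upstream16

        # Cast gradients to FP32; any inf/nan means the scale was too large.
        grads = grad16.astype(np.float32)
        if not np.all(np.isfinite(grads)):
            loss_scale /= 2.0   # back off and skip this update
            clean_steps = 0
            skipped_steps += 1
            continue

        # Unscale, then apply the update to the FP32 master weights.
        grads /= loss_scale
        w_master -= lr * grads

        clean_steps += 1
        if clean_steps % growth_interval == 0:
            loss_scale *= 2.0   # try a larger scale after a run of clean steps

print(f"final loss={loss:.2e}, loss_scale={loss_scale:g}, skipped={skipped_steps}")
```

In PyTorch, `torch.autocast` handles the FP16/FP32 casting and `torch.cuda.amp.GradScaler` implements the same halve-on-overflow, grow-after-N-clean-steps policy (its defaults are an initial scale of 2^16 and a growth interval of 2000 steps). NumPy does not use Tensor Cores, so this sketch illustrates the numerics only, not the speedup.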

Hence, by applying these techniques, you can use FP16 precision to train Generative AI models.
