To prevent dataset leakage in deepfake applications that use sensitive data, follow these steps:

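- Data Separation: Ensure that training, validation, and test datasets are strictly separated with no overlap. Split by subject identity rather than by individual image or frame, so that the same person's face never appears in more than one split.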

- Anonymization: Remove personally identifiable information (PII) from the dataset.

- Strict Access Control: Limit access to sensitive data and maintain robust logging mechanisms.

- Data Auditing: Regularly audit the dataset for potential leaks and ensure compliance with privacy regulations.
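The separation and auditing steps can be sketched in Python as follows. This is a minimal illustration, not the code from the original answer. It assumes each sample carries a hypothetical `subject_id` (the identity of the person shown) and its raw file bytes, assigns every subject to exactly one split by hashing that ID, and then audits the splits for shared subjects and byte-identical files.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    subject_id: str  # hypothetical field: identity of the person in the image/frame
    content: bytes   # raw file bytes


def assign_split(subject_id: str, val_frac: float = 0.1, test_frac: float = 0.1) -> str:
    """Deterministically map a subject to one split, so all of that
    subject's samples land in the same split (group-level split)."""
    digest = hashlib.sha256(subject_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # roughly uniform in [0, 1]
    if bucket < test_frac:
        return "test"
    if bucket < test_frac + val_frac:
        return "val"
    return "train"


def split_by_subject(samples):
    splits = {"train": [], "val": [], "test": []}
    for s in samples:
        splits[assign_split(s.subject_id)].append(s)
    return splits


def audit_leakage(splits):
    """Return a list of problems found; an empty list means no overlap detected."""
    problems = []
    names = list(splits)
    subjects = {n: {s.subject_id for s in splits[n]} for n in names}
    hashes = {n: {hashlib.sha256(s.content).hexdigest() for s in splits[n]} for n in names}
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            shared_ids = subjects[a] & subjects[b]
            shared_files = hashes[a] & hashes[b]
            if shared_ids:
                problems.append(f"{a}/{b} share {len(shared_ids)} subject(s)")
            if shared_files:
                problems.append(f"{a}/{b} share {len(shared_files)} identical file(s)")
    return problems


if __name__ == "__main__":
    # Toy data: 200 frames from 20 hypothetical people.
    samples = [Sample(f"person_{i % 20}", f"frame-{i}".encode()) for i in range(200)]
    splits = split_by_subject(samples)
    print({k: len(v) for k, v in splits.items()})
    print(audit_leakage(splits))  # -> []
```

Hashing the subject ID keeps each person's split assignment stable when new samples are added. The SHA-256 check only catches exact duplicates; re-encoded or resized frames of the same clip would need a perceptual hash or embedding comparison, which this sketch does not cover.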

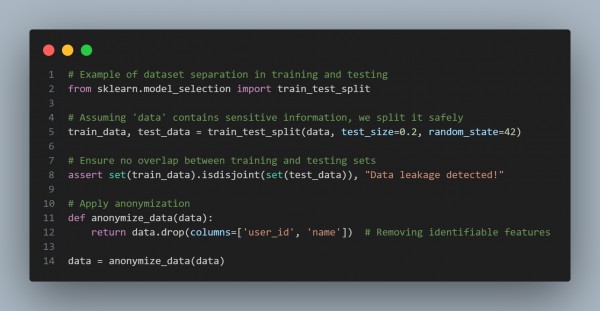

Here is the code snippet you can refer to:

The code above applies the following key points:

- Data Privacy: Anonymize sensitive features.

- Segmentation: Ensure strict data separation during model training and evaluation.

- Access Control: Limit access and enforce strict data management policies.
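A minimal Python sketch of the anonymization and access-control points, under stated assumptions: records are plain dicts, the field names in `PII_FIELDS` and the roles in `ALLOWED_ROLES` are hypothetical placeholders, and the HMAC key would come from a secret store rather than being hard-coded. Subject IDs are replaced with keyed HMAC-SHA256 tokens instead of being deleted, so samples of the same person can still be grouped when splitting the data.

```python
import hashlib
import hmac
import logging

logger = logging.getLogger("dataset_access")

# Hypothetical schema: direct identifiers to drop from each metadata record.
PII_FIELDS = {"name", "email", "phone", "gps"}
# Hypothetical roles permitted to read the (anonymized) dataset.
ALLOWED_ROLES = {"data-steward", "ml-engineer"}


def pseudonymize(value: str, key: bytes) -> str:
    """Keyed hash (HMAC-SHA256): the same input and key always give the same
    token, and without the key the token cannot practically be linked back."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def anonymize_record(record: dict, key: bytes) -> dict:
    """Drop direct identifiers and replace subject_id with a pseudonym."""
    clean = {k: v for k, v in record.items() if k not in PII_FIELDS}
    if "subject_id" in clean:
        clean["subject_id"] = pseudonymize(str(clean["subject_id"]), key)
    return clean


def load_records(records, user: str, role: str, key: bytes):
    """Check the caller's role, log the access attempt, return anonymized records."""
    if role not in ALLOWED_ROLES:
        logger.warning("denied dataset access: user=%s role=%s", user, role)
        raise PermissionError(f"role {role!r} may not read this dataset")
    logger.info("granted dataset access: user=%s role=%s n=%d", user, role, len(records))
    return [anonymize_record(r, key) for r in records]
```

Keeping the pseudonym consistent per person is what lets the subject-level split and overlap audit still work after anonymization.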

By applying the practices above, you can reduce the risk of dataset leakage when working with sensitive data in deepfake applications.
