Strategies to mitigate racial and gender bias in GANs that generate human faces include:

- Diverse Training Data: Include balanced representation of races, genders, and ethnicities.

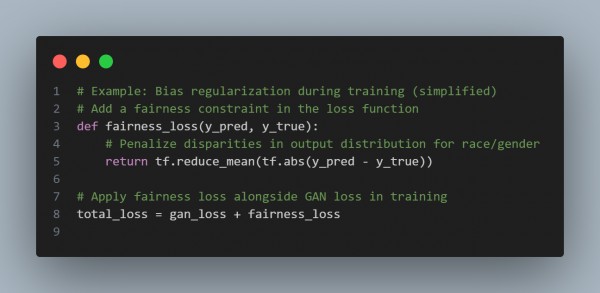

- Bias Regularization: Apply fairness constraints or adversarial debiasing during training.

- Data Augmentation: Use techniques like rotation and cropping to increase the number of training samples, particularly for underrepresented groups.

- Bias Detection: Use fairness metrics to evaluate and adjust outputs regularly.

- Post-Processing: Filter generated faces to meet fairness criteria.

Here is the code snippet you can refer to:
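This is a minimal sketch rather than a definitive implementation. It uses plain Python and assumes every image already has a demographic group label, which in practice would come from dataset annotations or an attribute classifier. The function names (`balancing_weights`, `representation_gap`, `balance_filter`) and the group labels `"A"`, `"B"`, `"C"` are hypothetical placeholders.

```python
import random
from collections import Counter


def balancing_weights(labels):
    """Diverse data: weight each sample by the inverse frequency of its group."""
    counts = Counter(labels)
    return [1.0 / counts[g] for g in labels]


def representation_gap(labels, groups=None):
    """Bias detection: largest minus smallest group share (0.0 = balanced)."""
    groups = list(groups) if groups is not None else sorted(set(labels))
    counts = Counter(labels)
    shares = [counts[g] / len(labels) for g in groups]
    return max(shares) - min(shares)


def balance_filter(samples, labels):
    """Post-processing: keep the same number of samples from every group."""
    by_group = {}
    for sample, group in zip(samples, labels):
        by_group.setdefault(group, []).append(sample)
    keep = min(len(items) for items in by_group.values())
    return [(s, g) for g, items in by_group.items() for s in items[:keep]]


if __name__ == "__main__":
    # Hypothetical group labels for ten training images (imbalanced on purpose).
    train_labels = ["A"] * 6 + ["B"] * 3 + ["C"] * 1
    weights = balancing_weights(train_labels)

    # Draw a training batch in which each group is equally likely to appear.
    rng = random.Random(0)
    batch = rng.choices(range(len(train_labels)), weights=weights, k=8)
    print("sampled indices:", batch)

    # Hypothetical labels predicted for a batch of generated faces.
    generated = [f"face_{i}" for i in range(10)]
    predicted = ["A", "A", "A", "A", "A", "B", "B", "B", "C", "C"]
    print("gap before filtering:", round(representation_gap(predicted), 3))

    kept = balance_filter(generated, predicted)
    print("gap after filtering:", representation_gap([g for _, g in kept]))
```

With a deep learning framework, the weights from `balancing_weights` would typically be passed to a weighted sampler in the data loader, and `representation_gap` would be tracked on generated batches over the course of training.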

The code above relies on the following key points:

- Diverse Data: Balance the dataset across races, genders, and ethnicities.

- Bias Detection: Regularly evaluate and adjust for fairness.

- Post-Processing: Apply fairness filters to generated faces.
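The bias regularization strategy listed earlier can be illustrated in a similar way. This is a rough sketch of one possible fairness penalty: the KL divergence between the average group distribution predicted for a generated batch and a uniform target, added to the generator's adversarial loss. `batch_probs` (per-image group probabilities from an attribute classifier), `adv_loss`, and the weight `lam` are all assumed, hypothetical inputs. A real GAN would compute this in an autodiff framework so that gradients reach the generator; plain Python is used here only to show the arithmetic.

```python
import math


def fairness_penalty(batch_probs, eps=1e-12):
    """KL(mean predicted group distribution || uniform) over a generated batch."""
    n = len(batch_probs)
    k = len(batch_probs[0])
    # Average the classifier's group probabilities over the batch.
    mean = [sum(p[j] for p in batch_probs) / n for j in range(k)]
    # KL divergence to the uniform distribution (each group has mass 1/k).
    return sum(m * math.log((m + eps) * k) for m in mean)


def generator_loss(adv_loss, batch_probs, lam=0.1):
    """Adversarial loss plus a weighted fairness penalty (lam is a tunable guess)."""
    return adv_loss + lam * fairness_penalty(batch_probs)


if __name__ == "__main__":
    balanced = [[0.9, 0.1], [0.1, 0.9]]   # batch covers both groups
    collapsed = [[1.0, 0.0], [1.0, 0.0]]  # batch contains only one group
    print("balanced penalty:", round(fairness_penalty(balanced), 6))
    print("collapsed penalty:", round(fairness_penalty(collapsed), 6))
    print("generator loss:", round(generator_loss(1.0, collapsed), 6))
```

The penalty is close to zero when the batch covers the groups evenly and grows as the generator collapses toward a single group, so minimizing it pushes the generator toward balanced output.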
