The main challenges in scaling generative AI for massive conversational datasets are as follows:

- Computational Costs: Training on large datasets requires significant computing power, memory, and storage.

- Model Overfitting: Risk of overfitting to the dataset, reducing generalization to unseen conversations.

- Data Quality: Ensuring the dataset is free of noise, bias, and redundancy is time-consuming but essential.

- Latency: Real-time conversational AI demands low-latency responses, which is challenging for large models.

- Fine-tuning: Balancing performance on specific conversational tasks with generalization across diverse topics.
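To make the data quality point concrete, here is a minimal sketch of removing redundancy and empty turns from conversation pairs before training. The function names (`normalize`, `clean_conversations`), the `min_tokens` threshold, and the sample data are illustrative assumptions; real pipelines usually add near-duplicate detection and bias checks on top of this.

```python
import hashlib
import re


def normalize(text):
    """Lowercase and collapse whitespace so trivial variants compare equal."""
    return re.sub(r"\s+", " ", text.strip().lower())


def clean_conversations(pairs, min_tokens=1):
    """Drop empty or too-short turns and exact duplicates after normalization."""
    seen = set()
    kept = []
    for prompt, reply in pairs:
        p, r = normalize(prompt), normalize(reply)
        # Skip pairs where either side has fewer than min_tokens tokens.
        if len(p.split()) < min_tokens or len(r.split()) < min_tokens:
            continue
        # Hash the normalized pair so the seen-set stays small on large corpora.
        key = hashlib.sha1(f"{p}\x1f{r}".encode("utf-8")).hexdigest()
        if key in seen:
            continue
        seen.add(key)
        kept.append((p, r))
    return kept


# Hypothetical sample data: one duplicate (differing only in case/spacing)
# and one pair with an empty prompt.
raw = [
    ("Hello there", "Hi, how can I help?"),
    ("hello   there ", "hi, how can i help?"),
    ("", "orphan reply"),
    ("Thanks", "You are welcome"),
]
cleaned = clean_conversations(raw)
```

Storing only hashes keeps memory use roughly constant per example, which matters when the corpus no longer fits comfortably in RAM.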

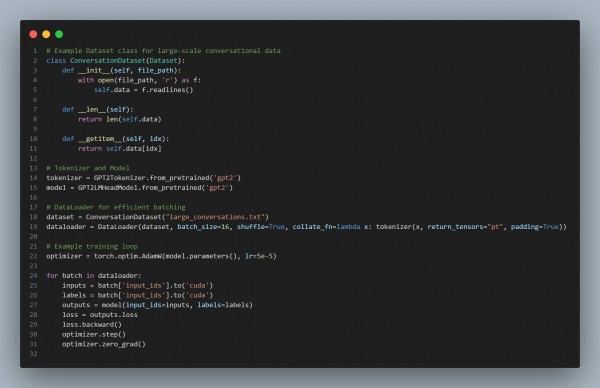

Here is the code snippet you can refer to:
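A minimal sketch of such a snippet, assuming PyTorch. The toy corpus, the `ConversationDataset` and `TinyLM` classes, and all hyperparameters are illustrative assumptions, not a production setup.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, Dataset

# Hypothetical toy corpus standing in for a massive conversational dataset.
CONVERSATIONS = [
    ("hello there", "hi how can i help"),
    ("what is the weather", "it is sunny today"),
    ("thanks a lot", "you are welcome"),
    ("see you later", "goodbye"),
]
PAD, UNK = 0, 1


def build_vocab(pairs):
    """Map each whitespace token to an integer id (0 = padding, 1 = unknown)."""
    vocab = {"<pad>": PAD, "<unk>": UNK}
    for prompt, reply in pairs:
        for token in f"{prompt} {reply}".split():
            vocab.setdefault(token, len(vocab))
    return vocab


class ConversationDataset(Dataset):
    """Yields one (prompt + reply) token-id sequence per conversation."""

    def __init__(self, pairs, vocab):
        self.pairs = pairs
        self.vocab = vocab

    def __len__(self):
        return len(self.pairs)

    def __getitem__(self, idx):
        prompt, reply = self.pairs[idx]
        ids = [self.vocab.get(t, UNK) for t in f"{prompt} {reply}".split()]
        return torch.tensor(ids, dtype=torch.long)


def collate(batch):
    """Pad variable-length sequences to the longest one in the batch."""
    return nn.utils.rnn.pad_sequence(batch, batch_first=True, padding_value=PAD)


class TinyLM(nn.Module):
    """Deliberately small next-token model with dropout as regularization."""

    def __init__(self, vocab_size, dim=32, dropout=0.1):
        super().__init__()
        self.embed = nn.Embedding(vocab_size, dim, padding_idx=PAD)
        self.rnn = nn.GRU(dim, dim, batch_first=True)
        self.drop = nn.Dropout(dropout)
        self.out = nn.Linear(dim, vocab_size)

    def forward(self, x):
        hidden, _ = self.rnn(self.embed(x))
        return self.out(self.drop(hidden))


vocab = build_vocab(CONVERSATIONS)
# Batching keeps only batch_size examples in the training step at a time.
loader = DataLoader(
    ConversationDataset(CONVERSATIONS, vocab),
    batch_size=2,
    shuffle=True,
    collate_fn=collate,
)
model = TinyLM(len(vocab))
# Weight decay is a second, optional regularizer alongside dropout.
optimizer = torch.optim.AdamW(model.parameters(), lr=1e-3, weight_decay=0.01)
loss_fn = nn.CrossEntropyLoss(ignore_index=PAD)  # padded positions are ignored

for epoch in range(2):
    for batch in loader:
        # Next-token prediction: inputs are tokens [0..T-2], targets [1..T-1].
        inputs, targets = batch[:, :-1], batch[:, 1:]
        logits = model(inputs)
        loss = loss_fn(logits.reshape(-1, logits.size(-1)), targets.reshape(-1))
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
```

For a genuinely large corpus, the in-memory list would typically be replaced by a dataset that reads examples lazily from disk, with `num_workers` set on the `DataLoader` so loading overlaps with training.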

The code above illustrates the following key points:

- Efficient Data Processing: Use DataLoader to handle massive datasets efficiently and at scale.

- Resource Optimization: Batch processing reduces memory overhead during training.

- Generalization: Proper dataset preparation and regularization mitigate overfitting.

Hence, by addressing these challenges, you can scale generative AI models effectively for massive conversational datasets.
