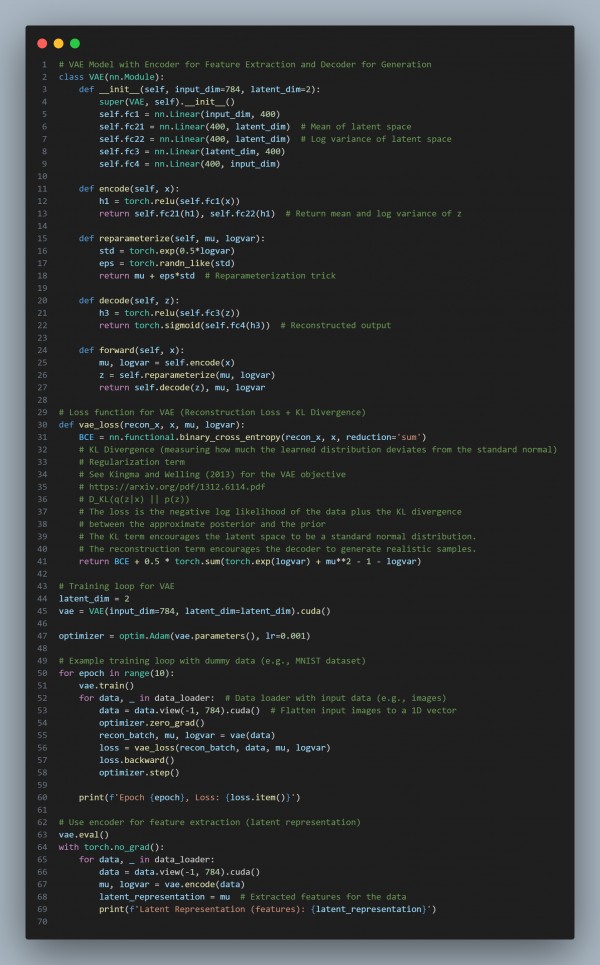

To leverage unsupervised learning in a Variational Autoencoder (VAE) for feature extraction while generating synthetic data, you can use the encoder network to learn useful latent representations of the input data. Here is the code snippet showing how:

The code above relies on the following key points:

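- Encoder for Feature Extraction: The encoder maps each input to the parameters (mean μ and log-variance) of a distribution over the latent space. The mean μ captures the underlying features of the input and can be used directly as a compact feature vector.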

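- Latent Sampling: During training, z is sampled with the reparameterization trick (z = μ + σ·ε, where ε ~ N(0, I)). To generate new, synthetic data, points are drawn from the prior N(0, I) and decoded, giving samples with features similar to those of the original data.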

- Decoder for Data Generation: The decoder reconstructs data from latent representations; once trained, it produces outputs that resemble the training data.

- Unsupervised Learning: The VAE is trained without labels by minimizing the sum of a reconstruction loss and the KL divergence between the encoder's distribution q(z|x) and a standard normal prior, which lets it learn meaningful features from unlabeled data.
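Since the original snippet is only available as an image, here is a minimal NumPy sketch of the same ideas for reference. It assumes a one-hidden-layer encoder and decoder with untrained random weights, toy uniform data, and hypothetical sizes (`INPUT_DIM`, `HIDDEN_DIM`, `LATENT_DIM`). It shows the forward pass, the loss (reconstruction + KL), feature extraction via μ, and generation from the prior. Gradients and the optimizer are omitted, so in practice you would train such a model with a framework like PyTorch or Keras.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes for this sketch
INPUT_DIM, HIDDEN_DIM, LATENT_DIM = 8, 16, 2

def init(shape):
    return rng.normal(0.0, 0.1, size=shape)

# Hypothetical, untrained parameters for a one-hidden-layer encoder and decoder
params = {
    "W_h": init((INPUT_DIM, HIDDEN_DIM)), "b_h": np.zeros(HIDDEN_DIM),
    "W_mu": init((HIDDEN_DIM, LATENT_DIM)), "b_mu": np.zeros(LATENT_DIM),
    "W_lv": init((HIDDEN_DIM, LATENT_DIM)), "b_lv": np.zeros(LATENT_DIM),
    "W_d": init((LATENT_DIM, HIDDEN_DIM)), "b_d": np.zeros(HIDDEN_DIM),
    "W_out": init((HIDDEN_DIM, INPUT_DIM)), "b_out": np.zeros(INPUT_DIM),
}

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def encode(x, p):
    """Map inputs to the mean and log-variance of q(z|x)."""
    h = np.tanh(x @ p["W_h"] + p["b_h"])
    return h @ p["W_mu"] + p["b_mu"], h @ p["W_lv"] + p["b_lv"]

def reparameterize(mu, log_var):
    """Reparameterization trick: z = mu + sigma * eps, with eps ~ N(0, I)."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps

def decode(z, p):
    """Map latent codes back to data space; outputs lie in (0, 1)."""
    h = np.tanh(z @ p["W_d"] + p["b_d"])
    return sigmoid(h @ p["W_out"] + p["b_out"])

def kl_divergence(mu, log_var):
    """Closed-form KL(N(mu, sigma^2) || N(0, I)), one value per example."""
    return -0.5 * np.sum(1 + log_var - mu**2 - np.exp(log_var), axis=1)

def vae_loss(x, x_hat, mu, log_var):
    """Negative ELBO averaged over the batch: binary cross-entropy + KL."""
    x_hat = np.clip(x_hat, 1e-7, 1 - 1e-7)  # avoid log(0)
    recon = -np.sum(x * np.log(x_hat) + (1 - x) * np.log(1 - x_hat), axis=1)
    return float(np.mean(recon + kl_divergence(mu, log_var)))

# Unlabeled toy data in [0, 1] (a stand-in for a real dataset)
x = rng.uniform(0.0, 1.0, size=(32, INPUT_DIM))

# One forward pass of the training objective (no gradient step here)
mu, log_var = encode(x, params)
z = reparameterize(mu, log_var)
x_hat = decode(z, params)
loss = vae_loss(x, x_hat, mu, log_var)

# Feature extraction: the posterior mean is a deterministic embedding
features = mu

# Synthetic data: sample latent points from the prior N(0, I) and decode them
synthetic = decode(rng.standard_normal((5, LATENT_DIM)), params)

print(features.shape, synthetic.shape, round(loss, 3))
```

Using μ rather than a sampled z as the feature vector is a common choice because it is deterministic: the same input always maps to the same embedding, which suits downstream tasks such as clustering or classification.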

In short, a trained VAE gives you both: the encoder's latent representations serve as extracted features, and the decoder turns latent samples into synthetic data, all learned without labels.