To add gradient penalty (GP) regularization to a Julia-based generative model, add a penalty term to the critic (discriminator) loss, as is done in Wasserstein GANs with gradient penalty (WGAN-GP).
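For reference, the original WGAN-GP formulation writes the critic loss as the Wasserstein term plus a penalty evaluated on random interpolations $\hat{x}$ between real samples $x$ and generated samples $\tilde{x}$. The default coefficient there is $\lambda = 10$:

$$
L_D = \mathbb{E}_{\tilde{x} \sim \mathbb{P}_g}\big[D(\tilde{x})\big] - \mathbb{E}_{x \sim \mathbb{P}_r}\big[D(x)\big] + \lambda\, \mathbb{E}_{\hat{x}}\Big[\big(\lVert \nabla_{\hat{x}} D(\hat{x}) \rVert_2 - 1\big)^2\Big],
\qquad \hat{x} = \epsilon x + (1 - \epsilon)\,\tilde{x},\quad \epsilon \sim U[0, 1]
$$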

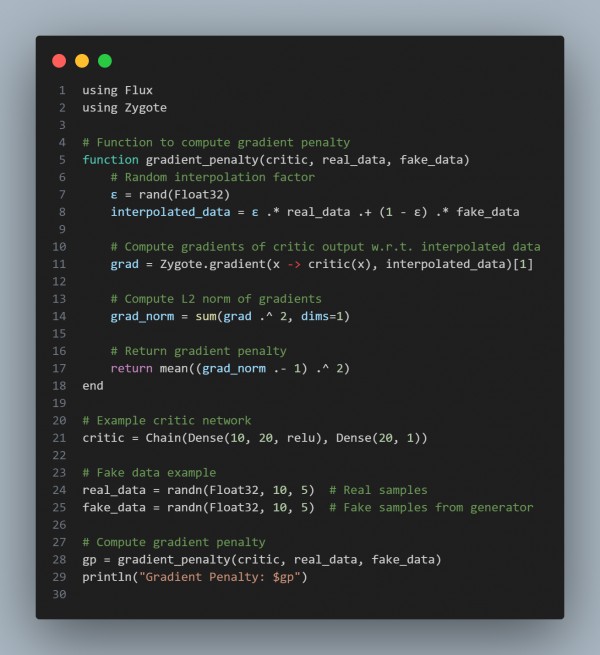

Here is the code snippet you can refer to:

The code above relies on three steps:

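- Interpolated data: Mixes each real sample with a fake sample using a random factor ε drawn uniformly from [0, 1], i.e. x̂ = ε·x_real + (1 − ε)·x_fake.

- Gradient computation: Uses Zygote to compute the gradient of the critic's output with respect to the interpolated samples.

- Gradient penalty: Penalizes any deviation of each sample's gradient norm from 1, scaled by a coefficient λ. This softly enforces the 1-Lipschitz constraint on the critic and keeps GAN training stable.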
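The steps above can be sketched as a single Julia function. This is a minimal sketch, not the code from the screenshot. It assumes that the Zygote package is installed, that samples are stored column-wise (features × batch), and that the critic maps such a matrix to a 1 × batch output without mixing samples (for example, no batch normalization). The function name `gradient_penalty` and the linear critic in the usage part are hypothetical.

```julia
using Zygote      # reverse-mode AD package (install with `] add Zygote`)
using Statistics  # mean
using Random      # rand, randn, Random.seed!

"""
    gradient_penalty(critic, x_real, x_fake; λ = 10f0)

Hypothetical helper: returns λ * mean((‖∇x̂ critic(x̂)‖₂ - 1)^2).
Assumes size(x) == (n_features, batch_size) and that `critic` returns a
(1, batch_size) output, treating each sample (column) independently.
"""
function gradient_penalty(critic, x_real::AbstractMatrix, x_fake::AbstractMatrix; λ = 10f0)
    batch = size(x_real, 2)
    # One interpolation factor ε ~ U[0, 1] per sample, broadcast over features
    ε = rand(eltype(x_real), 1, batch)
    x̂ = ε .* x_real .+ (1 .- ε) .* x_fake
    # Because samples are independent, the gradient of the summed critic
    # output holds each sample's own gradient in its column
    g = Zygote.gradient(x -> sum(critic(x)), x̂)[1]
    # L2 norm of each column, i.e. one gradient norm per sample
    norms = sqrt.(sum(abs2, g; dims = 1))
    return λ * mean((norms .- 1) .^ 2)
end

# Usage with a hypothetical linear critic D(x) = w'x
Random.seed!(0)
w = Float32[3, 4]              # ‖w‖ = 5
critic = x -> w' * x           # 1×batch row of critic scores
x_real = randn(Float32, 2, 8)
x_fake = randn(Float32, 2, 8)
gp = gradient_penalty(critic, x_real, x_fake)
# A linear critic has gradient w at every point, so gp = 10 * (5 - 1)^2 = 160
println(gp)
```

To train the critic, add this term to the critic loss and differentiate with respect to the critic's parameters. That requires Zygote to differentiate through its own `gradient` call (second-order AD). This works for many models but can fail for some layers, so verify it on your own critic.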

By adding this penalty to the critic's loss, you can apply gradient penalty regularization to Julia-based generative models.
