To implement style embeddings in a generative model for artistic style transfer, encode the style image into a latent embedding and condition the generator on that embedding. Related ideas appear in StyleGAN, which injects a style vector into the generator's layers, and in Neural Style Transfer variants such as AdaIN.

Here are the steps you can follow:

- Define the Style Encoder: Use a CNN to extract style embeddings from style images.

- Condition the Generator on Style Embeddings: Modify the generator to accept style embeddings.

- Training Loop: Train the style encoder and generator together.

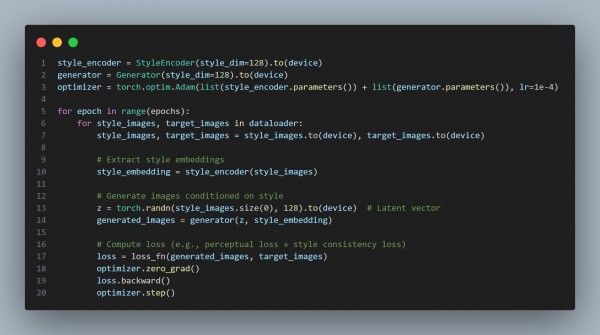

Here is the code snippet you can refer to:
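What follows is a minimal PyTorch sketch of the three steps, not a definitive implementation. It assumes 64x64 RGB images scaled to [-1, 1], a 100-dimensional noise vector, and a 64-dimensional style embedding. The layer sizes, the `channel_stats_loss` helper, and the random placeholder batch are illustrative assumptions; the helper is only a crude stand-in for a real style loss.

```python
import torch
import torch.nn as nn


class StyleEncoder(nn.Module):
    """Maps a style image (B, 3, 64, 64) to a style embedding (B, style_dim)."""

    def __init__(self, style_dim=64):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(3, 32, kernel_size=4, stride=2, padding=1),   # 64 -> 32
            nn.ReLU(inplace=True),
            nn.Conv2d(32, 64, kernel_size=4, stride=2, padding=1),  # 32 -> 16
            nn.ReLU(inplace=True),
            nn.AdaptiveAvgPool2d(1),  # global pooling drops spatial layout
        )
        self.fc = nn.Linear(64, style_dim)

    def forward(self, style_img):
        return self.fc(self.features(style_img).flatten(1))


class Generator(nn.Module):
    """Synthesizes an image from latent noise concatenated with a style embedding."""

    def __init__(self, latent_dim=100, style_dim=64):
        super().__init__()
        self.fc = nn.Linear(latent_dim + style_dim, 128 * 8 * 8)
        self.net = nn.Sequential(
            nn.ConvTranspose2d(128, 64, kernel_size=4, stride=2, padding=1),  # 8 -> 16
            nn.ReLU(inplace=True),
            nn.ConvTranspose2d(64, 32, kernel_size=4, stride=2, padding=1),   # 16 -> 32
            nn.ReLU(inplace=True),
            nn.ConvTranspose2d(32, 3, kernel_size=4, stride=2, padding=1),    # 32 -> 64
            nn.Tanh(),  # outputs in [-1, 1]
        )

    def forward(self, z, style):
        x = self.fc(torch.cat([z, style], dim=1)).view(-1, 128, 8, 8)
        return self.net(x)


def channel_stats_loss(generated, style_img):
    """Hypothetical stand-in loss: match per-channel mean and std of the two images."""
    g_mean, g_std = generated.mean(dim=(2, 3)), generated.std(dim=(2, 3))
    s_mean, s_std = style_img.mean(dim=(2, 3)), style_img.std(dim=(2, 3))
    return ((g_mean - s_mean) ** 2).mean() + ((g_std - s_std) ** 2).mean()


encoder, generator = StyleEncoder(), Generator()
# One optimizer over both modules, so encoder and generator train together.
optimizer = torch.optim.Adam(
    list(encoder.parameters()) + list(generator.parameters()), lr=2e-4
)

# Placeholder data: random tensors stand in for a real batch of style images.
style_batch = torch.rand(4, 3, 64, 64) * 2 - 1
z = torch.randn(4, 100)

# One training step: encode style, generate, compute loss, update both modules.
style_emb = encoder(style_batch)
fake = generator(z, style_emb)
loss = channel_stats_loss(fake, style_batch)
optimizer.zero_grad()
loss.backward()
optimizer.step()
```

In a real setup the placeholder batch would come from a `DataLoader` over style images, and the stand-in loss would be replaced by a perceptual or Gram matrix loss computed on features from a pretrained network.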

Key points of the above code:

- The StyleEncoder extracts style-specific features from a style image.

- The Generator uses both latent noise and style embedding to synthesize styled images.

- Loss functions guide training: a perceptual (feature) loss helps preserve content, while a Gram matrix loss matches the style statistics of the style image.
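The Gram matrix loss mentioned in the last point can be sketched as follows. This is a minimal illustration under assumptions: the function names `gram_matrix` and `gram_style_loss` are hypothetical, the normalization by `c * h * w` is one common choice among several, and random tensors stand in for feature maps that would normally come from a pretrained network such as VGG.

```python
import torch


def gram_matrix(features):
    """Channel-by-channel correlations of a feature map (B, C, H, W) -> (B, C, C)."""
    b, c, h, w = features.shape
    flat = features.reshape(b, c, h * w)
    # Normalizing by the number of elements keeps the scale independent of map size.
    return torch.bmm(flat, flat.transpose(1, 2)) / (c * h * w)


def gram_style_loss(generated_feats, style_feats):
    """Mean squared difference between the Gram matrices of two feature maps."""
    return torch.mean((gram_matrix(generated_feats) - gram_matrix(style_feats)) ** 2)


# Placeholder feature maps; real ones would be activations of a pretrained network.
style_feats = torch.randn(2, 8, 16, 16)
generated_feats = torch.randn(2, 8, 16, 16, requires_grad=True)

loss = gram_style_loss(generated_feats, style_feats)
loss.backward()  # gradients flow back toward whatever produced generated_feats
```

Because the Gram matrix sums over spatial positions, it captures which feature channels co-occur regardless of where they appear, which is why it is used as a style (texture) descriptor rather than a content one.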

Hence, by following the steps above, you can implement style embeddings in a generative model to transfer artistic styles to new images.

Related Post: How to implement style transfer in generative models using Python libraries
