
LangChain is a dynamic framework designed to supercharge the potential of Large Language Models (LLMs) by seamlessly integrating them with tools, APIs, and memory. It empowers developers to craft intelligent and context-aware applications, from conversational AI to workflow automation. With its modular design and versatile capabilities, LangChain transforms static LLMs into powerful engines for innovation. Whether you’re building for creativity, analytics, or automation, LangChain is your key to unlocking AI’s full potential.

So, without further ado, let's start with the first question: what is LangChain?

LangChain is an open-source framework for building applications powered by large language models (LLMs). It provides the tools and abstractions you need to connect LLMs to external data sources, run multi-step processes, and extend applications with memory, chains, and agents. LangChain is widely used for chatbots, question answering, document analysis, and workflow automation.

Now that we know what LangChain is, let's see why it is important.

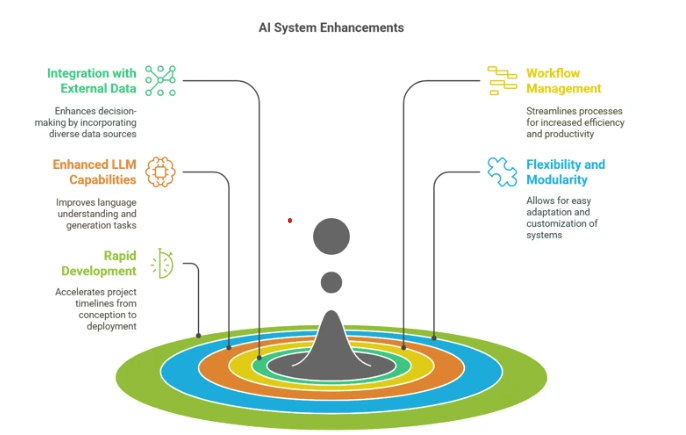

LangChain is important because it makes it easier to build complex applications on top of large language models (LLMs). Some key reasons are:

1. Workflow Management: It supports multi-step processes and chains, which makes it easier to set up complex workflows such as sequential reasoning, decision-making, and multi-turn dialogues.

2. Enhanced LLM Capabilities: Memory lets an LLM keep track of earlier parts of a conversation, and agents let it call external tools and data sources, which makes applications more useful and interactive.

3. Flexibility and Modularity: LangChain's modular design lets developers swap components, connect them to other systems, and experiment with different setups.

4. Rapid Development: LangChain provides pre-built components and abstractions, which cuts down the time and effort needed to build complex AI applications.

Now that you understand why LangChain is important, let's look at its integrations.

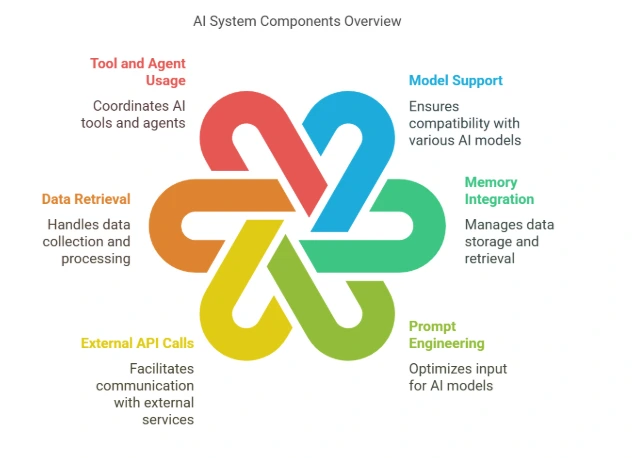

LangChain integrates with a wide range of models and services so that LLMs can be used effectively in many different situations. Important points for integration are:

1. Model Support: LangChain can be connected to many LLM providers, such as OpenAI and Hugging Face.

Because of these integrations, LangChain is a strong framework for extending what LLMs can do in real-world situations.

Now that we have a clear understanding of LangChain, let's explore how it works.

LangChain works by giving developers a framework for building apps that combine large language models (LLMs) with extra capabilities such as memory, access to external data, and multi-step workflows. Here is an overview of how it works:

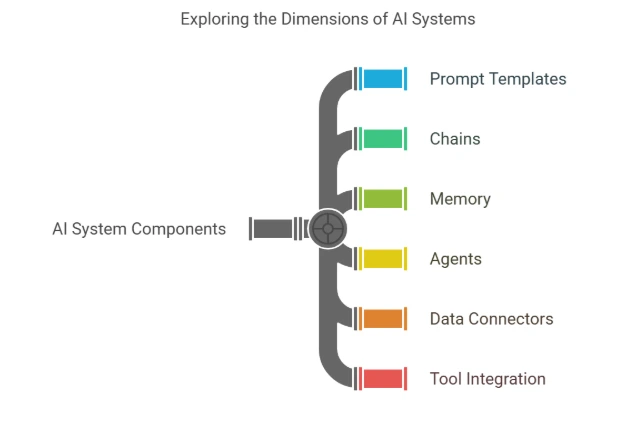

Core Components of LangChain

The diagram above illustrates the LangChain workflow.

LangChain essentially bridges the gap between raw LLM capabilities and real-world application needs, making it a versatile tool for leveraging generative AI effectively.

Let's now dive deeper into the benefits of LangChain.

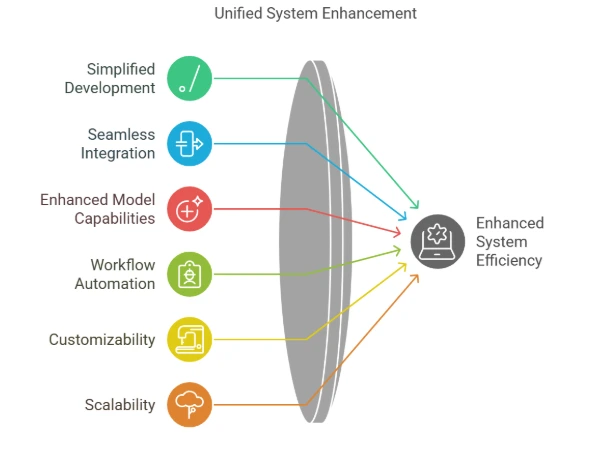

Many useful features make LangChain an important tool for developers who need to make apps that use large language models (LLMs). Here are some of its main benefits:

Simplified Development

Now that you know the benefits of LangChain, let's explore its features.

LangChain offers many features for building a wide range of powerful LLM-based apps.

Since we have already looked at LangChain's integrations, let's dive deeper into them.

LangChain works with many different systems, tools, and services to make large language models (LLMs) more useful in real-life situations. With these integrations, LangChain can easily get data, run jobs, and talk to other systems.

Let's now explore how to create prompts in LangChain.

LangChain offers tools for creating, managing, and optimizing prompts to interact effectively with LLMs. Here’s a quick guide to creating prompts:

You can create dynamic prompts with placeholders for input variables using the PromptTemplate class.

```python
from langchain.prompts import PromptTemplate

# Create a prompt template
template = """
You are an expert in {domain}. Answer the question concisely:
Question: {question}
Answer:"""

prompt = PromptTemplate(
    input_variables=["domain", "question"],  # Define placeholders
    template=template,
)

# Format the prompt with specific inputs
formatted_prompt = prompt.format(domain="Physics", question="What is Newton's second law?")
print(formatted_prompt)
```
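PromptTemplate treats each {name} in the template text as an input variable. The library-free sketch below shows roughly how those placeholder names can be extracted and checked before formatting, using Python's string.Formatter. The helpers template_variables and fill are hypothetical, not LangChain functions, although PromptTemplate.from_template infers input_variables from a template in a broadly similar way.

```python
from string import Formatter

template = """
You are an expert in {domain}. Answer the question concisely:
Question: {question}
Answer:"""


def template_variables(text: str) -> list[str]:
    """Return placeholder names in the order they first appear (hypothetical helper)."""
    names = []
    for _, field_name, _, _ in Formatter().parse(text):
        # field_name is None for trailing literal text
        if field_name and field_name not in names:
            names.append(field_name)
    return names


def fill(text: str, **values: str) -> str:
    """Format the template, failing early if any placeholder has no value."""
    missing = set(template_variables(text)) - set(values)
    if missing:
        raise KeyError(f"Missing values for: {sorted(missing)}")
    return text.format(**values)


print(template_variables(template))  # ['domain', 'question']
print(fill(template, domain="Physics", question="What is Newton's second law?"))
```

Checking for missing values up front produces a clearer error message than the bare KeyError that str.format raises on its own.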

You can chain or combine multiple prompts for complex workflows.

```python
from langchain.prompts import PromptTemplate

# Define individual templates
intro_prompt = PromptTemplate(
    input_variables=["topic"],
    template="Provide a brief introduction to {topic}.",
)
detailed_prompt = PromptTemplate(
    input_variables=["details"],
    template="Explain the details: {details}",
)

# Use the formatted prompts together
formatted_intro = intro_prompt.format(topic="Quantum Mechanics")
formatted_details = detailed_prompt.format(details="wave-particle duality")
print(formatted_intro)
print(formatted_details)
```

For better model guidance, you can include examples in your prompt.

```python
from langchain.prompts import FewShotPromptTemplate, PromptTemplate

# Define examples
examples = [
    {"input": "What is AI?", "output": "AI stands for Artificial Intelligence."},
    {"input": "Define ML.", "output": "ML stands for Machine Learning."},
]

# Create the template used to format each example
example_formatter = PromptTemplate(
    input_variables=["input", "output"],
    template="Q: {input}\nA: {output}",
)

# Few-shot prompt: prefix, formatted examples, then the suffix with the new question
few_shot_prompt = FewShotPromptTemplate(
    examples=examples,
    example_prompt=example_formatter,
    prefix="Answer the following questions:",
    suffix="Q: {new_question}\nA:",
    input_variables=["new_question"],
)

# Format the prompt
formatted_few_shot_prompt = few_shot_prompt.format(new_question="What is Deep Learning?")
print(formatted_few_shot_prompt)
```
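A few-shot prompt is assembled by formatting every example with the same per-example template and joining the prefix, the formatted examples, and the suffix. The library-free sketch below reproduces that assembly; build_few_shot_prompt is a hypothetical helper, and the blank-line separator is an assumption meant to mirror what we understand to be FewShotPromptTemplate's default example_separator.

```python
examples = [
    {"input": "What is AI?", "output": "AI stands for Artificial Intelligence."},
    {"input": "Define ML.", "output": "ML stands for Machine Learning."},
]


def build_few_shot_prompt(prefix, examples, example_template, suffix, separator="\n\n", **inputs):
    """Hypothetical helper: prefix + formatted examples + formatted suffix."""
    # Format every example with the same per-example template
    formatted_examples = [example_template.format(**ex) for ex in examples]
    # Fill the suffix with the new input, then join all parts with the separator
    parts = [prefix, *formatted_examples, suffix.format(**inputs)]
    return separator.join(parts)


prompt_text = build_few_shot_prompt(
    "Answer the following questions:",
    examples,
    "Q: {input}\nA: {output}",
    "Q: {new_question}\nA:",
    new_question="What is Deep Learning?",
)
print(prompt_text)
```

Ending the prompt with an open "A:" nudges the model to continue in the same question-and-answer pattern as the examples.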

For models like OpenAI’s GPT-4, you can define chat-specific prompts.

```python
from langchain.prompts import ChatPromptTemplate, SystemMessagePromptTemplate, HumanMessagePromptTemplate

# Define chat-specific message types
system_message = SystemMessagePromptTemplate.from_template("You are a helpful assistant.")
human_message = HumanMessagePromptTemplate.from_template("Can you explain {concept}?")

# Create a chat prompt template
chat_prompt = ChatPromptTemplate.from_messages([system_message, human_message])

# Format the chat prompt into a list of messages
formatted_chat_prompt = chat_prompt.format_messages(concept="Machine Learning")
for message in formatted_chat_prompt:
    print(f"{message.type}: {message.content}")
```
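Unlike a plain prompt, a chat prompt is a list of role-tagged messages. The library-free sketch below formats (role, template) pairs into the role/content dictionaries used by OpenAI-style chat APIs. The ROLE_MAP from LangChain-style role names ("human", "ai") to OpenAI roles ("user", "assistant") and the format_chat helper are assumptions for illustration.

```python
# Hypothetical message templates as (role, template) pairs
chat_template = [
    ("system", "You are a helpful assistant."),
    ("human", "Can you explain {concept}?"),
]

# Assumed mapping from LangChain-style role names to OpenAI-style roles
ROLE_MAP = {"system": "system", "human": "user", "ai": "assistant"}


def format_chat(template, **values):
    """Fill each message template and tag it with its chat role."""
    return [
        {"role": ROLE_MAP[role], "content": text.format(**values)}
        for role, text in template
    ]


messages = format_chat(chat_template, concept="Machine Learning")
for m in messages:
    print(f"{m['role']}: {m['content']}")
```

Keeping the system instruction in its own message lets you change the assistant's behavior without touching the user-facing template.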

LangChain’s prompt creation tools simplify the process of designing effective and reusable prompts for generative AI applications.

Now that you have a clear understanding of LangChain, let's see how to develop applications with it.

By combining different parts like LLMs, prompt management, chains, memory, and external tools, LangChain gives you a way to make powerful and scalable apps. Here is a step-by-step guide on how to use LangChain to create apps.

Make sure you have all of LangChain's requirements installed before you start.

```bash
pip install langchain langchain-community openai
```

You may also need to install extra libraries for specific integrations, such as faiss-cpu for the FAISS vector store or packages for external APIs.

LangChain is made up of different parts, such as memory, agents, prompts, and chains, and you can structure your application around these parts.

Use PromptTemplate to dynamically generate prompts based on user input.

```python
from langchain.prompts import PromptTemplate

template = "Translate the following text to French: {text}"
prompt = PromptTemplate(input_variables=["text"], template=template)

formatted_prompt = prompt.format(text="Hello, how are you?")
print(formatted_prompt)
```

Chains enable you to define workflows by combining multiple steps (e.g., prompt generation, querying models, postprocessing).

```python
from langchain.chains import LLMChain
from langchain.llms import OpenAI

# Define the LLM (an OpenAI completion model)
llm = OpenAI(model="gpt-3.5-turbo-instruct")

# Use LLMChain to combine the LLM with the translation prompt defined above
llm_chain = LLMChain(prompt=prompt, llm=llm)

# Run the chain; the single argument fills the {text} placeholder
result = llm_chain.run("How are you?")
print(result)
```

Use memory to store context across interactions, like in chatbots. LangChain supports short-term and long-term memory.

```python
from langchain.memory import ConversationBufferMemory
from langchain.chains import ConversationChain

# Initialize memory
memory = ConversationBufferMemory()

# Create a conversation chain with memory (reuses the llm defined above)
conversation_chain = ConversationChain(llm=llm, memory=memory)

# Simulate a conversation
response_1 = conversation_chain.predict(input="Hello, how are you?")
response_2 = conversation_chain.predict(input="What is my previous question?")
print(response_1)
print(response_2)
```
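A buffer memory works by storing each turn verbatim and inserting the whole history into the next prompt, which is how the model can answer "What is my previous question?". The sketch below is a library-free illustration; BufferMemory and build_prompt are hypothetical names, and the "Human"/"AI" prefixes are assumed to match ConversationBufferMemory's defaults.

```python
class BufferMemory:
    """Toy conversation buffer: stores every turn verbatim (hypothetical class)."""

    def __init__(self, human_prefix: str = "Human", ai_prefix: str = "AI"):
        self.human_prefix = human_prefix
        self.ai_prefix = ai_prefix
        self.turns: list[tuple[str, str]] = []

    def save(self, user_input: str, ai_output: str) -> None:
        self.turns.append((user_input, ai_output))

    def history(self) -> str:
        lines = []
        for user_input, ai_output in self.turns:
            lines.append(f"{self.human_prefix}: {user_input}")
            lines.append(f"{self.ai_prefix}: {ai_output}")
        return "\n".join(lines)


def build_prompt(memory: BufferMemory, new_input: str) -> str:
    # The full history is prepended to every new request
    history = memory.history()
    current = f"{memory.human_prefix}: {new_input}\n{memory.ai_prefix}:"
    return f"{history}\n{current}" if history else current


memory = BufferMemory()
memory.save("Hello, how are you?", "I'm doing well, thanks!")
print(build_prompt(memory, "What is my previous question?"))
```

Because the entire history is resent on every turn, the prompt grows with the conversation; LangChain's windowed and summary memories (for example ConversationBufferWindowMemory and ConversationSummaryMemory) exist to keep that growth in check.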

Agents allow LangChain to make decisions about which tools to use (e.g., APIs, databases, web scraping, etc.).

```python
from langchain.agents import initialize_agent, Tool, AgentType

# Define tools (e.g., calculator, weather API)
tools = [
    Tool(
        name="Calculator",
        # eval() runs arbitrary Python: use it only for demos with trusted input
        func=lambda x: str(eval(x)),
        description="Performs basic arithmetic operations",
    )
]

# Initialize the agent (the agent type is passed via the `agent` parameter)
agent = initialize_agent(tools, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True)

# Execute the agent with a task
agent_response = agent.run("What is 2 + 2?")
print(agent_response)
```
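Passing model-generated text straight to eval() lets the model run arbitrary Python, so a calculator tool is safer if it accepts only arithmetic. Here is a minimal sketch using Python's ast module; safe_calculate is a hypothetical helper that you could pass as func=safe_calculate in the Tool definition above instead of the eval lambda.

```python
import ast
import operator

# Only these arithmetic operators are allowed
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.USub: operator.neg,
}


def safe_calculate(expression: str) -> str:
    """Evaluate a basic arithmetic expression without calling eval()."""

    def _eval(node):
        if isinstance(node, ast.Expression):
            return _eval(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.operand))
        # Function calls, names, attributes, etc. are rejected
        raise ValueError(f"Unsupported expression: {expression!r}")

    return str(_eval(ast.parse(expression, mode="eval")))


print(safe_calculate("2 + 2"))        # 4
print(safe_calculate("(3 + 4) * 2"))  # 14
```

The tool returns a string because the agent feeds the result back to the model as text.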

LangChain can connect to outside tools, like databases, APIs, and document loaders, so it can get data in real time.

```python
from langchain.document_loaders import WebBaseLoader

# Load documents from a web page (requires the beautifulsoup4 package)
loader = WebBaseLoader("https://www.example.com")
documents = loader.load()

# Process the page text with the chain defined above
result = llm_chain.run(documents[0].page_content)
print(result)
```

To search the loaded documents by meaning, you can index them in a vector store:

```python
from langchain.vectorstores import FAISS
from langchain.embeddings import OpenAIEmbeddings

# Initialize the vector store with embeddings (requires the faiss-cpu package)
embeddings = OpenAIEmbeddings()
vectorstore = FAISS.from_documents(documents, embeddings)

# Query the vector store for the most similar documents
query_result = vectorstore.similarity_search("What is AI?")
print(query_result)
```
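Conceptually, a similarity search embeds the query and returns the stored documents whose vectors are closest to it. The sketch below illustrates the idea with toy word-count vectors and cosine similarity; embed, cosine, and similarity_search are hypothetical helpers, and this is not how FAISS or OpenAIEmbeddings work internally (real embedding models produce dense learned vectors).

```python
import math
from collections import Counter

documents = [
    "AI is the field of building intelligent machines.",
    "FAISS is a library for efficient similarity search.",
    "Paris is the capital of France.",
]


def embed(text: str) -> Counter:
    # Toy "embedding": lowercase word counts with punctuation stripped
    words = (w.strip(".,?!") for w in text.lower().split())
    return Counter(w for w in words if w)


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def similarity_search(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


print(similarity_search("What is AI?", documents))
```

A real vector store precomputes and indexes the document vectors so that each query does not have to re-embed every document.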

Define the application code after you’ve added the prompts, chains, agents, and tools.

```python
from langchain.llms import OpenAI
from langchain.chains import ConversationalRetrievalChain
from langchain.vectorstores import FAISS
from langchain.document_loaders import TextLoader
from langchain.embeddings import OpenAIEmbeddings

# Load a document and create a vector store
loader = TextLoader("path_to_document.txt")
documents = loader.load()
embeddings = OpenAIEmbeddings()
vectorstore = FAISS.from_documents(documents, embeddings)

# Initialize the LLM and the retrieval chain (the chain expects a retriever)
llm = OpenAI(model="gpt-3.5-turbo-instruct")
qa_chain = ConversationalRetrievalChain.from_llm(llm, retriever=vectorstore.as_retriever())

# Ask a question; this chain also takes the previous chat history as input
response = qa_chain.invoke({"question": "What is the main topic of this document?", "chat_history": []})
print(response["answer"])
```

LangChain is versatile and can be deployed in various environments (e.g., web apps, APIs, chatbots).

You can integrate LangChain into a web framework to serve responses.

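```python
from fastapi import FastAPI
from langchain.chains import LLMChain
from langchain.llms import OpenAI
from langchain.prompts import PromptTemplate

app = FastAPI()

# Initialize the prompt, LLM, and chain once at startup
prompt = PromptTemplate(input_variables=["text"], template="Translate the following text to French: {text}")
llm = OpenAI(model="gpt-3.5-turbo-instruct")
llm_chain = LLMChain(prompt=prompt, llm=llm)


@app.get("/generate")
def generate_response(input_text: str):
    # input_text arrives as a query parameter, e.g. /generate?input_text=Hello
    return llm_chain.run(input_text)
```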

LangChain's modular components can also be packaged into serverless functions for highly scalable applications.

LangChain Tools: Extending LLM Functionality

While large language models (LLMs) are exceptional at generating and understanding text, they have inherent limitations: on their own, they cannot access real-time data, perform arithmetic reliably, or interact with external systems.

LangChain Tools provide a structured way to bridge this gap by allowing LLMs to interact with external functions, services, and APIs. These tools enable your language model to go beyond generating text and actually perform actions.

What Are LangChain Tools?

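Tools in LangChain are wrappers around functions that an agent can call while executing a task. They extend an LLM-based system beyond text generation so that it can fetch data, run computations, and call external services.

Each tool includes a name, the function to call, and a description that tells the agent when to use the tool.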

Here’s a simple example of creating a tool that multiplies two numbers.

```python
from langchain.agents import Tool


# Define a custom function that takes a single string such as "3,4"
def multiply_numbers(text: str) -> str:
    numbers = list(map(int, text.split(",")))
    return str(numbers[0] * numbers[1])


# Wrap it as a LangChain tool
multiply_tool = Tool(
    name="MultiplyTwoNumbers",
    func=multiply_numbers,
    description="Multiplies two comma-separated numbers. Example input: '3,4'",
)
```

This tool can now be used by an agent when it determines multiplication is required for a task.

Tools are especially powerful when combined with LangChain Agents, which decide which tool to use based on the task.

```python
from langchain.agents import initialize_agent
from langchain.llms import OpenAI

llm = OpenAI(temperature=0)
tools = [multiply_tool]

agent = initialize_agent(tools=tools, llm=llm, agent="zero-shot-react-description")
response = agent.run("What is the result of multiplying 8 and 12?")
print(response)
```

In this example, the agent will interpret the request, choose the MultiplyTwoNumbers tool, execute it, and return the result.
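In the zero-shot ReAct style, the model writes its choice as "Action:" and "Action Input:" lines; the agent then parses them, calls the matching tool, and feeds the result back to the model as an observation. The sketch below shows a single such step with a scripted model reply instead of a real LLM; run_tool_step and the reply text are hypothetical, and a real agent repeats this loop until the model produces a final answer.

```python
import re


def multiply_numbers(text: str) -> str:
    a, b = (int(x) for x in text.split(","))
    return str(a * b)


# Tools are looked up by the name the model writes after "Action:"
tools = {"MultiplyTwoNumbers": multiply_numbers}

# Scripted reply standing in for the model's output
model_reply = (
    "Thought: I need to multiply 8 and 12.\n"
    "Action: MultiplyTwoNumbers\n"
    "Action Input: 8,12"
)


def run_tool_step(reply: str, tools: dict) -> str:
    """Parse the chosen tool and its input from the reply, then call the tool."""
    action = re.search(r"Action:\s*(.+)", reply)
    action_input = re.search(r"Action Input:\s*(.+)", reply)
    if not action or not action_input:
        raise ValueError("Reply does not name a tool")
    tool = tools[action.group(1).strip()]
    return tool(action_input.group(1).strip())


print(run_tool_step(model_reply, tools))  # 96
```

This is why the tool's description matters: it is the only information the model has when deciding which name to write after "Action:".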

| Feature | LangChain | LangSmith |
|---|---|---|
| Purpose | Framework for building LLM-powered applications | Tool for monitoring and debugging LLMs |
| Main Focus | Integration of LLMs, prompt management, chains, agents, and memory | Providing observability and debugging capabilities for LLM workflows |
| Use Cases | Building chatbots, content generation, text-to-image tasks, etc. | Tracking, debugging, and improving LLM outputs in real-time |
| Key Features | Chains, agents, external tool integrations, memory, document loaders | Real-time logging, debugging, error tracking, and performance insights |
| Target Audience | Developers building LLM-driven applications | Developers looking to optimize and debug LLM workflows |
| Integration with LLMs | Yes, integrates LLMs for various use cases | Yes, integrates with LLMs to monitor and optimize their performance |

| Feature | LangChain | LangGraph |
|---|---|---|
| Purpose | Framework for building LLM-powered applications | Library for building stateful, multi-step LLM workflows and agents as graphs |
| Main Focus | Integration of LLMs, prompt management, chains, agents, and memory | Graph-based orchestration of workflows (nodes, edges, and shared state) for complex interactions between components |
| Use Cases | Building chatbots, content generation, text-to-image tasks, etc. | Building agents and multi-step workflows that need branching, loops, or multiple cooperating agents |
| Key Features | Chains, agents, external tool integrations, memory, document loaders | Stateful graphs, conditional edges and cycles, persistence, human-in-the-loop support, graph visualization |
| Target Audience | Developers building LLM-driven applications | Developers needing fine-grained control over complex LLM application workflows |
| Integration with LLMs | Yes, integrates LLMs for various use cases | Yes, runs LLM calls inside graph nodes and works alongside LangChain components |

To see how to add language models to LangChain, look at this example:

Many LLM providers, like OpenAI, Hugging Face, and others, can be connected to LangChain. Here’s an example of how to bring in an OpenAI LLM.

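```python
from langchain.llms import OpenAI

# Initialize the OpenAI LLM with your API key
llm = OpenAI(openai_api_key="your-api-key")
```

For a Hugging Face model, the setup looks similar:

```python
from langchain.llms import HuggingFacePipeline

# Load a local Hugging Face model by name (requires the transformers package)
llm = HuggingFacePipeline.from_model_id(model_id="distilgpt2", task="text-generation")
```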

After loading the LLM, you can use it in a prompt or chain. Here's an example of using it to generate text:

```python
from langchain.prompts import PromptTemplate
from langchain.chains import LLMChain

# Define a prompt template
prompt = PromptTemplate(input_variables=["topic"], template="Write a blog post about {topic}")

# Create an LLMChain with the LLM and the prompt
chain = LLMChain(llm=llm, prompt=prompt)

# Run the chain with a specific input
output = chain.run(topic="Artificial Intelligence in 2025")
print(output)
```

The same approach works with LangChain's other LLM integrations: switching providers is mainly a matter of configuring credentials and installing the dependencies for the provider you want to use.
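Switching providers is cheap because LangChain's LLM wrappers share a common interface, so chain code depends on that interface rather than on any one provider. The sketch below shows the same design idea in plain Python with typing.Protocol; TextModel, UppercaseModel, EchoModel, and run_chain are hypothetical stand-ins, not LangChain classes.

```python
from typing import Protocol


class TextModel(Protocol):
    def generate(self, prompt: str) -> str: ...


# Two hypothetical providers; real ones would wrap OpenAI, Hugging Face, etc.
class UppercaseModel:
    def generate(self, prompt: str) -> str:
        return prompt.upper()


class EchoModel:
    def generate(self, prompt: str) -> str:
        return f"echo: {prompt}"


def run_chain(model: TextModel, topic: str) -> str:
    # The chain only relies on the shared generate() method
    prompt = f"Write a blog post about {topic}"
    return model.generate(prompt)


for model in (UppercaseModel(), EchoModel()):
    print(run_chain(model, "AI"))
```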

LangSmith is a platform for monitoring and debugging Large Language Model (LLM) applications. It is especially helpful for developers who want to trace, evaluate, and improve LLM behavior in real time, making sure that outputs match the intended behavior and quality.

LangSmith works with LangChain to give developers the tools they need to test and improve their generative AI apps, which leads to better performance in all LLM-based processes.
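Tracing for LangChain runs is typically switched on through environment variables set before the chains are created. A minimal sketch, assuming the LANGCHAIN_TRACING_V2, LANGCHAIN_API_KEY, and LANGCHAIN_PROJECT variable names used by LangSmith's LangChain integration (newer releases may also accept LANGSMITH_-prefixed equivalents, so check the documentation for your version); the key and project name below are placeholders.

```python
import os

# Turn on LangSmith tracing for LangChain runs in this process
os.environ["LANGCHAIN_TRACING_V2"] = "true"

# Placeholder values: replace with your own key and a project name of your choice
os.environ["LANGCHAIN_API_KEY"] = "your-langsmith-api-key"
os.environ["LANGCHAIN_PROJECT"] = "my-langchain-app"
```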

LangChain is a framework that makes it easier to build apps that use Large Language Models (LLMs). To get started with LangChain, follow these steps:

First, install the LangChain library using pip:

```bash
pip install langchain
```

You may also need to install libraries for the LLM provider you want to use, such as OpenAI or Hugging Face.

LangChain works with a number of LLM providers. If you want to use OpenAI, for example, you need to install the openai package and set your API key:

```bash
pip install openai
```

Then, set the API key in your environment:

```bash
export OPENAI_API_KEY="your-api-key"
```

Here’s an example of using LangChain to interact with an LLM:

```python
from langchain.llms import OpenAI
from langchain.prompts import PromptTemplate
from langchain.chains import LLMChain

# The API key is read from the OPENAI_API_KEY environment variable set above
llm = OpenAI(temperature=0.7)

prompt = PromptTemplate(
    input_variables=["topic"],
    template="Write a detailed article about {topic}.",
)

chain = LLMChain(llm=llm, prompt=prompt)
output = chain.run(topic="Artificial Intelligence")
print(output)
```

LangChain offers a number of important features that can make your LLM-powered apps better. For example, SimpleSequentialChain runs chains in order, passing each chain's output to the next:

```python
from langchain.chains import LLMChain, SimpleSequentialChain
from langchain.prompts import PromptTemplate

# Define the first chain
chain1 = LLMChain(
    llm=llm,
    prompt=PromptTemplate(input_variables=["question"], template="What are the benefits of {question}?"),
)

# Define the second chain
chain2 = LLMChain(
    llm=llm,
    prompt=PromptTemplate(input_variables=["answer"], template="Elaborate on the following: {answer}"),
)

# Combine the chains: chain1's output becomes chain2's input
sequential_chain = SimpleSequentialChain(chains=[chain1, chain2])

# Run the combined chain
output = sequential_chain.run("using AI in healthcare")
print(output)
```
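A simple sequential chain relies on each step taking exactly one string and returning exactly one string, so the output of one step can be passed directly to the next. The library-free sketch below makes that hand-off visible by recording each intermediate result; chain1 and chain2 are hypothetical stand-ins that return canned text instead of calling an LLM, and run_sequential is a hypothetical helper.

```python
def chain1(question: str) -> str:
    # Stand-in for the "What are the benefits of {question}?" chain
    return f"Benefits of {question}: earlier diagnosis"


def chain2(answer: str) -> str:
    # Stand-in for the "Elaborate on the following: {answer}" chain
    return f"In more detail: {answer}"


def run_sequential(steps, first_input: str):
    """Run steps in order, returning the final output and every intermediate output."""
    value = first_input
    intermediate = []
    for step in steps:
        value = step(value)  # each output becomes the next input
        intermediate.append(value)
    return value, intermediate


output, steps_seen = run_sequential([chain1, chain2], "using AI in healthcare")
for i, text in enumerate(steps_seen, start=1):
    print(f"Step {i}: {text}")
```

When a workflow needs several named inputs or outputs per step, this single-string contract no longer fits, which is where LangChain's more general SequentialChain comes in.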

LangChain's documentation and tutorials can help you learn how to use its more advanced features.

With LangChain, you can quickly build applications such as chatbots, question-answering systems, and document analysis tools.

LangChain is a great choice for making scalable and smart LLM-driven apps because it is so flexible.

The LangChain framework makes it easy to create apps that use Large Language Models (LLMs), such as chatbots, question answering over documents, content generation, and workflow automation.

LangChain’s flexibility and integration options make these use cases practical and impactful across industries.

LangChain is a powerful framework that connects Large Language Models (LLMs) with real-world uses in many different areas. Its flexible structure, wide range of built-in features, and adaptability let developers build AI systems for conversation, content generation, data analysis, and more. By providing tools for memory management, prompt engineering, and seamless API integration, LangChain makes it easier for businesses and students to use the full potential of generative AI. Whether you want to scale workflows, boost creativity, or give each user a personalized experience, LangChain is one of the most useful tools in the AI ecosystem.


1. Is LangChain a library or framework?

LangChain is a framework, distributed as Python and JavaScript libraries, that makes it easier to build apps that use Large Language Models (LLMs). Its modular components let you build conversational agents, automate workflows, and more.

2. Does ChatGPT use LangChain?

ChatGPT does not directly use LangChain. However, developers can add ChatGPT or other LLMs to LangChain-based apps to make them more useful.

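3. What is the difference between LLM and LangChain?

An LLM is the model that generates text. LangChain is a framework that connects LLMs to prompts, memory, tools, and external data so you can build complete applications around them.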

4. Is LangChain API free?

You can use LangChain for free because it is open-source. However, external services you connect to it (such as OpenAI or Pinecone) may charge for usage.

5. Which is better, framework or library?

It depends on the use case.
