
This comparison blog on PyTorch v/s TensorFlow is intended to be useful for anyone considering starting a new project, making the switch from one Deep Learning framework to another, or learning about the top two frameworks! The focus is mainly on programmability and flexibility when setting up the training and deployment components of a Deep Learning stack.

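Let’s look at the factors we will be using for the comparison:

- Ramp-Up Time
- Graph Creation and Debugging
- Coverage
- Serialization
- Deployment
- Documentation
- Device Management
- Custom Extensions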

So let the battle begin!

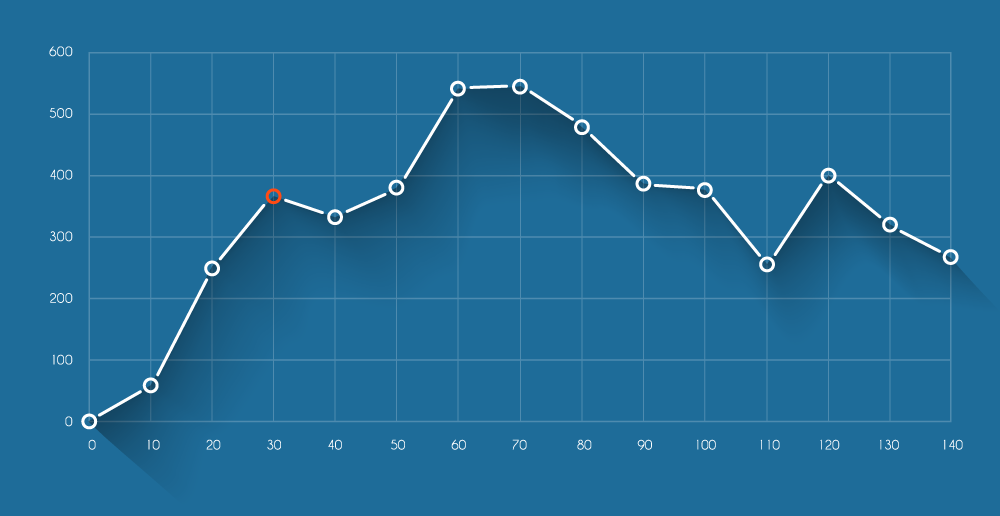

I will start this PyTorch vs TensorFlow blog by comparing both frameworks on the basis of Ramp-Up Time.

Since the only real prerequisite is something as simple as NumPy, PyTorch is very easy to learn and grasp.
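As a minimal sketch of the NumPy point (assuming NumPy and PyTorch are installed; the array values are arbitrary), the snippet below turns a NumPy array into a tensor and applies the same slicing, broadcasting and reduction idioms you would use in NumPy.

```python
import numpy as np
import torch

# A small NumPy array (values chosen only for illustration)
a = np.arange(6, dtype=np.float32).reshape(2, 3)

# torch.from_numpy wraps the same memory, so no copy is made
t = torch.from_numpy(a)

# NumPy-style operations carry over almost one-to-one
row_sums = t.sum(dim=1)      # like a.sum(axis=1)
first_col = t[:, 0]          # same slicing syntax
scaled = t * 2 + 1           # broadcasting with Python scalars

# .numpy() converts back for use with the rest of the NumPy ecosystem
scaled_np = scaled.numpy()
print(row_sums, first_col, scaled_np)
```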

With TensorFlow, as we all know, the graph is compiled first, and only then do we get the actual graph output.

So where is the dynamism here? TensorFlow also has an extra dependency: the compiled code is run by the TensorFlow execution engine. For me, the fewer dependencies, the better.

Back to PyTorch: the code is known to run fast and efficiently, and you will not need to learn any extra concepts.

With TensorFlow (in its 1.x graph API), we need concepts such as variable scoping, placeholders, and sessions. This also leads to more boilerplate code, which I’m sure none of the programmers here like.

So in my opinion, PyTorch wins this one!

Next up is Graph Creation and Debugging. Beginning with PyTorch, the clear advantage is the dynamic nature of the entire process of creating a graph.


The graph is built up as the interpreter runs each line of code that corresponds to that particular part of the graph.

So the graph is built entirely at runtime, and I like it a lot for this.
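A minimal sketch of what "built at runtime" means in practice, assuming a recent PyTorch install: `DynamicNet` is a hypothetical toy module whose `forward` uses a plain Python loop, so the recorded graph can have a different depth on every call.

```python
import torch
import torch.nn as nn

class DynamicNet(nn.Module):
    def __init__(self):
        super().__init__()
        self.layer = nn.Linear(4, 4)

    def forward(self, x, n_steps):
        # Ordinary Python control flow: the autograd graph is recorded as
        # these lines execute, so its depth can differ from call to call.
        for _ in range(n_steps):
            x = torch.relu(self.layer(x))
        return x.sum()

torch.manual_seed(0)
net = DynamicNet()
x = torch.randn(2, 4)

shallow = net(x, n_steps=1)  # graph with one application of the layer
deep = net(x, n_steps=3)     # graph with three applications, built on the fly
deep.backward()              # gradients flow back through all three steps
print(shallow.item(), deep.item(), net.layer.weight.grad.shape)
```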

With TensorFlow, the construction is static: the graph has to be compiled and then run on the execution engine I mentioned earlier.

Debugging PyTorch code is also much easier because pdb can be used. Since it is the standard Python debugger, we do not have to learn another debugger from scratch.
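To illustrate, here is a hypothetical toy module with an optional `pdb.set_trace()` inside `forward`. With `debug=True`, execution pauses there and you can inspect live tensors with ordinary pdb commands; the flag is off by default so the snippet runs straight through.

```python
import pdb
import torch
import torch.nn as nn

class TinyNet(nn.Module):
    def __init__(self, debug=False):
        super().__init__()
        self.fc = nn.Linear(3, 1)
        self.debug = debug

    def forward(self, x):
        h = self.fc(x)
        if self.debug:
            # Drops into the ordinary Python debugger with real tensors in scope:
            # try `p h.shape`, `p self.fc.weight`, then `c` to continue.
            pdb.set_trace()
        return torch.sigmoid(h)

net = TinyNet(debug=False)  # set debug=True to stop inside forward()
out = net(torch.zeros(2, 3))
print(out.shape)
```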

With TensorFlow, you need to put in a little extra effort. There are 2 options to debug:

Well, PyTorch wins this one as well!

When it comes to Coverage, certain operations, such as:

1. Flipping a tensor along a dimension

2. Checking a tensor for NaN and infinity

3. Fast Fourier transforms

are supported natively by TensorFlow.
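The three operations listed above map onto these calls in a current TensorFlow 2 install (a sketch; the input values are made up): `tf.reverse` for flipping, `tf.math.is_nan` / `tf.math.is_inf` for the checks, and `tf.signal.fft` for the transform.

```python
import numpy as np
import tensorflow as tf

x = tf.constant([[1.0, float("nan")],
                 [float("inf"), 4.0]])

# 1. Flip along a dimension (here: reverse each row)
flipped = tf.reverse(x, axis=[1])

# 2. Element-wise checks for NaN and infinity
nan_mask = tf.math.is_nan(x)
inf_mask = tf.math.is_inf(x)

# 3. FFT (tf.signal.fft expects a complex input, so cast first)
impulse = tf.constant([1.0, 0.0, 0.0, 0.0])
spectrum = tf.signal.fft(tf.cast(impulse, tf.complex64))

print(flipped.numpy(), nan_mask.numpy(), inf_mask.numpy(), spectrum.numpy())
```

The FFT of a unit impulse is flat, so `spectrum` should be all ones, which makes it an easy sanity check.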

We also have the contrib package, which we can use to build more models.

It provides higher-level functionality and gives you a wide spectrum of options to work with.

PyTorch, as of now, has fewer of these features implemented, but I am sure the gap will be bridged soon given all the attention PyTorch is attracting.

However, it is not as popular as TensorFlow among freelancers and learners. This is subjective, but it is what it is, guys!

TensorFlow nailed it in this round!

Moving on to Serialization: it’s no surprise that saving and loading models is fairly simple with both frameworks.


PyTorch has a simple API that can either save all the weights of a model or pickle the entire class, if you prefer.
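A minimal sketch of both options, assuming a recent PyTorch version; the `nn.Linear` model is only a placeholder, and in-memory buffers stand in for files so nothing is written to disk.

```python
import io
import torch
import torch.nn as nn

torch.manual_seed(0)
model = nn.Linear(3, 2)

# Option 1: save only the weights (the state_dict)
weights_buf = io.BytesIO()
torch.save(model.state_dict(), weights_buf)
weights_buf.seek(0)
restored = nn.Linear(3, 2)  # the class must be rebuilt in code first
restored.load_state_dict(torch.load(weights_buf))

# Option 2: pickle the whole module object
model_buf = io.BytesIO()
torch.save(model, model_buf)
model_buf.seek(0)
# Recent PyTorch versions default to weights_only=True, so unpickling a full
# module requires opting out explicitly (only do this for trusted files).
whole = torch.load(model_buf, weights_only=False)
```

Option 1 is generally the safer choice, because the pickled object in option 2 is tied to the exact class definitions and module paths that existed when it was saved.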

However, the major advantage of TensorFlow is that the entire graph can be saved as a protocol buffer, and yes, this includes both parameters and operations.

The graph can then be loaded in other supported languages, such as C++ or Java, depending on the requirement.
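In TensorFlow 2 this is done with `tf.saved_model.save`, which writes the graph as a `saved_model.pb` protocol buffer next to a `variables/` directory. The sketch below uses a hypothetical toy `Scaler` module and a temporary directory; the resulting directory is what the C++ and Java SavedModel loaders consume.

```python
import os
import tempfile
import tensorflow as tf

class Scaler(tf.Module):
    """Toy module: multiplies its input by a trainable weight."""

    def __init__(self):
        super().__init__()
        self.w = tf.Variable(3.0)

    @tf.function(input_signature=[tf.TensorSpec(shape=[None], dtype=tf.float32)])
    def __call__(self, x):
        return x * self.w

export_dir = tempfile.mkdtemp()
tf.saved_model.save(Scaler(), export_dir)

# The graph is stored as a protocol buffer alongside a variables/ directory
pb_path = os.path.join(export_dir, "saved_model.pb")

# Reload without needing the original Python class definition
loaded = tf.saved_model.load(export_dir)
out = loaded(tf.constant([1.0, 2.0]))
print(os.path.exists(pb_path), out.numpy())
```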

This is critical for deployment stacks where Python is not an option. It is also useful when you change the model source code but still want to be able to run old models.

Well, it is as clear as day: TensorFlow got this one!

Next comes Deployment. For small-scale server-side deployments, both frameworks are easy to wrap in, for example, a Flask web server.
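A minimal sketch of such a wrapper, assuming Flask and PyTorch are installed. The `/predict` route, the JSON shape and the untrained `nn.Linear` model are all hypothetical placeholders; a TensorFlow model could be called from the same handler instead.

```python
import torch
import torch.nn as nn
from flask import Flask, jsonify, request

app = Flask(__name__)

# Hypothetical stand-in for a trained model; load real weights here instead
model = nn.Linear(3, 1)
model.eval()

@app.route("/predict", methods=["POST"])
def predict():
    # Expects JSON such as {"features": [[0.1, 0.2, 0.3]]}
    features = request.get_json()["features"]
    with torch.no_grad():  # inference only, no autograd graph
        scores = model(torch.tensor(features, dtype=torch.float32))
    return jsonify(scores.squeeze(1).tolist())
```

Calling `app.run()` starts Flask's development server, which is fine for small internal services but not meant for heavy production traffic.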

For mobile and embedded deployments, TensorFlow works really well. This is more than can be said of most other deep learning frameworks, including PyTorch.

Deploying to Android or iOS does require a non-trivial amount of work in TensorFlow, but at least you don’t have to rewrite the entire inference portion of your model in Java or C++.
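The text does not name the tool, but the usual TensorFlow route to mobile and embedded devices is TensorFlow Lite. The sketch below (assuming a TensorFlow 2 install; `Scaler` is a hypothetical toy module) exports a SavedModel, converts it to a `.tflite` flatbuffer, and runs it with the Python interpreter, which reads the same model format as the on-device runtimes.

```python
import tempfile
import numpy as np
import tensorflow as tf

class Scaler(tf.Module):
    def __init__(self):
        super().__init__()
        self.w = tf.Variable(3.0)

    @tf.function(input_signature=[tf.TensorSpec(shape=[2], dtype=tf.float32)])
    def __call__(self, x):
        return x * self.w

module = Scaler()
export_dir = tempfile.mkdtemp()
tf.saved_model.save(module, export_dir,
                    signatures=module.__call__.get_concrete_function())

# Convert the SavedModel into a compact .tflite flatbuffer for on-device use
tflite_bytes = tf.lite.TFLiteConverter.from_saved_model(export_dir).convert()

# Run it with the Python TFLite interpreter
interpreter = tf.lite.Interpreter(model_content=tflite_bytes)
interpreter.allocate_tensors()
input_index = interpreter.get_input_details()[0]["index"]
output_index = interpreter.get_output_details()[0]["index"]
interpreter.set_tensor(input_index, np.array([1.0, 2.0], dtype=np.float32))
interpreter.invoke()
result = interpreter.get_tensor(output_index)
print(result)
```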

For high-performance server-side deployments, there is TensorFlow Serving. Other than performance, one of its noticeable features is that models can be hot-swapped easily without bringing the service down.

I think I will give it to TensorFlow for this round as well!

Next up is Documentation. Needless to say, I have found everything I need in the official documentation of both frameworks.

The Python APIs are well documented and there are enough examples and tutorials to learn either framework.

But one tiny thing that grabbed my attention is that the PyTorch C library is mostly undocumented.

However, this only matters when writing a custom C extension, or perhaps when contributing to the software itself.

To sum it up, we’re stuck with a tie here, guys!

However, if you lean towards one of them, head down to the comments section and share your views. Let’s engage there!

Device management in TensorFlow is a breeze: you don’t have to specify anything, since the defaults are set well.


For example, TensorFlow automatically assumes you want to run on the GPU, if one is available.

In PyTorch, you must explicitly move everything onto the device even if CUDA is enabled.

The only downside with TensorFlow device management is that by default it consumes all the memory on all available GPUs even if only one is being used.
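A short sketch of both behaviours, assuming TensorFlow 2: operations land on a GPU without any device annotation, and `tf.config.experimental.set_memory_growth` is one way to stop TensorFlow from reserving all GPU memory up front (`tf.config.set_visible_devices` can additionally hide GPUs you don't want to use). On a CPU-only machine the loop simply does nothing.

```python
import tensorflow as tf

gpus = tf.config.list_physical_devices("GPU")

# Ask TensorFlow to grow GPU memory on demand instead of reserving it all.
# This must run before any GPU has been initialized.
for gpu in gpus:
    tf.config.experimental.set_memory_growth(gpu, True)

# No device is specified: TensorFlow places the op on a GPU if one exists
a = tf.constant([[1.0, 2.0], [3.0, 4.0]])
product = tf.matmul(a, a)

# Explicit placement is still possible when you want it
with tf.device("/CPU:0"):
    on_cpu = tf.matmul(a, a)

print(len(gpus), product.device, on_cpu.device)
```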

With PyTorch, I’ve found that the code needs more frequent checks for CUDA availability and more explicit device management. This is especially the case when writing code that should be able to run on both the CPU and GPU.
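This is the usual PyTorch pattern for device-agnostic code, shown as a minimal sketch with a placeholder model and random inputs: pick the device once, then move the model and every tensor to it explicitly.

```python
import torch
import torch.nn as nn

# The explicit CUDA availability check mentioned above
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Both the model's parameters and every input tensor must be moved explicitly
model = nn.Linear(4, 2).to(device)
batch = torch.randn(8, 4, device=device)
out = model(batch)

# Bring results back to the CPU, e.g. before converting to NumPy
out_cpu = out.detach().cpu()
print(device, out.shape)
```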

An easy win for TensorFlow here!


Moving on, last but not least, I have picked out Custom Extensions for you guys.

Building or binding custom extensions written in C, C++ or CUDA is doable with both frameworks.

TensorFlow requires more boilerplate code, though it is arguably cleaner for supporting multiple types and devices.

In PyTorch, however, you simply write an interface and a corresponding implementation for each of the CPU and GPU versions.

Compiling the extension is also straightforward with both frameworks and doesn’t require downloading any headers or source code beyond what’s included with the pip installation.

And PyTorch has the upper hand for this!

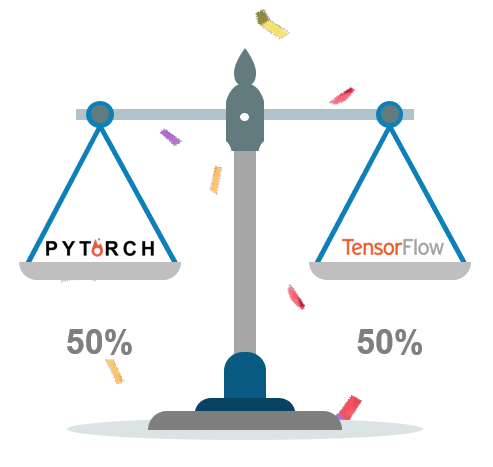

Well, to be optimistic, I would say PyTorch and TensorFlow are similar, and I would leave it at a tie.

But, in my personal opinion, I would prefer PyTorch over TensorFlow (in the ratio 65% to 35%).

However, this doesn’t mean PyTorch is better!

At the end of the day, it comes down to what you would like to code with and what your organization requires!

I use PyTorch at home but TensorFlow at work!

| Comparison Factors | TensorFlow | PyTorch |
|---|---|---|
| Ramp-Up Time | | W |
| Graph Creation And Debugging | | W |
| Coverage | W | |
| Serialization | W | |
| Deployment | W | |
| Documentation | Tie | Tie |
| Device Management | W | |
| Custom Extensions | | W |


I personally believe that both TensorFlow and PyTorch will revolutionize all aspects of Deep Learning, ranging from virtual assistants all the way to driving you around town. The change will be easy and subtle, yet it will have a big impact on Deep Learning and all its users!

I hope you have enjoyed my comparison blog on PyTorch v/s TensorFlow. If you have any questions, do mention them in the comments section and I will reply to you guys ASAP!

After reading this blog on PyTorch vs TensorFlow, I am pretty sure you want to know more about PyTorch, so I will soon be coming up with a blog series on it. To know more about TensorFlow, you can refer to the following blogs:


