
Before moving ahead in this HDFS tutorial blog, let me take you through some of the insane statistics related to HDFS:

Too hard to digest? Right. As discussed in the Hadoop Tutorial, Hadoop has two fundamental units: storage and processing. When I talk about the storage part of Hadoop, I am referring to HDFS, which stands for Hadoop Distributed File System. So, in this blog, I will introduce you to HDFS.

Here, I will be talking about:

Before talking about HDFS, let me tell you what a Distributed File System is.

A Distributed File System (DFS) manages data, i.e. files and folders, across multiple computers or servers. In other words, a DFS is a file system that allows us to store data over multiple nodes or machines in a cluster and allows multiple users to access that data. So basically, it serves the same purpose as the file system available on your machine, like NTFS (New Technology File System) on Windows or HFS (Hierarchical File System) on Mac. The only difference is that, in the case of a Distributed File System, you store data on multiple machines rather than on a single machine. Even though the files are stored across the network, a DFS organizes and displays data in such a manner that a user sitting on a machine will feel as if all the data is stored on that very machine.
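To make that "single machine" illusion concrete, here is a minimal Python sketch of a DFS namespace. It assumes a hypothetical `ToyDFS` class (not a Hadoop or any real DFS API): a file is split into small chunks, the chunks are spread round-robin across machines, and a namespace records where each chunk lives, so `read()` returns the whole file without the caller knowing its physical location. Real systems such as HDFS add much larger blocks, replication and fault tolerance; this only illustrates the idea.

```python
# Toy model of a distributed file system (DFS) namespace.
# Hypothetical sketch: ToyDFS and its methods are illustrative names,
# not part of Hadoop or any real DFS API.


class ToyDFS:
    def __init__(self, machines):
        # Each machine's local disk is modeled as a dict: chunk id -> bytes.
        self.machines = {name: {} for name in machines}
        # The namespace maps a logical path to the ordered (machine, chunk id)
        # pairs that hold the file's data.
        self.namespace = {}
        self._next_chunk_id = 0

    def write(self, path, data, chunk_size=4):
        """Split data into chunks and spread them round-robin over the machines."""
        names = list(self.machines)
        locations = []
        for offset in range(0, len(data), chunk_size):
            machine = names[(offset // chunk_size) % len(names)]
            chunk_id = self._next_chunk_id
            self._next_chunk_id += 1
            self.machines[machine][chunk_id] = data[offset:offset + chunk_size]
            locations.append((machine, chunk_id))
        self.namespace[path] = locations

    def read(self, path):
        """Reassemble a file; the caller never needs to know where chunks live."""
        return b"".join(self.machines[m][c] for m, c in self.namespace[path])


dfs = ToyDFS(["machine-1", "machine-2", "machine-3"])
dfs.write("/docs/report.txt", b"hello distributed world")
print(dfs.read("/docs/report.txt"))  # b'hello distributed world'
```

The user sees one path, `/docs/report.txt`, while its six chunks actually sit on three different machines.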

Hadoop Distributed File System, or HDFS, is a Java-based distributed file system that allows you to store large data sets across multiple nodes in a Hadoop cluster. So, if you install Hadoop, you get HDFS as the underlying storage system for storing data in the distributed environment.

Let’s take an example to understand it. Imagine that you have ten machines, each with a 1 TB hard drive. If you install Hadoop as a platform on top of these ten machines, you get HDFS as a storage service. HDFS is distributed in such a way that every machine contributes its individual storage for storing any kind of data.

When you access HDFS from any of the ten machines in the Hadoop cluster, you will feel as if you have logged into a single large machine with a storage capacity of 10 TB (the total storage of the ten machines). What does that mean? It means that you can store a single large file of 10 TB, which will be distributed over the ten machines (1 TB each). So, a file is not limited by the physical storage of any individual machine.
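The 10 TB figure above treats the cluster's raw disk space as fully usable. In practice, HDFS splits files into fixed-size blocks and, by default, keeps three copies of every block, so usable capacity is roughly a third of the raw total. The sketch below runs the numbers under stated assumptions: binary units, an even spread of blocks across nodes (a simplification, not HDFS's real placement policy), and the defaults of recent Hadoop versions (128 MB blocks, replication factor 3). `cluster_summary` is a hypothetical helper, not a Hadoop API.

```python
# Back-of-the-envelope model of the ten-machine example.
# Assumptions: binary units, an even spread of blocks across nodes (a
# simplification, not HDFS's actual block placement policy), and the
# defaults of recent Hadoop versions: 128 MB blocks, replication factor 3.

MB = 1024 ** 2
TB = 1024 ** 4


def ceil_div(a, b):
    """Integer ceiling division, avoiding floating-point rounding."""
    return -(-a // b)


def cluster_summary(nodes, disk_per_node, file_size, block_size=128 * MB, replication=3):
    raw_capacity = nodes * disk_per_node
    # Every block is stored `replication` times, so usable space shrinks accordingly.
    usable_capacity = raw_capacity // replication
    num_blocks = ceil_div(file_size, block_size)
    # Block copies (replicas included) each node holds under an even spread.
    blocks_per_node = ceil_div(num_blocks * replication, nodes)
    return {
        "raw_capacity_tb": raw_capacity / TB,
        "usable_capacity_tb": usable_capacity / TB,
        "num_blocks": num_blocks,
        "blocks_per_node": blocks_per_node,
        "fits": file_size <= usable_capacity,
    }


# The blog's idealized view: no replication, so a 10 TB file fits in 10 x 1 TB.
print(cluster_summary(10, 1 * TB, 10 * TB, replication=1))
# With the default replication factor of 3, usable space is only about 3.33 TB.
print(cluster_summary(10, 1 * TB, 10 * TB))
```

Under these assumptions a 10 TB file becomes 81,920 blocks of 128 MB; without replication each node holds 8,192 of them, which matches the "1 TB each" picture in the text.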

Because the data is divided across the machines, it allows us to take advantage of distributed and parallel computation. Let’s understand this concept using the above example. Suppose it takes 43 minutes to process a 1 TB file on a single machine. Now tell me, how much time will it take to process the same 1 TB file when you have 10 machines with a similar configuration in a Hadoop cluster: 43 minutes or 4.3 minutes? 4.3 minutes, right! What happened here? Each node works on its own part of the 1 TB file in parallel. Therefore, the work that previously took 43 minutes now finishes in just 4.3 minutes, because it is divided over ten machines (in the ideal case, where the work splits evenly and coordination overhead is negligible).
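The 43-minute to 4.3-minute arithmetic, and the divide-process-combine pattern behind it, can be sketched in Python. This is an illustration under assumptions, not how Hadoop schedules work: word counting stands in for "processing", and a thread pool stands in for cluster nodes. Because Python threads share one interpreter lock, the sketch shows the structure of the computation rather than a real 10x speedup. The function names are hypothetical.

```python
# Sketch of the divide-process-combine pattern behind the 43 -> 4.3 minute example.
# Assumptions: word counting stands in for "processing", and a thread pool
# stands in for cluster nodes. Python threads share one interpreter (GIL), so
# this shows the structure of the computation, not a real 10x speedup.
from concurrent.futures import ThreadPoolExecutor


def ideal_parallel_time(single_machine_minutes, machines):
    """Ideal linear speedup: ignores coordination, network transfer and skew."""
    return single_machine_minutes / machines


def split(items, parts):
    """Split a list into `parts` contiguous chunks whose sizes differ by at most 1."""
    size, remainder = divmod(len(items), parts)
    chunks, start = [], 0
    for i in range(parts):
        end = start + size + (1 if i < remainder else 0)
        chunks.append(items[start:end])
        start = end
    return chunks


def count_words(lines):
    """The per-node task: process only this node's share of the data."""
    return sum(len(line.split()) for line in lines)


def parallel_word_count(lines, workers=10):
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Each worker handles one chunk; the partial results are then combined.
        return sum(pool.map(count_words, split(lines, workers)))


print(ideal_parallel_time(43, 10))  # 4.3
lines = ["hdfs stores data across many machines"] * 1000
print(parallel_word_count(lines))  # 6000
```

The combined result equals what a single worker would compute on the whole input; only the way the work is divided changes.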

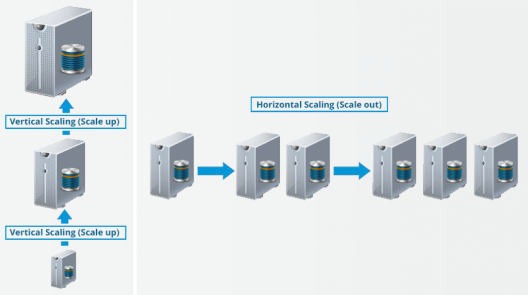

Last but not least, let us talk about horizontal scaling, or scaling out, in Hadoop. There are two types of scaling: vertical and horizontal. In vertical scaling (scale up), you increase the hardware capacity of your system. In other words, you procure more RAM or CPU and add it to your existing system to make it more robust and powerful. But there are challenges associated with vertical scaling, or scaling up:

In horizontal scaling (scale out), you add more nodes to the existing cluster instead of increasing the hardware capacity of individual machines. Most importantly, you can add more machines on the go, i.e. without stopping the system. Therefore, scaling out involves no downtime of any sort. At the end of the day, you will have more machines working in parallel to meet your requirements.
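The contrast between the two approaches can be sketched with a toy Python model. Everything here is a hypothetical illustration: `ToyCluster`, its methods, and the 16 TB per-machine ceiling are assumptions, not Hadoop APIs or real hardware limits. The model records a "downtime event" whenever a machine is upgraded in place, while scaling out only appends new machines and leaves the running ones untouched.

```python
# Toy comparison of vertical (scale-up) and horizontal (scale-out) scaling.
# Hypothetical model: ToyCluster, its methods and the 16 TB per-machine
# ceiling are illustrative assumptions, not Hadoop APIs or real limits.


class ToyCluster:
    MAX_DISK_PER_MACHINE_TB = 16  # assumed hardware ceiling for a single machine

    def __init__(self, machines, disk_tb):
        self.disks_tb = [disk_tb] * machines
        self.downtime_events = 0

    def capacity_tb(self):
        return sum(self.disks_tb)

    def scale_up(self, index, extra_tb):
        """Vertical: upgrade one machine, which must be stopped and is capped by hardware."""
        new_size = self.disks_tb[index] + extra_tb
        if new_size > self.MAX_DISK_PER_MACHINE_TB:
            raise ValueError("upgrade exceeds the per-machine hardware ceiling")
        self.downtime_events += 1  # the machine is stopped while hardware is added
        self.disks_tb[index] = new_size

    def scale_out(self, machines, disk_tb):
        """Horizontal: add new machines while existing ones keep running."""
        self.disks_tb.extend([disk_tb] * machines)


cluster = ToyCluster(10, 1)
cluster.scale_out(5, 1)
print(cluster.capacity_tb(), cluster.downtime_events)  # 15 0
cluster.scale_up(0, 3)
print(cluster.capacity_tb(), cluster.downtime_events)  # 18 1
```

In this model, capacity grows with every machine added through `scale_out`, with no downtime counted and no per-machine ceiling to hit.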

We will understand these features in detail when we explore the HDFS architecture in our next HDFS tutorial blog. But for now, let’s have an overview of the features of HDFS:

So now, you have a brief idea about HDFS and its features. But trust me guys, this is just the tip of the iceberg. In my next HDFS tutorial blog, I will deep dive into the HDFS architecture and unveil the secrets behind the success of HDFS. Together we will answer all those questions that are on your mind, such as:

Now that you have understood HDFS and its features, check out the Hadoop training by Edureka, a trusted online learning company with a network of more than 250,000 satisfied learners spread across the globe. The Edureka Big Data Hadoop Certification Training course helps learners become experts in HDFS, YARN, MapReduce, Pig, Hive, HBase, Oozie, Flume and Sqoop using real-time use cases from the Retail, Social Media, Aviation, Tourism and Finance domains.
