Hadoop is one of the most prominent projects taken up by the Apache Software Foundation. A large part of the IT industry's future relies on this technology, and Hadoop developers carry serious roles and responsibilities. In this article, we shall discuss the important job roles and responsibilities of a Hadoop Developer.

A Hadoop Developer is basically a programmer with good knowledge of the Hadoop Ecosystem. The job of a Hadoop developer is to design, develop and deploy Hadoop Applications with strong documentation skills.

The Hadoop Developer designs, builds, installs, configures and supports Hadoop systems. The developer translates complex functional and technical requirements into detailed documentation and analyzes vast data stores to uncover insights while maintaining security and data privacy.

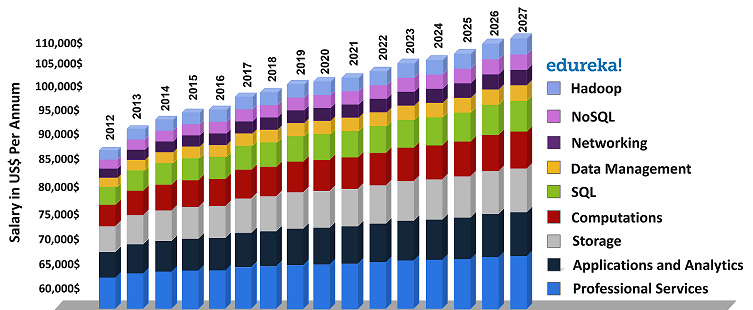

Hadoop Developer is one of the most highly paid profiles in the current IT industry. Salaries for Hadoop developers have risen steadily over the last few years. Let us check out the rise in salary trends for Hadoop developers compared to other profiles.

Let us now discuss the salary trends for a Hadoop Developer in different countries based on experience. Firstly, let us consider the United States of America. Big data professionals there are offered salaries according to their experience, as described below.

Entry-level salaries for freshers start at US$75,000 to US$80,000, while candidates with considerable experience are offered US$125,000 to US$150,000 per annum.

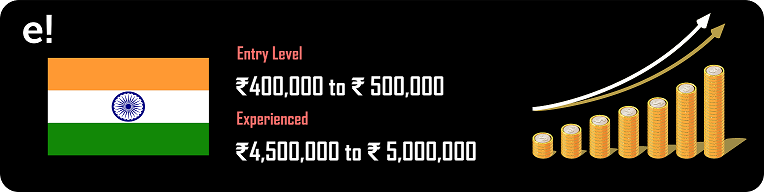

After the United States of America, we will now discuss the Hadoop Developer salary trends in India.

In India, entry-level salaries for freshers start at 400,000 INR to 500,000 INR, while candidates with considerable experience are offered 4,500,000 INR to 5,000,000 INR.

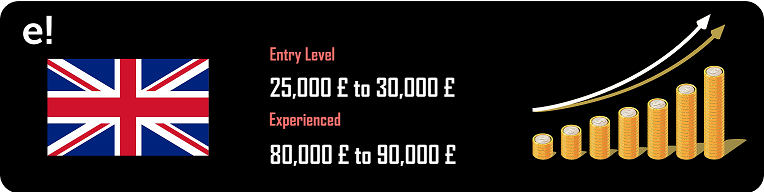

Next, let us take a look at the salary trends for Hadoop Developers in the United Kingdom.

In the United Kingdom, entry-level salaries for freshers start at 25,000 pounds to 30,000 pounds, while an experienced candidate is offered 80,000 pounds to 90,000 pounds.

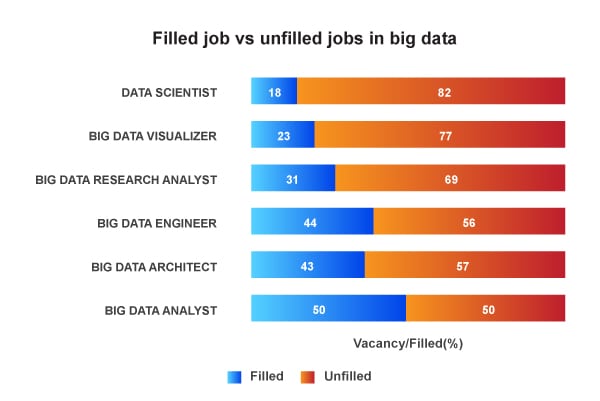

The number of Hadoop jobs has increased rapidly over the last few years.

About 50,000 vacancies for Hadoop Developers are available in India.

India contributes 12% of Hadoop Developer jobs in the worldwide market.

The number of offshore jobs in India can increase at a rapid pace due to outsourcing.

Many big MNCs in India are offering handsome salaries to Hadoop Developers.

80% of market employers are looking for Big Data experts.

The Big Data Analytics market in India is currently valued at $2 Billion and is expected to grow at a CAGR of 26 per cent reaching approximately $16 Billion by 2025, making India’s share approximately 32 per cent in the overall global market.

This contributes to one-fifth of India’s KPO market worth $5.6 billion. Also, The Hindu predicts that by the end of 2019, India alone will face a shortage of close to three lakh Data Scientists. This presents a tremendous career and growth opportunity.

This skill gap in Big Data can be bridged through comprehensive learning of Apache Hadoop, which enables professionals and freshers alike to add valuable Big Data skills to their profile.

Basic knowledge of Hadoop and its Eco-System

Able to work with Linux and execute some of the basic commands

Hadoop technologies like MapReduce, Pig, Hive, HBase

Ability to handle Multi-Threading and Concurrency in the Eco-System

Familiarity with ETL tools and data loading tools like Flume and Sqoop

Experience with scripting languages like Pig Latin

Now, we will move on to the key topic of this article, the “Roles and Responsibilities of a Hadoop Developer”. Every company runs its own operations on its data, and the developers involved have to fulfil their roles accordingly.

Understanding the basic suite that provides the solution to big data is mandatory. The basic components of Hadoop, HDFS and MapReduce, should be learnt first. Next come the data access tools like Hive, Pig and Sqoop. Finally comes mastering the data management and monitoring tools like Flume, ZooKeeper and Oozie.

If you are stepping into the world of Hadoop, chances are that you will start learning it using one of the popular distributions, such as Cloudera CDH or Hortonworks HDP.

These platforms help you speed up learning by providing a tailor-made environment where most of the services in the Hadoop ecosystem are already running. You can start writing MapReduce jobs right away.

These distributions mainly run on one of the Linux flavours. For example, Cloudera CDH uses CentOS. A comfortable understanding of Linux and its flavours will help to smooth your learning curve.

I strongly recommend gaining some competency in Linux while or before learning Hadoop.

Hadoop is the technology that the future relies on, which makes real-time, data-driven decision making a crucial capability. This can only be achieved when you get real-time hands-on experience with Hadoop systems.

The main aim of Hadoop is to provide high-performance data systems. This can be done by coding data processing jobs, such as MapReduce programs, efficiently and by balancing the load among all the available nodes in the cluster.
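To make the MapReduce idea concrete, here is a minimal sketch of the classic word count written in plain Python. It only imitates the three phases (map, shuffle, reduce) in a single process; the function names `map_phase`, `shuffle_phase`, `reduce_phase` and `word_count` are illustrative assumptions and not part of any Hadoop API. On a real cluster the framework runs the mappers and reducers in parallel across nodes.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    """Mapper: emit a (word, 1) pair for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle_phase(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle: group all emitted values by key, between the map and reduce steps."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Reducer: combine all values seen for one key into a single count."""
    return key, sum(values)


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    """Run map, shuffle and reduce in sequence (single process, illustration only)."""
    pairs = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle_phase(pairs)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    print(word_count(["big data big", "data hadoop"]))
```

Because each mapper call depends only on its own line and each reducer call only on its own key, the work can be split across nodes, which is where the load balancing mentioned above comes in.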

Data is being generated every single second, and the sources are limitless. Every piece of electronic equipment generates data, from the sensor to the satellite. A Hadoop system should be capable of loading data from the multiple sources relevant to the domain it is designed for.

Designing, building, installing, configuring and supporting are five crucial stages in a Hadoop system, and a Hadoop Developer should be able to install, build and configure one. This is the basic requirement for every Hadoop developer.

Hadoop is among the most widespread technologies used at a global level, and the different companies using it have their own requirements. A Hadoop Developer should be able to decode the requirements and explain the technicalities of the project to the clients.

Hadoop is undoubtedly the technology that enhanced data processing capabilities. It changed the face of customer-based companies. Based on the artificially intelligent systems, it is now possible to identify customer requirements and make decisions with little human interaction.

It is crucial for every Hadoop developer to have the skill to analyze the collected data and generate the corresponding reports.

Every company attaches its own importance to its data. It might be sensitive information such as customer data, transaction records or the company's confidential information. It is a priority for every Hadoop Developer to maintain strong data security and privacy to protect the company from potential cyber threats.

Hadoop stands out when it comes to performance and reliability. Hadoop Developers should be able to design the Hadoop system to be highly scalable and to withstand unexpected failures that could lead to data loss.

For example, the Hadoop system should always maintain a standby node that can take over from the current node when the current node encounters an unexpected failure.
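The standby idea can be sketched in a few lines of Python. This is a toy model only: `Node` and `FailoverPair` are hypothetical names invented for illustration, not Hadoop classes, and the health flag is set by hand rather than detected. In HDFS the same pattern appears as an active NameNode paired with a standby NameNode.

```python
from dataclasses import dataclass


@dataclass
class Node:
    """A cluster node with a manually controlled health flag (illustration only)."""
    name: str
    healthy: bool = True


class FailoverPair:
    """Hypothetical active/standby pair; not part of any Hadoop API."""

    def __init__(self, active: Node, standby: Node) -> None:
        self.active = active
        self.standby = standby

    def check_and_failover(self) -> Node:
        """Promote the standby if the active node has failed; return the serving node."""
        if not self.active.healthy and self.standby.healthy:
            # Swap roles: the standby takes over and the failed node becomes the standby.
            self.active, self.standby = self.standby, self.active
        return self.active


if __name__ == "__main__":
    pair = FailoverPair(Node("node-a"), Node("node-b"))
    pair.active.healthy = False          # simulate an unexpected failure
    print(pair.check_and_failover().name)
```

A production setup also has to detect the failure automatically and make sure only one node acts as active at a time; this sketch leaves both out.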

A major role of any Hadoop Developer is to make sure that enough memory is available on both the query end and the server end. Buffers, indexes and joins play a key role when dealing with huge amounts of data.

If enough memory is not available to the system, it is likely to slow down.

HBase is designed and developed to host huge tables of data for interactive and batch-mode analytics by running a NoSQL store on top of Hadoop. Its ability to access data in parallel across distributed systems makes it a capable, fast and scalable data store for the Hadoop framework.

Sound knowledge of working with HBase would add weight to a Hadoop Developer's resume.
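As a rough illustration of how an HBase-style table is organised, the sketch below models cells addressed by row key, column (written as `family:qualifier`) and timestamp, with rows scanned in sorted key order. `ToyTable` and its methods are hypothetical and run purely in memory; they are not the HBase client API and leave out regions, persistence and distribution.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Tuple


class ToyTable:
    """In-memory toy model of an HBase-style table (hypothetical, not the HBase API)."""

    def __init__(self) -> None:
        # row key -> "family:qualifier" -> list of (timestamp, value) versions
        self._rows: Dict[str, Dict[str, List[Tuple[int, str]]]] = defaultdict(
            lambda: defaultdict(list)
        )

    def put(self, row: str, column: str, value: str, timestamp: int) -> None:
        """Store one versioned cell value."""
        self._rows[row][column].append((timestamp, value))

    def get(self, row: str, column: str) -> Optional[str]:
        """Return the newest value of a cell, or None if the cell does not exist."""
        versions = self._rows.get(row, {}).get(column)
        if not versions:
            return None
        return max(versions, key=lambda version: version[0])[1]

    def scan(self, start: str, stop: str) -> List[str]:
        """Return row keys in sorted order within the half-open range [start, stop)."""
        return [key for key in sorted(self._rows) if start <= key < stop]


if __name__ == "__main__":
    table = ToyTable()
    table.put("user#001", "info:name", "Asha", timestamp=1)
    table.put("user#001", "info:name", "Asha K", timestamp=2)
    table.put("user#002", "info:name", "Ravi", timestamp=1)
    print(table.get("user#001", "info:name"))
    print(table.scan("user#", "user$"))
```

Keeping rows sorted by key is what makes range scans cheap, so choosing a good row key design is one of the first decisions when modelling data for such a store.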

Apache ZooKeeper provides a best-in-class service to connect, coordinate and maintain a Hadoop cluster. It keeps all the nodes in a cluster synchronized using robust techniques while exposing a simple interface.

Being comfortable with ZooKeeper services helps a Hadoop Developer design a Hadoop cluster with strong connectivity.

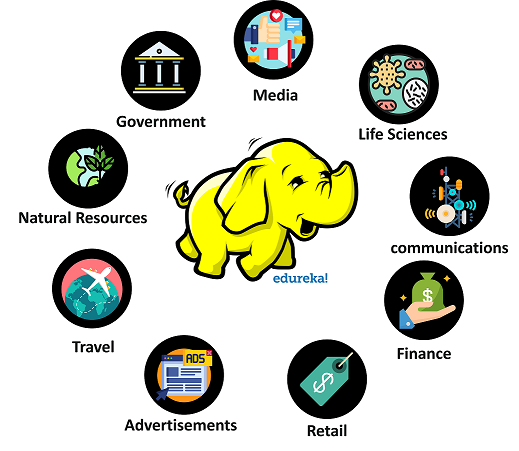

Now, let us discuss the different business sectors that are looking for Hadoop Developers.

Hadoop is in use across many business sectors, and the sectors where Hadoop Developers play an important role are practically limitless.

With this, we come to the end of this article. I hope I have shed some light on the Hadoop Developer profile, along with the skills required, roles and responsibilities, job trends and salary trends.

Now that you have understood Big Data and its technologies, check out the Hadoop training by Edureka, a trusted online learning company with a network of more than 250,000 satisfied learners spread across the globe. The Edureka Big Data Hadoop Certification Training course helps learners become experts in HDFS, Yarn, MapReduce, Pig, Hive, HBase, Oozie, Flume and Sqoop using real-time use cases in the Retail, Social Media, Aviation, Tourism and Finance domains.

If you have any queries related to this “Hadoop Developer Roles and Responsibilities” article, please write to us in the comment section below and we will respond as early as possible, or join our Hadoop Training in Pondicherry.
