Almost all organisations now use analytics so that they can base decisions on hard facts rather than guesswork. All types of analytics require large amounts of data to be collected and prepared for analysis. This data may be available within the organisation or sourced from outside. Firms generate data as part of their regular activities, but it may be spread across different places and stored in different forms. The process of collecting, cleaning, storing and analysing data is known as data handling.

The Advanced Executive Certificate Course In Product Management deals in detail with the use of data for finding insights that help companies make better decisions. One can learn more about this programme and how it benefits them from our website. Let us now understand what this process is and the different frameworks used for it.

What Is Data Handling?

Data handling is the process of collecting, cleaning, organising and analysing data available in a firm or from outside sources. Huge amounts of data, popularly called big data, are collected and analysed by organisations to uncover valuable insights. These insights help the firm understand the reason for various events in the past and predict outcomes in the future. Data analytics helps companies make better decisions. Data handling requires the service of experts in the field and various tools that help in the process. To understand the function better, we must look at each part of the process in detail.

Data Collection

Data collection is the first step in data handling. Data is available from various sources, and analysts must collect it before they can use it. Before collecting anything, experts must decide the desired outcome of the analysis. This tells them what kind of information they need and where it can be found. The next step is deciding which methods will be used to collect and process the data. This activity is necessary because raw information, as it exists, is of little use. It must be collected and cleaned before analysis.

Information may be collected using various methods like surveys, transactional tracking, interviews, observation, forms or social media monitoring. Two approaches to data collection are widely used. In the primary method, researchers collect the data first-hand, before anyone else has processed it. This method is expensive but more accurate. In the secondary method, experts collect data that has already been gathered and processed by someone else. This method is inexpensive but may be less accurate. It can be thought of as second-hand information.

Data Cleaning

Data cleaning, or cleansing, is the job of removing corrupt, incorrect, duplicate, wrongly formatted or incomplete records from the collected data. This process is very important in data handling because if the information is not correct, the outcome of the analysis will also be wrong. That can have serious implications for the decisions the company management takes. Data cleaning should not be confused with data transformation, which is the task of converting data from one format to another for storage or analysis.

There are different steps in data cleaning, and they are as follows:

- Removing Duplicate Data

The first step in cleansing is to remove duplicate or irrelevant observations from the data. Irrelevant data is information that you don’t need for the analysis. Duplicates often arise when data is collected from several sources and combined. Removing them is one of the critical steps in data cleansing.

- Fix Structural Errors

Structural errors appear as inconsistent naming, typos or incorrect capitalisation. They must be corrected because they can cause mislabelled categories or classes, which in turn affects the outcome of the analytics.

- Filter Unwanted Outliers

When observing the data, you may find values that don’t fit with the rest of the data you are analysing. If such an outlier is the result of an error, removing it will make your results more accurate. Not all outliers are incorrect, however, so each one should be examined before deciding whether to remove it.

- Taking Care Of Missing Data

Taking care of missing data is essential because many algorithms will not accept missing values. One way to deal with this is to remove the observations that have missing values. Another is to impute the missing values based on the other observations. A third option is to change the way the data is used so that the analysis can work around the missing values.

- Validate And Quality Control

At the end of the cleaning process, you should be able to answer a few basic questions. Does the data make sense and follow the rules for its field? Does it prove or disprove your working theory, or bring out any insight? Can you find trends in the data?
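The cleaning steps above can be sketched in a few lines of Python. This is only a minimal illustration using the standard library: the records, the field names, the "five times the median" outlier rule and the list of allowed cities are all assumptions made for the example, not rules taken from any particular tool.

```python
from statistics import median

# Hypothetical raw records; field names and values are invented for illustration.
raw = [
    {"id": 1, "city": "london", "amount": 120.0},
    {"id": 1, "city": "london", "amount": 120.0},  # exact duplicate
    {"id": 2, "city": " London", "amount": 95.0},  # stray space
    {"id": 3, "city": "PARIS", "amount": None},    # missing value
    {"id": 4, "city": "Paris", "amount": 110.0},
    {"id": 5, "city": "Paris", "amount": 9000.0},  # suspicious outlier
    {"id": 6, "city": "London", "amount": 105.0},
]

ALLOWED_CITIES = {"London", "Paris"}  # assumed validation rule


def remove_duplicates(rows):
    """Keep the first occurrence of each identical record."""
    seen, unique = set(), []
    for row in rows:
        key = tuple(sorted(row.items()))
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


def fix_structure(rows):
    """Normalise whitespace and capitalisation so category labels match."""
    return [{**r, "city": r["city"].strip().title()} for r in rows]


def filter_outliers(rows, factor=5):
    """Drop amounts above `factor` times the median (a crude, assumed rule)."""
    typical = median(r["amount"] for r in rows if r["amount"] is not None)
    return [r for r in rows
            if r["amount"] is None or r["amount"] <= factor * typical]


def impute_missing(rows):
    """Replace missing amounts with the median of the known amounts."""
    fill = median(r["amount"] for r in rows if r["amount"] is not None)
    return [{**r, "amount": fill if r["amount"] is None else r["amount"]}
            for r in rows]


def validate(rows):
    """Check that every record follows the rules assumed for its fields."""
    for r in rows:
        assert r["city"] in ALLOWED_CITIES, r
        assert r["amount"] > 0, r
    return rows


cleaned = validate(
    impute_missing(filter_outliers(fix_structure(remove_duplicates(raw))))
)
for row in cleaned:
    print(row)
```

In practice the outlier rule deserves the most care: here the value 9000.0 is dropped automatically, but a real analyst would first check whether it is an error or a genuine observation.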


Data Processing

Data processing is the step in which the raw data is taken as input and converted into valuable insights. For small data sets, you may use manual techniques such as SQL queries or Excel. Manual processes are slow and prone to error. When the data to be processed is huge, data analysts use machine learning and artificial intelligence instead. Once the experts finish processing the data, they can uncover insights that make decision-making more accurate.
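As a small illustration of processing with an SQL query, the sketch below loads a few rows into an in-memory SQLite database using Python's built-in `sqlite3` module and summarises them. The `sales` table, its columns and the figures are hypothetical and exist only for this example.

```python
import sqlite3

# In-memory database; the table name, columns and values are invented.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("North", 120.0), ("South", 80.0), ("North", 60.0),
     ("South", 40.0), ("East", 100.0)],
)

# Total and number of sales per region, largest total first.
totals = conn.execute(
    """
    SELECT region, SUM(amount) AS total, COUNT(*) AS orders
    FROM sales
    GROUP BY region
    ORDER BY total DESC
    """
).fetchall()
conn.close()

for region, total, orders in totals:
    print(f"{region}: {total} across {orders} orders")
```

A query like this is quick to write for a few thousand rows, which is why SQL and spreadsheets remain common for small data sets.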

Data Visualisation

Once the experts complete processing the data, the results are available in a form that can be read only by data experts. They need to make it into information that others can easily read and understand. The specialists use visualisation tools to convert the results into graphs, charts or reports that everyone can read.

Data Storage

It is essential to store the data in a way that the company can easily access it for reference. It is important to ensure that such information is not accessible to unauthorised persons. Such security measures must be put in place by the organisation.

We have seen the data handling process and what it involves. Let us now see how experts handle unstructured data to get the necessary insights. This topic is covered in detail in the Advanced Executive Certificate Course In Product Management offered by reputed institutions. You can visit our web portal to learn more about this programme.

Handling Unstructured Data

Unstructured data can also be processed, but the process is different. Unstructured data occurs in forms such as audio files, images, application logs, JSON and CSV exports, and BLOBs (binary large objects). The process for working with these types of data is described below.

Collection And Preparation

The first thing a company must do is to decide what sources to include for the collection of unstructured data. As much as possible, you must validate the data and remove any incomplete data.

Moving The Data

Unstructured data does not fit neatly into the fixed tables of a relational database, so you must move it to a big data environment. Usually, such data is moved to a data handling framework like Hadoop.

Data Exploration

It is possible that the analysing team may not have a clear picture of the extent of available data. Exploration is a kind of preliminary analytics that helps the team clearly understand the contents of the data set and identify analytics objectives. It is an essential step for unstructured data when working with large data sets.

Introducing Structure To Unstructured Data

Metadata Analysis – Metadata is structured data, and hence it can be processed as it exists. Analysing it helps to understand the contents of the unstructured data.

Regular Expressions – A regular expression is a pattern that matches text with a particular form. People may write dates in different formats, for example, even though they all convey the same meaning. Regular expressions help to recognise such variants so that they can be converted to a single, consistent form.

Tokenisation – This method splits text into small units called tokens, such as words or phrases, so that common patterns can be discovered. Phrases that are repeated many times in a text can be identified from their tokens, and these tokens can be combined to create a semantic structure.

Segmentation – This process identifies data that can be grouped. For example, information that was generated on the same day can be grouped for analysis.
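The regular expression, tokenisation and segmentation techniques above can be combined in a short Python sketch. The log lines, the three date formats and the day/month/year reading of `05/01/2023` are assumptions made for illustration; real data would need patterns chosen to match its own formats.

```python
import re
from collections import Counter, defaultdict

# Hypothetical free-text log lines with dates written in different formats.
lines = [
    "2023-01-05 payment failed for order 17",
    "05/01/2023 payment failed for order 18",
    "5 Jan 2023 login succeeded",
    "2023-01-06 payment failed for order 21",
]

MONTHS = {name: number for number, name in enumerate(
    "Jan Feb Mar Apr May Jun Jul Aug Sep Oct Nov Dec".split(), start=1)}

# Each pattern is paired with a function returning (year, month, day).
PATTERNS = [
    (re.compile(r"^(\d{4})-(\d{2})-(\d{2})\s+"),
     lambda m: (int(m[1]), int(m[2]), int(m[3]))),
    (re.compile(r"^(\d{2})/(\d{2})/(\d{4})\s+"),  # assumed day/month/year
     lambda m: (int(m[3]), int(m[2]), int(m[1]))),
    (re.compile(r"^(\d{1,2}) ([A-Z][a-z]{2}) (\d{4})\s+"),
     lambda m: (int(m[3]), MONTHS[m[2]], int(m[1]))),
]


def normalise(line):
    """Return (ISO date, remaining text); the date is None if nothing matches."""
    for pattern, extract in PATTERNS:
        match = pattern.match(line)
        if match:
            year, month, day = extract(match)
            return f"{year:04d}-{month:02d}-{day:02d}", line[match.end():]
    return None, line


def tokenise(text):
    """Split text into lowercase word tokens, ignoring digits and punctuation."""
    return re.findall(r"[a-z]+", text.lower())


bigram_counts = Counter()   # how often each two-word phrase occurs
by_day = defaultdict(list)  # segmentation: messages grouped by date

for line in lines:
    date, message = normalise(line)
    tokens = tokenise(message)
    bigram_counts.update(zip(tokens, tokens[1:]))
    by_day[date].append(message)

print(bigram_counts[("payment", "failed")])
print({day: len(messages) for day, messages in by_day.items()})
```

Once the dates share one format, grouping by day becomes a simple dictionary lookup, and the repeated phrase "payment failed" stands out from the token counts.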

When all the above processes are complete, the data is ready for analysis and visualisation. These are carried out in the same way as for structured data.

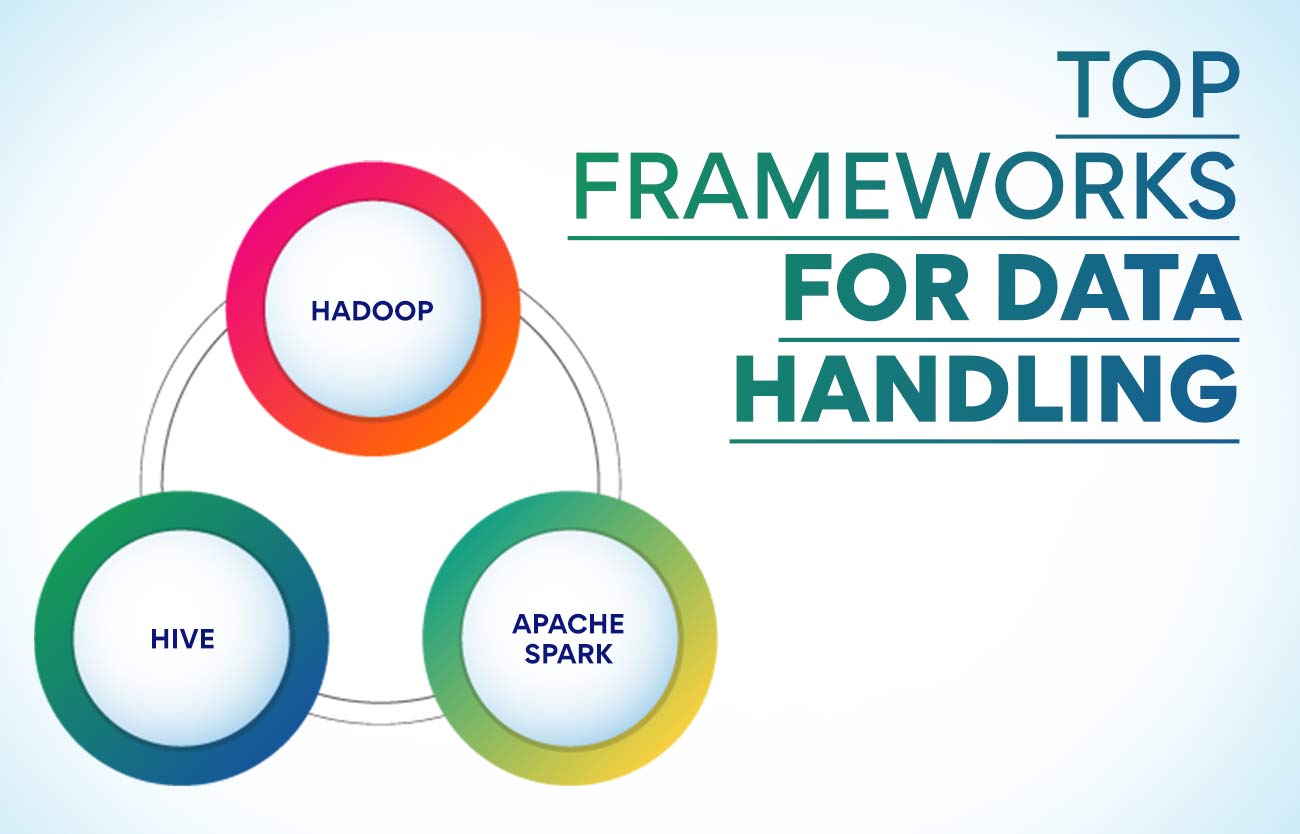

Top Frameworks For Data Handling

Hadoop

Hadoop is a framework for scalable, reliable, distributed computing. It can store and process large amounts of data. Hadoop runs on clusters of computers, and its modules are designed on the assumption that hardware will eventually fail and that the framework should handle such failures automatically. It splits files into large data blocks and distributes them across the nodes in a cluster. Processing the data on the nodes where it is stored improves efficiency and speed. This data handling framework can run in an on-site data centre or in the cloud.
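The idea of splitting a file into blocks and spreading copies across nodes can be shown with a toy Python model. This is not how HDFS itself is implemented: the tiny block size, the node names and the round-robin placement are assumptions for the sketch, whereas real HDFS uses much larger blocks (128 MB by default), three replicas by default and a rack-aware placement policy.

```python
def split_into_blocks(data, block_size):
    """Cut a byte string into consecutive blocks of at most block_size bytes."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def place_blocks(num_blocks, nodes, replication):
    """Assign each block to `replication` distinct nodes, round-robin."""
    return {
        block: [nodes[(block + r) % len(nodes)] for r in range(replication)]
        for block in range(num_blocks)
    }


def readable_after_failure(placement, failed_node):
    """True if every block still has a replica on a surviving node."""
    return all(
        any(node != failed_node for node in replicas)
        for replicas in placement.values()
    )


data = b"x" * 1000                         # stand-in for a large file
nodes = ["node-a", "node-b", "node-c"]     # hypothetical cluster nodes

blocks = split_into_blocks(data, block_size=256)
placement = place_blocks(len(blocks), nodes, replication=2)

print(len(blocks), "blocks")
print(placement)
print("survives loss of node-a:", readable_after_failure(placement, "node-a"))
```

Because every block lives on two different nodes, losing any single node still leaves a readable copy of each block, which is the property that lets the framework tolerate hardware failure.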

Hadoop has four modules. Hadoop Common provides the libraries and utilities needed by the other modules. The Hadoop Distributed File System (HDFS) stores the data. Hadoop YARN (Yet Another Resource Negotiator) is the resource management system that manages the computing resources in the cluster and schedules users’ applications. Hadoop MapReduce is an implementation of the MapReduce programming model for large-scale data processing. Hadoop is a good framework for customer analytics, enterprise projects and the creation of data lakes. It is well suited to batch processing and integrates with most other big data frameworks.
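The MapReduce model itself is easy to illustrate on a single machine. The sketch below is plain Python, not Hadoop's API: the function names and the two sample documents are invented, and the three phases that Hadoop would spread across many nodes simply run one after another here.

```python
from collections import defaultdict


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in the document."""
    for word in document.lower().split():
        yield word, 1


def shuffle(pairs):
    """Shuffle: group all emitted values by their key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: combine the values for one key into a single result."""
    return key, sum(values)


documents = ["big data needs big clusters", "data drives decisions"]

mapped = [pair for doc in documents for pair in map_phase(doc)]
counts = dict(reduce_phase(key, values)
              for key, values in shuffle(mapped).items())

print(counts)
```

Because each call to `map_phase` looks at one document and each call to `reduce_phase` looks at one key, both phases can be run in parallel on different machines, which is what makes the model suitable for large-scale batch processing.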


Apache Spark

Apache Spark is a batch processing framework that can also perform stream processing, which makes it a hybrid framework. It is easy to use, and one can write applications in Java, Scala, Python and R. This framework is good for machine learning, with the drawback that it normally requires a cluster manager and a distributed storage system. For development and testing, you can also run Spark on one machine with an executor per CPU core. This open-source cluster computing framework can be used standalone or in combination with Hadoop or Apache Mesos.

This data handling framework uses a data structure called the Resilient Distributed Dataset (RDD). An RDD is a read-only multiset of data items distributed across a cluster of machines. It acts as a working set for distributed programs and provides a deliberately restricted form of distributed shared memory. Spark can access sources like HDFS, Cassandra, HBase and S3. Spark Core is the foundation of Spark. It provides two forms of shared variables, called broadcast variables and accumulators. Other components, such as Spark SQL, Spark Streaming, MLlib and GraphX, are built on top of Spark Core.

Hive

Facebook created Apache Hive, a framework that converts SQL requests into MapReduce tasks. It has three main components. The Parser parses the incoming SQL requests. The Optimiser optimises the requests for better efficiency. The Executor launches the resulting tasks in the MapReduce framework. Hive can be integrated with Hadoop as a server component for processing large data volumes.

Even ten years after its release, Hive has held its position as one of the most widely used frameworks for big data analytics. Hortonworks released Hive 3 in 2018, switching the execution engine from MapReduce to Tez. This framework has excellent machine-learning capabilities. You can integrate Hive with most other big data frameworks.

These are just a few top frameworks that are popularly used for data processing. You can learn more about data handling frameworks in the Advanced Executive Certificate Course In Product Management conducted by reputed institutions. More details about this course and how it benefits professionals handling products can be had from our website.

Summing Up

Data handling is an essential process in all organisations today. Using the available information to arrive at valuable insights allows companies to make better decisions and achieve their goals quickly. The results depend on selecting the right data and on how it is processed. Data processing frameworks are not one-size-fits-all solutions, so it is wise to choose the framework that best suits each task. Getting the desired results also depends on the capability of the expert who handles the job.

