In today’s world, data is the main ingredient of internet applications and typically encompasses the following:

This data can be used to run analytics in real time serving various purposes, some of which are:

Problem: Collecting all this data is not easy, because it is generated by various sources in different formats.

Solution: One way to solve this problem is to use a messaging system. Messaging systems provide seamless integration between distributed applications by letting them exchange data as messages.

Apache Kafka

Apache Kafka is a distributed publish-subscribe messaging system that was originally developed at LinkedIn and later became an Apache Software Foundation project. Kafka is fast, agile, scalable and distributed by design.

Kafka Architecture and Terminology

Topic: A stream of messages belonging to a particular category is called a topic.

Producer: A producer can be any application that publishes messages to a topic.

Consumer: A consumer can be any application that subscribes to topics and consumes their messages.

Broker: A Kafka cluster is a set of servers, each of which is called a broker.
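To make these four terms concrete, here is a toy, in-memory model in Python. It is a sketch of the concepts only, not the Kafka client API: the class and method names (`Broker`, `Producer.send`, `Consumer.poll`) are hypothetical, and a real broker is a separate networked server. As in Kafka, the consumer here pulls new messages and remembers how far it has read in each topic.

```python
from collections import defaultdict

# Toy model for illustration only; NOT the Kafka client API.


class Broker:
    """Toy broker: stores each topic as an append-only list of messages."""

    def __init__(self):
        self.topics = defaultdict(list)

    def append(self, topic, message):
        self.topics[topic].append(message)

    def read(self, topic, offset):
        # Return every message at or after the given position in the topic.
        return self.topics[topic][offset:]


class Producer:
    def __init__(self, broker):
        self.broker = broker

    def send(self, topic, message):
        self.broker.append(topic, message)


class Consumer:
    def __init__(self, broker):
        self.broker = broker
        self.positions = {}  # topic -> index of the next message to read

    def subscribe(self, *topics):
        for topic in topics:
            self.positions.setdefault(topic, 0)

    def poll(self):
        # Pull new messages from every subscribed topic and advance positions.
        batch = []
        for topic, offset in self.positions.items():
            new = self.broker.read(topic, offset)
            batch.extend((topic, m) for m in new)
            self.positions[topic] = offset + len(new)
        return batch


broker = Broker()
producer = Producer(broker)
consumer = Consumer(broker)
consumer.subscribe("page-views")

producer.send("page-views", "user1 visited /home")
producer.send("clicks", "user2 clicked 'buy'")  # not subscribed, so not returned
print(consumer.poll())  # [('page-views', 'user1 visited /home')]
print(consumer.poll())  # [] (nothing new since the last poll)
```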

Kafka is scalable and supports several cluster layouts, such as multiple brokers on a single node or brokers spread across multiple nodes.

What’s the role of ZooKeeper?

Each Kafka broker coordinates with the other brokers through ZooKeeper. The ZooKeeper service also notifies producers and consumers when a new broker joins or an existing broker fails in the Kafka system.
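The notification pattern described above can be sketched as a toy registry with watchers. This is an illustration under simplifying assumptions, not ZooKeeper's API: in ZooKeeper itself, brokers register ephemeral znodes that disappear when their session ends, and clients set watches on them. The names `Registry`, `register`, `expire` and `watch` are hypothetical.

```python
# Toy coordination service for illustration only; NOT the ZooKeeper API.


class Registry:
    """Tracks live brokers and notifies watchers when membership changes."""

    def __init__(self):
        self.live_brokers = set()
        self.watchers = []

    def watch(self, callback):
        # Register a function to be called as callback(event, broker_id).
        self.watchers.append(callback)

    def _notify(self, event, broker_id):
        for callback in self.watchers:
            callback(event, broker_id)

    def register(self, broker_id):
        # A broker announces itself when it starts.
        self.live_brokers.add(broker_id)
        self._notify("joined", broker_id)

    def expire(self, broker_id):
        # Called when a broker stops responding (e.g. its session times out).
        self.live_brokers.discard(broker_id)
        self._notify("failed", broker_id)


events = []
registry = Registry()
registry.watch(lambda event, broker_id: events.append((event, broker_id)))

registry.register(0)
registry.register(1)
registry.expire(0)
print(sorted(registry.live_brokers))  # [1]
print(events)  # [('joined', 0), ('joined', 1), ('failed', 0)]
```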

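Single Node Multiple Brokers: several broker processes run on one machine (node), each with its own broker ID, port and log directory.

Multiple Nodes Multiple Brokers: brokers run on several machines, which together form one cluster.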
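A single-node setup raises the question of how several brokers can share one machine. A minimal sketch, assuming a ZooKeeper-based Kafka deployment: each broker process gets its own `server.properties` file with a unique `broker.id`, listener port and `log.dirs`. The helper name `single_node_broker_configs`, the ports and the paths are hypothetical. In a multi-node cluster, each machine still needs a unique `broker.id`, but brokers can reuse the same port because they run on different hosts.

```python
def single_node_broker_configs(count, base_port=9092, zookeeper="localhost:2181"):
    """Build server.properties contents for `count` brokers on one machine.

    Hypothetical helper: each broker process needs a unique broker.id, its
    own listener port and its own log directory, or they clash on one host.
    """
    configs = []
    for broker_id in range(count):
        props = {
            "broker.id": str(broker_id),
            "listeners": f"PLAINTEXT://localhost:{base_port + broker_id}",
            "log.dirs": f"/tmp/kafka-logs-{broker_id}",  # hypothetical path
            "zookeeper.connect": zookeeper,
        }
        configs.append("\n".join(f"{key}={value}" for key, value in props.items()))
    return configs


for text in single_node_broker_configs(3):
    print(text)
    print("---")
```

Each string would be saved as a separate properties file and passed to its own broker process.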

Kafka @ LinkedIn

LinkedIn Newsfeed is powered by Kafka

LinkedIn recommendations are powered by Kafka

LinkedIn notifications are powered by Kafka

Note: LinkedIn also uses Kafka for many other tasks, such as log monitoring, performance metrics and search improvement.

Who else uses Kafka?

DataSift: DataSift uses Kafka as a collector of monitoring events and to track users’ consumption of data streams in real time.

Wooga: Wooga uses Kafka to aggregate and process tracking data from all their Facebook games (hosted at various providers) in a central location.

Spongecell: Spongecell uses Kafka to run its entire analytics and monitoring pipeline, driving both real-time and ETL applications.

Loggly: Loggly is a popular cloud-based log management service. It uses Kafka for log collection.

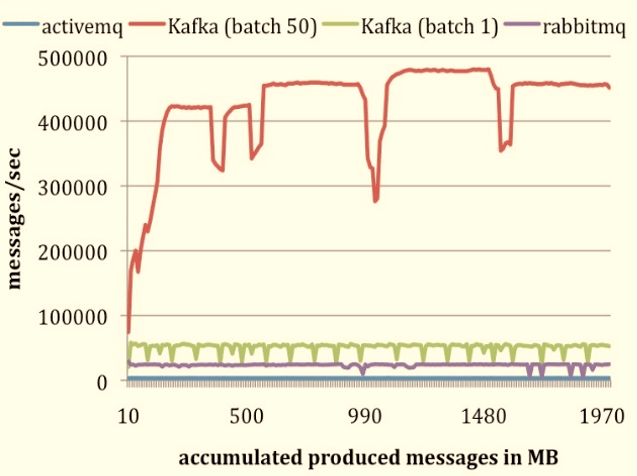

Comparative Study: Kafka vs. ActiveMQ vs. RabbitMQ

Kafka has a more efficient storage format. On average, each message has an overhead of 9 bytes in Kafka, versus 144 bytes in ActiveMQ.
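To put these per-message figures in perspective, here is a quick calculation for a hypothetical volume of 10 million messages. Payload size is excluded, since only the overhead differs between the two systems.

```python
# Per-message overhead figures quoted above (bytes per message).
KAFKA_OVERHEAD = 9
ACTIVEMQ_OVERHEAD = 144


def overhead_bytes(messages, per_message):
    """Total storage spent on per-message overhead, excluding payloads."""
    return messages * per_message


n = 10_000_000  # hypothetical message volume
kafka = overhead_bytes(n, KAFKA_OVERHEAD)
activemq = overhead_bytes(n, ACTIVEMQ_OVERHEAD)
print(kafka / 1e6, "MB vs", activemq / 1e6, "MB")  # 90.0 MB vs 1440.0 MB
print(f"overhead ratio: {activemq // kafka}x")  # overhead ratio: 16x
```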

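In both ActiveMQ and RabbitMQ, brokers maintain the delivery state of every message, which requires writing to disk. A Kafka broker does not track per-message delivery state (each consumer tracks its own position, or offset, in the log), so consuming messages causes no such disk writes on the broker, which makes it faster.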
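The difference in bookkeeping can be illustrated with two toy brokers. This is a simplified sketch, not how either kind of system is actually implemented: the hypothetical `TrackingBroker` records per-message delivery state in an in-memory set, standing in for the state ActiveMQ and RabbitMQ write to disk, while the hypothetical `LogBroker` follows Kafka's approach, where the consumer remembers a single offset.

```python
# Toy brokers for illustration only; neither is a real broker implementation.


class TrackingBroker:
    """Broker that records delivery state for every message it hands out."""

    def __init__(self, messages):
        self.messages = list(messages)
        # (consumer, message index) pairs; stands in for persisted state.
        self.delivered = set()

    def fetch(self, consumer):
        batch = []
        for i, m in enumerate(self.messages):
            if (consumer, i) not in self.delivered:
                self.delivered.add((consumer, i))  # one state update per message
                batch.append(m)
        return batch


class LogBroker:
    """Broker that only stores the log; consumers keep their own offset."""

    def __init__(self, messages):
        self.messages = list(messages)

    def fetch(self, offset):
        return self.messages[offset:]


messages = [f"event-{i}" for i in range(1000)]

tracking = TrackingBroker(messages)
tracking.fetch("analytics")
print(len(tracking.delivered))  # 1000 state entries held by the broker

log = LogBroker(messages)
offset = 0  # held by the consumer, not the broker
batch = log.fetch(offset)
offset += len(batch)
print(offset)  # 1000; the broker stored nothing extra
```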

With its wide adoption in production, Kafka has proven to be a promising solution to real-world problems. Apache Kafka training can help you get ahead of your peers in a real-time analytics career.

Got a question for us? Mention it in the comments section and we will get back to you, or join our Kafka training today.
