Apache Hive is one of the most important frameworks in the Hadoop ecosystem, which in turn makes it crucial for Hadoop certification. In this blog, we will learn about Apache Hive and how to install it on Ubuntu.

Apache Hive is a data warehouse infrastructure that facilitates querying and managing large data sets that reside in a distributed storage system. It is built on top of Hadoop and was originally developed by Facebook. Hive provides a way to query the data using a SQL-like query language called HiveQL (Hive Query Language).

Internally, a compiler translates HiveQL statements into MapReduce jobs, which are then submitted to the Hadoop framework for execution.

Hive looks very similar to a traditional database with SQL access. However, because Hive is based on Hadoop and MapReduce operations, there are several key differences:

As Hadoop is intended for long sequential scans and Hive is based on Hadoop, you should expect queries to have very high latency. This means that Hive is not appropriate for applications that need the very fast response times you can expect from a traditional RDBMS.

Finally, Hive is read-based and therefore not appropriate for transaction processing that typically involves a high percentage of write operations.

Follow the steps below to install Apache Hive on Ubuntu:

Step 1: Download the Hive tar file.

Command: wget http://archive.apache.org/dist/hive/hive-2.1.0/apache-hive-2.1.0-bin.tar.gz

Step 2: Extract the tar file.

Command: tar -xzf apache-hive-2.1.0-bin.tar.gz

Command: ls

Step 3: Edit the .bashrc file to set the Hive environment variables.

Command: sudo gedit .bashrc

Add the following at the end of the file:

# Set HIVE_HOME

export HIVE_HOME=/home/edureka/apache-hive-2.1.0-bin

export PATH=$PATH:/home/edureka/apache-hive-2.1.0-bin/bin

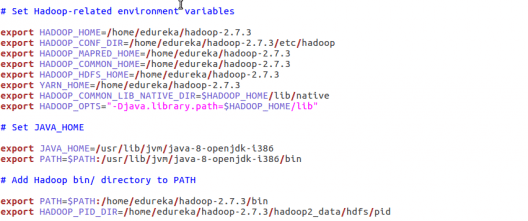

Also, make sure that the Hadoop path is set.

Run the command below to apply the changes in the current terminal.

Command: source .bashrc
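A quick sanity check after reloading the file can catch a mistyped path early. The sketch below assumes the HIVE_HOME variable set above and a HADOOP_HOME variable for the Hadoop installation (an assumption, since this post does not name the Hadoop variable). The check_dir helper is hypothetical and exists only for this example.

```shell
# check_dir is a hypothetical helper for this sketch, not part of Hive or Hadoop.
# It reports whether a variable holds the path of an existing directory.
check_dir() {
  name="$1"
  value="$2"
  if [ -n "$value" ] && [ -d "$value" ]; then
    echo "$name OK: $value"
  else
    echo "$name is unset or not a directory"
    return 1
  fi
}

check_dir HIVE_HOME "${HIVE_HOME:-}" || true
check_dir HADOOP_HOME "${HADOOP_HOME:-}" || true   # assumed variable name

# Check that the Hive bin directory made it onto PATH.
case ":$PATH:" in
  *":${HIVE_HOME:-/nonexistent}/bin:"*) echo "PATH contains the Hive bin directory" ;;
  *) echo "PATH does not contain the Hive bin directory" ;;
esac
```

If either check fails, re-open .bashrc and compare the exported paths with the directory that was actually extracted in Step 2.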

Step 4: Check the Hive version.

Command: hive --version

Step 5: Create Hive directories within HDFS. The ‘warehouse’ directory is where Hive stores its tables and their data.

Command:
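The command for this step is missing from the text. A minimal sketch, assuming the standard hdfs dfs client and the warehouse path configured later in hive-site.xml (/user/hive/warehouse). The /tmp scratch directory is an assumption based on common Hive setups, not something this post states.

```shell
WAREHOUSE_DIR=/user/hive/warehouse   # same value as hive.metastore.warehouse.dir
SCRATCH_DIR=/tmp                     # assumption: Hive's usual scratch location

if command -v hdfs >/dev/null 2>&1; then
  # -p creates missing parent directories and does not fail if the path exists.
  hdfs dfs -mkdir -p "$WAREHOUSE_DIR"
  hdfs dfs -mkdir -p "$SCRATCH_DIR"
else
  echo "hdfs not found on PATH; start Hadoop and set its path first"
fi
```

The Hadoop daemons need to be running for these commands to succeed, because the directories are created in HDFS rather than on the local disk.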

Step 6: Set read/write permissions for table.

Command:

In this command, we are giving write permission to the group:
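The command itself did not survive in the text. Assuming the directories are /user/hive/warehouse and /tmp (the second one is an assumption), group write permission could be granted along these lines:

```shell
HIVE_DIRS="/user/hive/warehouse /tmp"   # /tmp is an assumption, see above

if command -v hdfs >/dev/null 2>&1; then
  for dir in $HIVE_DIRS; do
    # g+w adds write permission for the owning group.
    hdfs dfs -chmod g+w "$dir"
  done
  # List the directories themselves (-d) to confirm the new permission bits.
  hdfs dfs -ls -d $HIVE_DIRS
else
  echo "hdfs not found on PATH; skipping permission changes"
fi
```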

Step 7: Set Hadoop path in hive-env.sh

Command: cd apache-hive-2.1.0-bin/

Command: gedit conf/hive-env.sh

Set the parameters as shown in the below snapshot.
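The snapshot is not reproduced here, so the following is only a sketch of what conf/hive-env.sh typically contains. HADOOP_HOME and HIVE_CONF_DIR are variables from Hive's hive-env.sh.template. The Hadoop directory below is a hypothetical placeholder, and the Hive path follows the HIVE_HOME used earlier in this post.

```shell
# conf/hive-env.sh (sketch, not the original snapshot)

# Hypothetical Hadoop location; replace it with your own install directory.
export HADOOP_HOME=/home/edureka/hadoop

# Directory that holds hive-site.xml, derived from the HIVE_HOME set in .bashrc.
export HIVE_CONF_DIR=/home/edureka/apache-hive-2.1.0-bin/conf
```

If conf/hive-env.sh does not exist yet, it can be created by copying conf/hive-env.sh.template, which ships with the Hive distribution.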

Step 8: Edit hive-site.xml

Command: gedit conf/hive-site.xml

<?xml version="1.0" encoding="UTF-8" standalone="no"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
  Licensed to the Apache Software Foundation (ASF) under one or more
  contributor license agreements. See the NOTICE file distributed with
  this work for additional information regarding copyright ownership.
  The ASF licenses this file to You under the Apache License, Version 2.0
  (the "License"); you may not use this file except in compliance with
  the License. You may obtain a copy of the License at

      http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License.
-->
<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:derby:;databaseName=/home/edureka/apache-hive-2.1.0-bin/metastore_db;create=true</value>
    <description>
      JDBC connect string for a JDBC metastore.
      To use SSL to encrypt/authenticate the connection, provide database-specific SSL flag in the connection URL.
      For example, jdbc:postgresql://myhost/db?ssl=true for postgres database.
    </description>
  </property>
  <property>
    <name>hive.metastore.warehouse.dir</name>
    <value>/user/hive/warehouse</value>
    <description>location of default database for the warehouse</description>
  </property>
  <property>
    <name>hive.metastore.uris</name>
    <value/>
    <description>Thrift URI for the remote metastore. Used by metastore client to connect to remote metastore.</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>org.apache.derby.jdbc.EmbeddedDriver</value>
    <description>Driver class name for a JDBC metastore</description>
  </property>
  <property>
    <name>javax.jdo.PersistenceManagerFactoryClass</name>
    <value>org.datanucleus.api.jdo.JDOPersistenceManagerFactory</value>
    <description>class implementing the jdo persistence</description>
  </property>
</configuration>

Step 9: By default, Hive uses the Derby database for its metastore. Initialize the Derby database.

Command: bin/schematool -initSchema -dbType derby
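Once the initialization finishes, the result can be checked with schematool's -info option, which prints the metastore connection details and schema version. A minimal sketch, assuming HIVE_HOME is set as in Step 3 (the fallback path is only a guess at the default extraction location):

```shell
# Fall back to the directory name used in this post if HIVE_HOME is not set.
SCHEMATOOL="${HIVE_HOME:-$HOME/apache-hive-2.1.0-bin}/bin/schematool"

if [ -x "$SCHEMATOOL" ]; then
  # Show the schema version recorded in the Derby metastore.
  "$SCHEMATOOL" -dbType derby -info
else
  echo "schematool not found at $SCHEMATOOL"
fi
```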

Step 10: Launch Hive.

Command: hive

Step 11: Run a few queries in the Hive shell.

Command: show databases;

Command: create table employee (id string, name string, dept string) row format delimited fields terminated by ' ' stored as textfile;

Command: show tables;

Step 12: To exit from Hive:

Command: exit;
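The same kind of statements can also be run without opening the interactive shell, using the Hive CLI's -e option, which executes a quoted query string and then returns to the terminal. A small sketch, assuming hive is on the PATH as configured in Step 3:

```shell
# Statements to run; both are taken from Step 11.
HQL="show databases; show tables;"

if command -v hive >/dev/null 2>&1; then
  hive -e "$HQL"
else
  echo "hive not found on PATH; re-check HIVE_HOME and PATH in .bashrc"
fi
```

This form is convenient for scripting, since the output goes to standard output and can be redirected to a file.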

Now that you are done with the Hive installation, the next step is to try out Hive commands in the Hive shell. Our next blog, “Top Hive Commands with Examples in HQL”, will help you master Hive commands.


When I type the hive --version command in the terminal

error message given : Missing Hive Execution Jar: /home/rishi/apache-hive-2.1.0-bin/bin/lib/hive-exec-*.jar

I am facing a problem launching Hive.

After typing the hive command, the result is:

AarekhRana:/usr/local/hive$ hive

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/hive/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

WARNING: An illegal reflective access operation has occurred

WARNING: Illegal reflective access by org.apache.hadoop.security.authentication.util.KerberosUtil (file:/usr/local/hadoop/share/hadoop/common/lib/hadoop-auth-2.9.0.jar) to method sun.security.krb5.Config.getInstance()

WARNING: Please consider reporting this to the maintainers of org.apache.hadoop.security.authentication.util.KerberosUtil

WARNING: Use --illegal-access=warn to enable warnings of further illegal reflective access operations

WARNING: All illegal access operations will be denied in a future release

Hive Session ID = 9abe20d7-31b6-4909-b630-141169d521bc

Exception in thread "main" java.lang.ClassCastException: class jdk.internal.loader.ClassLoaders$AppClassLoader cannot be cast to class java.net.URLClassLoader (jdk.internal.loader.ClassLoaders$AppClassLoader and java.net.URLClassLoader are in module java.base of loader 'bootstrap')

at org.apache.hadoop.hive.ql.session.SessionState.<init>(SessionState.java:410)

at org.apache.hadoop.hive.ql.session.SessionState.<init>(SessionState.java:386)

at org.apache.hadoop.hive.cli.CliSessionState.<init>(CliSessionState.java:60)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:705)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:683)

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at java.base/jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.base/java.lang.reflect.Method.invoke(Method.java:566)

at org.apache.hadoop.util.RunJar.run(RunJar.java:239)

at org.apache.hadoop.util.RunJar.main(RunJar.java:153)

What should I do?

Hello Edureka, great work with the tutorial.

Really awesome, it’s working. Wonderful tutorial for installation.

I get the below error when I try to initialize the derby database

org.apache.hadoop.hive.metastore.HiveMetaException: Failed to get schema version.

Underlying cause: java.sql.SQLException : No suitable driver found for jdbc:derby:;databaseName=file:///scratch/thirdeye/software/apache-hive-2.1.0-bin/metastore_db;create=true

SQL Error code: 0

I see derby-10.10.2.0.jar in $HIVE_HOME/lib . What could be the reason for this exception?

I am getting an error in step number 9

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/hduser/hive/apache-hive-2.1.0-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Metastore connection URL: jdbc:derby:;databaseName=/home/hduser/hive/apache-hive-2.1.0-bin/metastore_db;create=true

Metastore Connection Driver : org.apache.derby.jdbc.EmbeddedDriver

Metastore connection User: APP

org.apache.hadoop.hive.metastore.HiveMetaException: Failed to get schema version.

Underlying cause: java.sql.SQLException : Failed to create database '/home/hduser/hive/apache-hive-2.1.0-bin/metastore_db', see the next exception for details.

SQL Error code: 40000

Use --verbose for detailed stacktrace.

*** schemaTool failed ***

Please help

I’m trying to create external tables in Redshift using Hive->S3. Can you shed some light on this? Help much appreciated.

The default files in the directory “/apache-hive-2.3.2-bin/conf” don’t include a file named “hive-site.xml”, so in my case I just created a file with that name and configured it as in the post. After that I had no problem launching the Hive shell and using the Derby database to create tables and data.