
In this blog, I am going to talk about Apache Hadoop HDFS Architecture. HDFS and YARN are the two important concepts you need to master for Hadoop Certification. You already know that HDFS is a distributed file system deployed on low-cost commodity hardware. So, it’s high time we took a deep dive into Apache Hadoop HDFS Architecture and unlocked its beauty.


The topics that will be covered in this blog on Apache Hadoop HDFS Architecture are as follows:

Apache HDFS, or Hadoop Distributed File System, is a block-structured file system where each file is divided into blocks of a pre-determined size. These blocks are stored across a cluster of one or several machines. Apache Hadoop HDFS Architecture follows a Master/Slave Architecture, where a cluster comprises a single NameNode (Master node) and all the other nodes are DataNodes (Slave nodes). HDFS can be deployed on a broad spectrum of machines that support Java. Although one can run several DataNodes on a single machine, in the practical world these DataNodes are spread across various machines.

NameNode is the master node in the Apache Hadoop HDFS Architecture that maintains and manages the blocks present on the DataNodes (slave nodes). It manages the File System Namespace and controls access to files by clients, so it needs to run on a highly available server. I will be discussing the High Availability feature of Apache Hadoop HDFS in my next blog. The HDFS architecture is built in such a way that user data never resides on the NameNode; it holds only metadata. The data resides on DataNodes only.
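To make the "metadata only" idea concrete, here is a minimal sketch of the kind of bookkeeping a NameNode does. The class name, method names, paths and block IDs are all hypothetical and chosen for illustration; this is not how Hadoop implements it internally.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


@dataclass
class NameNodeMetadata:
    """Toy model of NameNode bookkeeping: names and locations only, no file bytes."""

    # file path -> ordered list of block IDs that make up the file
    file_to_blocks: Dict[str, List[str]] = field(default_factory=dict)
    # block ID -> DataNodes currently holding a replica of that block
    block_locations: Dict[str, Set[str]] = field(default_factory=dict)

    def add_block(self, path: str, block_id: str, datanodes: List[str]) -> None:
        """Record that a new block of the file lives on the given DataNodes."""
        self.file_to_blocks.setdefault(path, []).append(block_id)
        self.block_locations[block_id] = set(datanodes)

    def locate(self, path: str) -> List[Tuple[str, List[str]]]:
        """Return (block ID, sorted DataNode names) for each block, in file order."""
        return [(b, sorted(self.block_locations[b])) for b in self.file_to_blocks[path]]


meta = NameNodeMetadata()
meta.add_block("/user/demo/example.txt", "blk_A", ["dn1", "dn4", "dn6"])
meta.add_block("/user/demo/example.txt", "blk_B", ["dn3", "dn7", "dn9"])
print(meta.locate("/user/demo/example.txt"))
```

Notice that nothing in this structure contains the file contents. A client asks the NameNode where the blocks are and then talks to the DataNodes for the actual bytes.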


DataNodes are the slave nodes in HDFS. Unlike the NameNode, a DataNode runs on commodity hardware, that is, an inexpensive system which is not of high quality or high availability. The DataNode is a block server that stores the data in the local file system, such as ext3 or ext4.

By now, you must have realized that the NameNode is pretty important to us. If it fails, we are doomed. But don’t worry, we will be talking about how Hadoop solved this single point of failure problem in the next Apache Hadoop HDFS Architecture blog. So, just relax for now and let’s take one step at a time.


Apart from these two daemons, there is a third daemon or process called the Secondary NameNode. The Secondary NameNode works concurrently with the primary NameNode as a helper daemon. Don’t mistake the Secondary NameNode for a backup NameNode, because it is not one.

The Secondary NameNode performs regular checkpoints in HDFS by merging the edit log into the saved file system image (FsImage). That is why it is also called the CheckpointNode.
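If the idea of a checkpoint feels abstract, the following sketch may help. It assumes the namespace snapshot is a plain dictionary and the log of recent changes is a list of `(operation, path, value)` tuples. Both representations and the function name `checkpoint` are my own simplifications, not Hadoop's on-disk formats.

```python
def checkpoint(fsimage, edit_log):
    """Fold logged namespace changes into a copy of the snapshot.

    fsimage:  dict mapping path -> metadata (hypothetical representation)
    edit_log: list of (operation, path, value) tuples, oldest first
    """
    merged = dict(fsimage)  # never mutate the old snapshot in place
    for op, path, value in edit_log:
        if op == "create":
            merged[path] = value
        elif op == "delete":
            merged.pop(path, None)
        else:
            raise ValueError(f"unknown operation: {op}")
    return merged


old_image = {"/logs/a.txt": {"blocks": 2}}
recent_edits = [
    ("create", "/logs/b.txt", {"blocks": 1}),
    ("delete", "/logs/a.txt", None),
]
print(checkpoint(old_image, recent_edits))  # {'/logs/b.txt': {'blocks': 1}}
```

After a checkpoint, the log can start again from empty, which keeps it from growing without bound.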

Now, as we know, the data in HDFS is scattered across the DataNodes as blocks. Let’s have a look at what a block is and how it is formed.

Blocks are nothing but the smallest contiguous location on your hard drive where data is stored. In general, in any file system, you store the data as a collection of blocks. Similarly, HDFS stores each file as blocks which are scattered throughout the Apache Hadoop cluster. The default size of each block is 128 MB in Apache Hadoop 2.x (64 MB in Apache Hadoop 1.x), which you can configure as per your requirement.

It is not necessary that in HDFS, each file is stored in an exact multiple of the configured block size (128 MB, 256 MB etc.). Let’s take an example where I have a file “example.txt” of size 514 MB as shown in the above figure. Suppose that we are using the default block size of 128 MB. Then, how many blocks will be created? 5, right (4*128 MB + 2 MB). The first four blocks will be of 128 MB, but the last block will be of 2 MB only.
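The arithmetic above can be checked with a few lines of Python. This is only a sketch of the size calculation; the function name `split_into_blocks` is hypothetical and sizes are kept in whole megabytes for readability.

```python
def split_into_blocks(file_size_mb, block_size_mb=128):
    """Return the list of block sizes (in MB) a file of the given size occupies."""
    full_blocks, remainder = divmod(file_size_mb, block_size_mb)
    blocks = [block_size_mb] * full_blocks
    if remainder:
        # The last block only takes as much space as the leftover data.
        blocks.append(remainder)
    return blocks


print(split_into_blocks(514))  # [128, 128, 128, 128, 2]
print(split_into_blocks(248))  # [128, 120]
```

The important point is the last entry: a 2 MB tail does not get padded out to 128 MB.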

Now, you must be wondering why we need such a huge block size, i.e. 128 MB.

Well, whenever we talk about HDFS, we talk about huge data sets, i.e. terabytes and petabytes of data. If we had a block size of, let’s say, 4 KB, as in a Linux file system, we would have too many blocks and therefore too much metadata. Managing that many blocks and that much metadata would create huge overhead, which is something we don’t want.
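To get a feel for the numbers, here is a rough count of how many blocks 1 TB of data needs at each block size. It is a back-of-the-envelope sketch that ignores replication and assumes binary units (1 KB = 1024 bytes); the helper name `block_count` is mine.

```python
KB = 1024
MB = 1024 * KB
TB = 1024 * 1024 * MB


def block_count(data_bytes, block_bytes):
    """Number of blocks needed to hold the data (ceiling division)."""
    return -(-data_bytes // block_bytes)


small = block_count(TB, 4 * KB)
large = block_count(TB, 128 * MB)
print(small)           # 268435456
print(large)           # 8192
print(small // large)  # 32768
```

Every one of those blocks is an entry the NameNode has to track, so the 4 KB case means 32,768 times more block entries for the same data.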

Now that you understand what a block is, let us understand how the replication of these blocks takes place in the next section of this HDFS Architecture blog.

HDFS provides a reliable way to store huge data in a distributed environment as data blocks. The blocks are also replicated to provide fault tolerance. The default replication factor is 3, which is again configurable. So, as you can see in the figure below, each block is replicated three times and stored on different DataNodes (considering the default replication factor):

Therefore, if you are storing a file of 128 MB in HDFS using the default configuration, you will end up occupying a space of 384 MB (3*128 MB) as the blocks will be replicated three times and each replica will be residing on a different DataNode.
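The same calculation works for any file size and replication factor. A tiny sketch, with a hypothetical helper name:

```python
def raw_storage_mb(file_size_mb, replication=3):
    """Total cluster space (in MB) consumed once every block is replicated."""
    return file_size_mb * replication


print(raw_storage_mb(128))     # 384
print(raw_storage_mb(514))     # 1542
print(raw_storage_mb(128, 2))  # 256
```

This is worth keeping in mind when sizing a cluster: the usable capacity is roughly the raw capacity divided by the replication factor.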

Note: The NameNode collects block reports from the DataNodes periodically to maintain the replication factor. Therefore, whenever a block is over-replicated or under-replicated, the NameNode deletes or adds replicas as needed.
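Here is a simplified sketch of that decision. It assumes a block report is just a mapping from a DataNode name to the block IDs it holds, and it only works out how many replicas to add or delete per block. The function name and data shapes are hypothetical, and choosing which DataNode gains or loses a replica is left out.

```python
from collections import defaultdict


def plan_replication(block_reports, target=3):
    """Compare reported replica counts with the target replication factor.

    block_reports: dict mapping DataNode name -> iterable of block IDs it holds.
    Returns a dict mapping block ID -> ("add" or "delete", number of replicas).
    Blocks that no DataNode reports at all are not visible to this sketch.
    """
    holders = defaultdict(set)
    for datanode, blocks in block_reports.items():
        for block in blocks:
            holders[block].add(datanode)

    plan = {}
    for block, nodes in holders.items():
        difference = target - len(nodes)
        if difference > 0:
            plan[block] = ("add", difference)      # under-replicated
        elif difference < 0:
            plan[block] = ("delete", -difference)  # over-replicated
    return plan


reports = {
    "dn1": ["blk_A", "blk_B"],
    "dn2": ["blk_A"],
    "dn3": ["blk_A"],
    "dn4": ["blk_A"],
}
print(plan_replication(reports))
# {'blk_A': ('delete', 1), 'blk_B': ('add', 2)}
```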

Moving ahead, let’s talk more about how HDFS places replicas and what rack awareness is. The NameNode also ensures that all the replicas are not stored on the same rack. It follows an in-built Rack Awareness Algorithm to reduce latency as well as provide fault tolerance. Considering the replication factor is 3, the Rack Awareness Algorithm says that the first replica of a block will be stored on a local rack and the next two replicas will be stored on a different (remote) rack, each on a different DataNode within that remote rack, as shown in the figure above. If you have more replicas, the rest of the replicas will be placed on random DataNodes, provided not more than two replicas reside on the same rack, if possible.
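The placement rule for a replication factor of 3 can be sketched as follows. This is a toy version under my own assumptions: the cluster topology is a dictionary of rack name to DataNode names, choices are made at random, and real-world concerns such as free space or node load are ignored. The function name `place_replicas` is hypothetical.

```python
import random


def place_replicas(topology, local_rack, rng=None):
    """Pick three (rack, DataNode) targets following the rule described above.

    topology:   dict mapping rack name -> list of DataNode names
    local_rack: rack of the writer, which receives the first replica
    """
    if rng is None:
        rng = random.Random()

    # First replica: a DataNode on the local rack.
    first = rng.choice(topology[local_rack])

    # Second and third replicas: two different DataNodes on one remote rack.
    candidates = [
        rack for rack, nodes in topology.items()
        if rack != local_rack and len(nodes) >= 2
    ]
    if not candidates:
        raise ValueError("need a remote rack with at least two DataNodes")
    remote_rack = rng.choice(candidates)
    second, third = rng.sample(topology[remote_rack], 2)

    return [(local_rack, first), (remote_rack, second), (remote_rack, third)]


cluster = {
    "rack1": ["dn1", "dn2", "dn3"],
    "rack2": ["dn4", "dn5", "dn6"],
    "rack3": ["dn7", "dn8", "dn9"],
}
print(place_replicas(cluster, "rack1"))
```

Whatever the random choices are, the result always spans exactly two racks, so losing a whole rack never loses every copy of the block.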

This is what an actual Hadoop production cluster looks like. Here, you have multiple racks populated with DataNodes:

So, now you will be wondering why we need a Rack Awareness algorithm. The reasons are:

Now let’s talk about how the data read/write operations are performed on HDFS. HDFS follows the Write Once, Read Many philosophy. So, you can’t edit files already stored in HDFS. But you can append new data by re-opening the file.

Suppose an HDFS client wants to write a file named “example.txt” of size 248 MB.

Assume that the system block size is configured as 128 MB (default). So, the client will divide the file “example.txt” into 2 blocks: one of 128 MB (Block A) and the other of 120 MB (Block B).

Now, the following protocol will be followed whenever the data is written into HDFS:

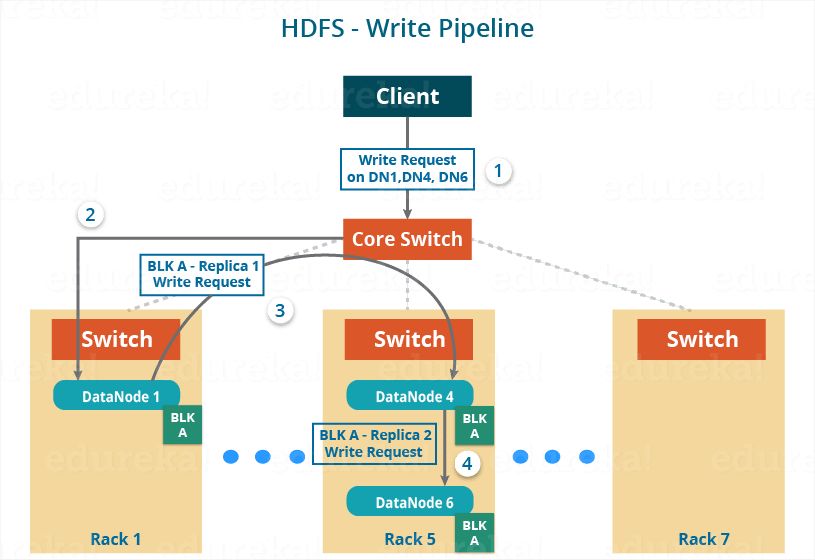

Before writing the blocks, the client confirms whether the DataNodes present in each list of IPs are ready to receive the data. In doing so, the client creates a pipeline for each of the blocks by connecting the individual DataNodes in the respective list for that block. Let us consider Block A. The list of DataNodes provided by the NameNode is:

For Block A, list A = {IP of DataNode 1, IP of DataNode 4, IP of DataNode 6}.

So, for block A, the client will be performing the following steps to create a pipeline:

Once the pipeline has been created, the client will push the data into the pipeline. Now, don’t forget that in HDFS, data is replicated based on the replication factor. So, here Block A will be stored on three DataNodes, as the assumed replication factor is 3. Moving ahead, the client will copy the block (A) to DataNode 1 only. The replication is always done by the DataNodes sequentially.

So, the following steps will take place during replication:

Once the block has been copied to all three DataNodes, a series of acknowledgements will take place to assure the client and the NameNode that the data has been written successfully. Then, the client will finally close the pipeline to end the TCP session.

As shown in the figure below, the acknowledgement happens in the reverse sequence, i.e. from DataNode 6 to 4 and then to 1. Finally, DataNode 1 will push three acknowledgements (including its own) into the pipeline and send them to the client. The client will inform the NameNode that the data has been written successfully. The NameNode will update its metadata and the client will shut down the pipeline.
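The data flow and the reverse acknowledgement flow can be traced with a small simulation. This is only a sketch of the message ordering for one block. It does not model networking, packets or failures, and the function name `write_block` and the event strings are hypothetical.

```python
def write_block(block, pipeline):
    """Trace one block through a DataNode pipeline.

    Returns (storage, events): which node stored the block, and the ordered
    list of data and acknowledgement messages.
    """
    storage = {}
    events = []

    # Data flows forward: client -> first DataNode -> second -> third.
    sender = "client"
    for node in pipeline:
        storage[node] = block
        events.append(f"{sender} -> {node}: data")
        sender = node

    # Acknowledgements flow backward, from the last DataNode to the client.
    hops = ["client"] + list(pipeline)
    for i in range(len(hops) - 1, 0, -1):
        events.append(f"{hops[i]} -> {hops[i - 1]}: ack")

    return storage, events


stored, trace = write_block("Block A", ["DataNode 1", "DataNode 4", "DataNode 6"])
for line in trace:
    print(line)
# client -> DataNode 1: data
# DataNode 1 -> DataNode 4: data
# DataNode 4 -> DataNode 6: data
# DataNode 6 -> DataNode 4: ack
# DataNode 4 -> DataNode 1: ack
# DataNode 1 -> client: ack
```

The client only ever sends the block once, to DataNode 1. The other two copies are made by the DataNodes themselves.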

Similarly, Block B will also be copied to the DataNodes in parallel with Block A. So, the following things are to be noted here:

As you can see in the above image, there are two pipelines, one for each block (A and B). Following is the flow of operations taking place for each block in its respective pipeline:

HDFS Read architecture is comparatively easy to understand. Let’s take the above example again, where the HDFS client now wants to read the file “example.txt”.

Now, the following steps will take place while reading the file:

While serving a read request from the client, HDFS selects the replica which is closest to the client. This reduces the read latency and the bandwidth consumption. Therefore, the replica that resides on the same rack as the reader node is selected, if possible.
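Choosing the closest replica can be sketched with a simple distance function. The distance values used here (0 for the same node, 2 for the same rack, 4 for a different rack) are illustrative assumptions, and the `(rack, node)` tuples and function names are hypothetical.

```python
def network_distance(reader, replica):
    """Rough distance between two (rack, node) locations; smaller is closer."""
    reader_rack, reader_node = reader
    replica_rack, replica_node = replica
    if reader_rack == replica_rack and reader_node == replica_node:
        return 0  # replica is on the reader's own node
    if reader_rack == replica_rack:
        return 2  # same rack, different node
    return 4      # different rack


def pick_replica(reader, replicas):
    """Return the replica location with the smallest distance to the reader."""
    return min(replicas, key=lambda location: network_distance(reader, location))


reader = ("rack1", "dn2")
replicas = [("rack2", "dn5"), ("rack1", "dn1"), ("rack2", "dn6")]
print(pick_replica(reader, replicas))  # ('rack1', 'dn1')
```

Here the reader on rack1 gets the copy from its own rack instead of pulling it across racks.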

Now, you should have a pretty good idea about Apache Hadoop HDFS Architecture. I understand that there is a lot of information here and it may not be easy to get it in one go. I would suggest you go through it again, and I am sure you will find it easier this time. In my next blog, I will be talking about Apache Hadoop HDFS Federation and High Availability Architecture.





What happens if, during a write operation, one of the DataNodes in the pipeline goes down?

I could imagine the following possible scenarios:

– the DataNode goes down while waiting for the data block

– the DataNode goes down while accepting the data block

– the DataNode goes down while waiting for the acknowledgement (i.e. the data block has been copied but further replication is still going on)

(by going down you can assume a machine crash or shutdown)

In the HDFS multi-block write pipeline figure, can’t we take the 2B path same as the 2A path, in order to shorten the pipeline (number of flow operations) for Block B?


Hi, I need help to further understand this statement from Rack Awareness versus the Block B sequence in the HDFS Multi-Block Write Pipeline diagram.

Rack Awareness:

“Considering the replication factor is 3, the Rack Awareness Algorithm says that the first replica of a block will be stored on a local rack and the next two replicas will be stored on a different (remote) rack but, on a different DataNode within that (remote) rack as shown in the figure above. If you have more replicas, the rest of the replicas will be placed on random DataNodes provided not more than two replicas reside on the same rack, if possible.”

HDFS Multi-Block Write Pipeline:

The first replica of Block B was written to Node 7 in rack 7, then to Node 9, still in rack 7, and then to Node 3 in rack 1.

My understanding is that the first replica will be on the local rack, the next write will go to a node on a different rack, and the third to another node within that same remote rack, but “Block B” shows otherwise. Can you help explain this further, please?

Thank you very much in advance.